BatchNorm

https://en.wikipedia.org/wiki/Batch_normalization

The inputs to each layer of a neural network follow some distribution, which changes during training because of randomness in the parameter initialization and randomness in the input data. The resulting change in the distribution of the inputs to internal layers during training is called internal covariate shift.

Batch normalization was initially proposed to mitigate internal covariate shift.

Transformation

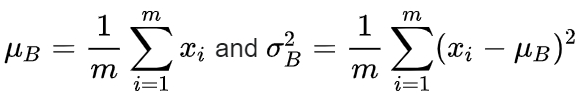

Let B denote a mini-batch of size m drawn from the training set. The empirical mean and variance of B are then

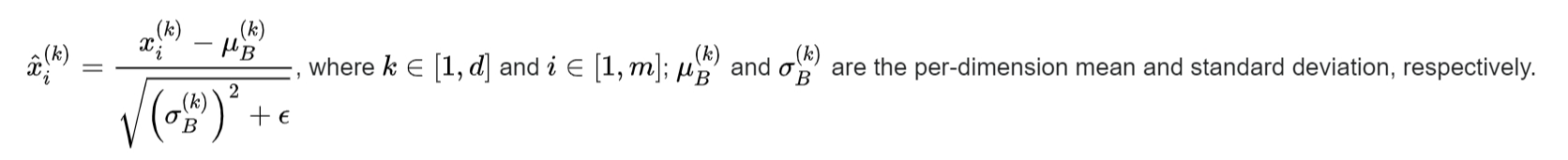
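A minimal NumPy sketch of these two statistics, assuming the mini-batch is stored as an array `x` of shape `(m, d)` (one row per example, one column per feature). The function name `batch_stats` is hypothetical. The variance here divides by m (the biased estimate), not m - 1.

```python
import numpy as np

def batch_stats(x):
    """Per-feature empirical mean and variance of a mini-batch x of shape (m, d)."""
    mu = x.mean(axis=0)                 # average over the m examples
    var = ((x - mu) ** 2).mean(axis=0)  # biased variance: divides by m, not m - 1
    return mu, var

x = np.array([[1.0, 2.0],
              [3.0, 6.0]])
mu, var = batch_stats(x)
print(mu, var)  # mu = [2. 4.], var = [1. 4.]
```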

Each dimension of the input is then normalized separately using these mini-batch statistics:

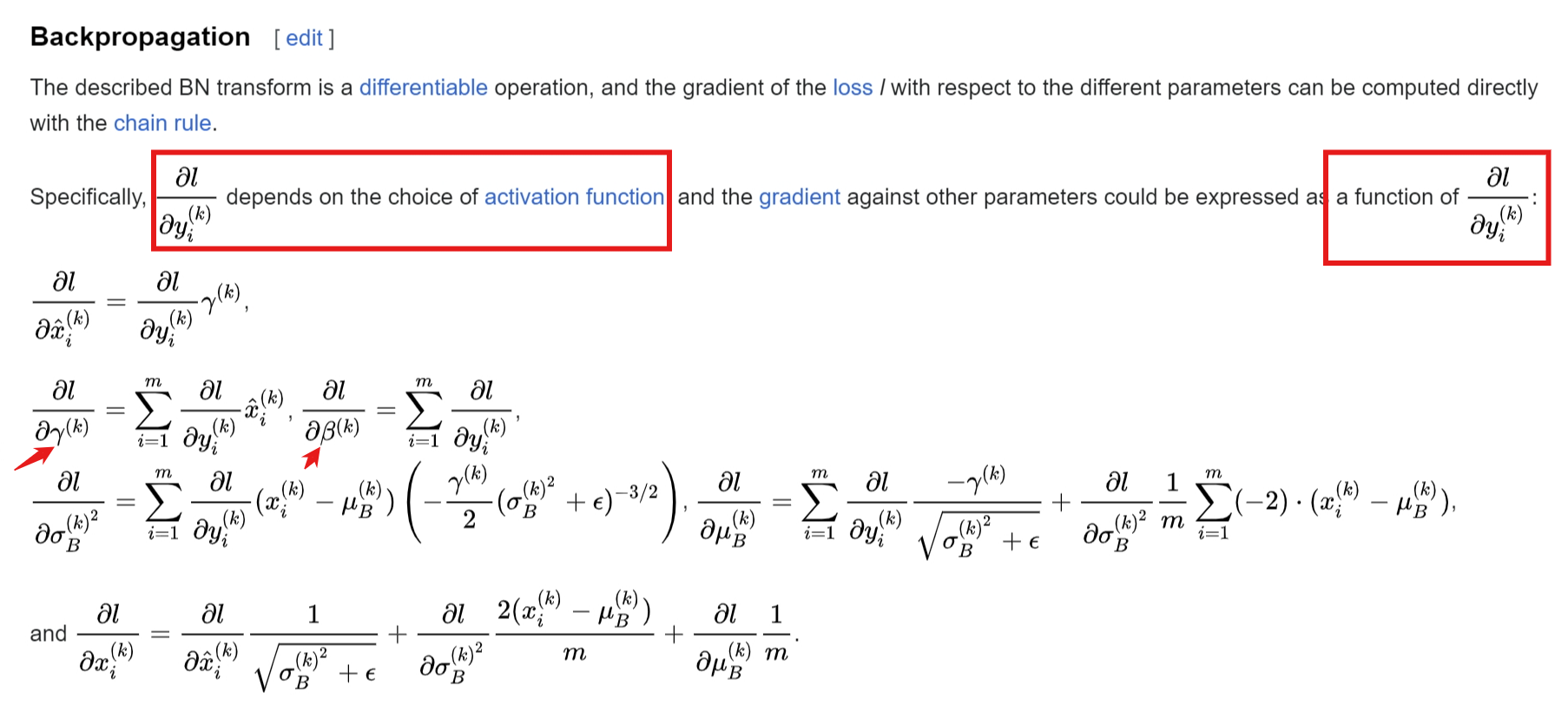
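The full training-time transform can be sketched in NumPy under the same `(m, d)` layout: normalize each feature with the batch statistics, then scale and shift with learnable per-feature parameters `gamma` and `beta`. The function name and the `eps=1e-5` default are illustrative assumptions; `eps` keeps the division stable when a feature's variance is close to zero. The function also returns intermediate values that a backward pass can reuse.

```python
import numpy as np

def batchnorm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm for x of shape (m, d); gamma and beta have shape (d,)."""
    mu = x.mean(axis=0)
    var = x.var(axis=0)                    # biased estimate (divides by m)
    x_hat = (x - mu) / np.sqrt(var + eps)  # zero mean, (nearly) unit variance per feature
    y = gamma * x_hat + beta               # learnable scale and shift
    cache = (x_hat, var, gamma, eps)       # saved for the backward pass
    return y, cache

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
y, _ = batchnorm_forward(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0), y.std(axis=0))  # approximately 0 and 1 for every feature
```

With `gamma = 1` and `beta = 0` the output is just the normalized input; learning other values lets the network undo the normalization where that helps.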

BP (backpropagation)

Computing the gradients needed to update the parameters:

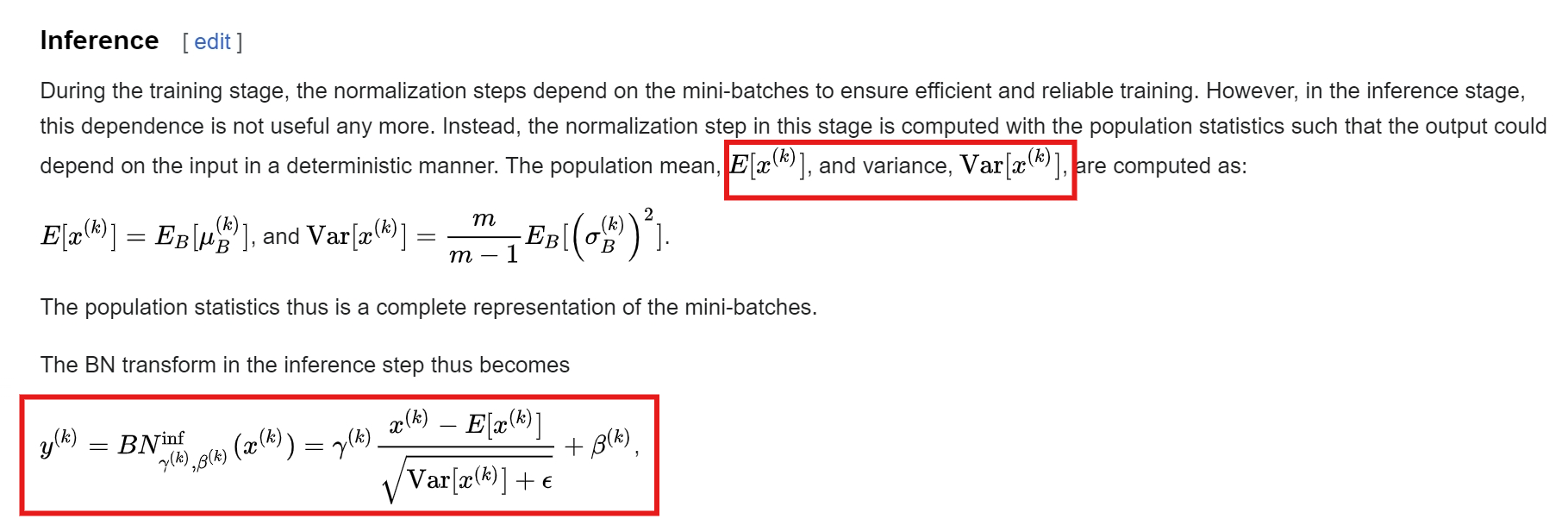
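A NumPy sketch of the backward pass for the same `(m, d)` layout, checked against central finite differences. It repeats a compact forward pass so the block runs on its own. The loss `L = sum(w * y)` with a random `w` is only a stand-in so that dL/dy is a known array; `batchnorm_backward` and `numeric_grad` are hypothetical helper names. The `dx` line is the chain rule through the normalized input, the batch mean, and the batch variance, simplified into one expression.

```python
import numpy as np

def batchnorm_forward(x, gamma, beta, eps=1e-5):
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta, (x_hat, var, gamma, eps)

def batchnorm_backward(dy, cache):
    """Given dL/dy of shape (m, d), return dL/dx, dL/dgamma, dL/dbeta."""
    x_hat, var, gamma, eps = cache
    m = dy.shape[0]
    inv_std = 1.0 / np.sqrt(var + eps)
    dgamma = (dy * x_hat).sum(axis=0)
    dbeta = dy.sum(axis=0)
    dx_hat = dy * gamma
    # Chain rule through x_hat, the batch mean and the batch variance, collapsed
    dx = inv_std / m * (m * dx_hat - dx_hat.sum(axis=0)
                        - x_hat * (dx_hat * x_hat).sum(axis=0))
    return dx, dgamma, dbeta

# Stand-in scalar loss L = sum(w * y), so dL/dy = w
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 3))
gamma, beta = rng.normal(size=3), rng.normal(size=3)
w = rng.normal(size=(8, 3))

y, cache = batchnorm_forward(x, gamma, beta)
dx, dgamma, dbeta = batchnorm_backward(w, cache)

def loss(x_, gamma_, beta_):
    return np.sum(w * batchnorm_forward(x_, gamma_, beta_)[0])

def numeric_grad(f, a, h=1e-6):
    """Central differences of f() with respect to array a, perturbed in place."""
    g = np.zeros_like(a)
    for idx in np.ndindex(a.shape):
        old = a[idx]
        a[idx] = old + h
        f_plus = f()
        a[idx] = old - h
        f_minus = f()
        a[idx] = old  # restore the original value
        g[idx] = (f_plus - f_minus) / (2 * h)
    return g

# Maximum absolute difference between analytic and numeric dL/dx; expected to be very small
print(np.max(np.abs(dx - numeric_grad(lambda: loss(x, gamma, beta), x))))
```

The gradients `dgamma` and `dbeta` are what a gradient-descent step applies to `gamma` and `beta`; `dx` is passed on to the preceding layer.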

Inference
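At inference time a batch may hold a single example, so the batch statistics are replaced by fixed estimates of the population mean and variance collected during training. The sketch below assumes one common choice, an exponential moving average with `momentum=0.1`, and feeds the unbiased (m - 1) batch variance into that average; both choices are assumptions, and `SimpleBatchNorm` is a hypothetical class, not a library API.

```python
import numpy as np

class SimpleBatchNorm:
    """Hypothetical minimal BN layer for inputs of shape (m, d); not a library API."""

    def __init__(self, d, momentum=0.1, eps=1e-5):
        self.gamma, self.beta = np.ones(d), np.zeros(d)
        self.running_mean, self.running_var = np.zeros(d), np.ones(d)
        self.momentum, self.eps = momentum, eps

    def __call__(self, x, training):
        if training:
            mu, var = x.mean(axis=0), x.var(axis=0)  # batch statistics (biased variance)
            # Moving averages of the statistics; the unbiased variance is an assumed choice
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mu
            self.running_var = ((1 - self.momentum) * self.running_var
                                + self.momentum * x.var(axis=0, ddof=1))
        else:
            mu, var = self.running_mean, self.running_var  # fixed, independent of the batch
        x_hat = (x - mu) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta

rng = np.random.default_rng(0)
bn = SimpleBatchNorm(d=2)
for _ in range(500):  # "training": update the running statistics
    bn(rng.normal(loc=[3.0, -1.0], scale=[2.0, 0.5], size=(32, 2)), training=True)
single = np.array([[3.0, -1.0]])  # a batch of one example
print(bn(single, training=False))  # close to [[0, 0]]: the input sits at the population mean
```

Because inference uses fixed statistics, each example's output no longer depends on which other examples share its batch.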

Last modified: 10 March 2024