Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks

Abstract

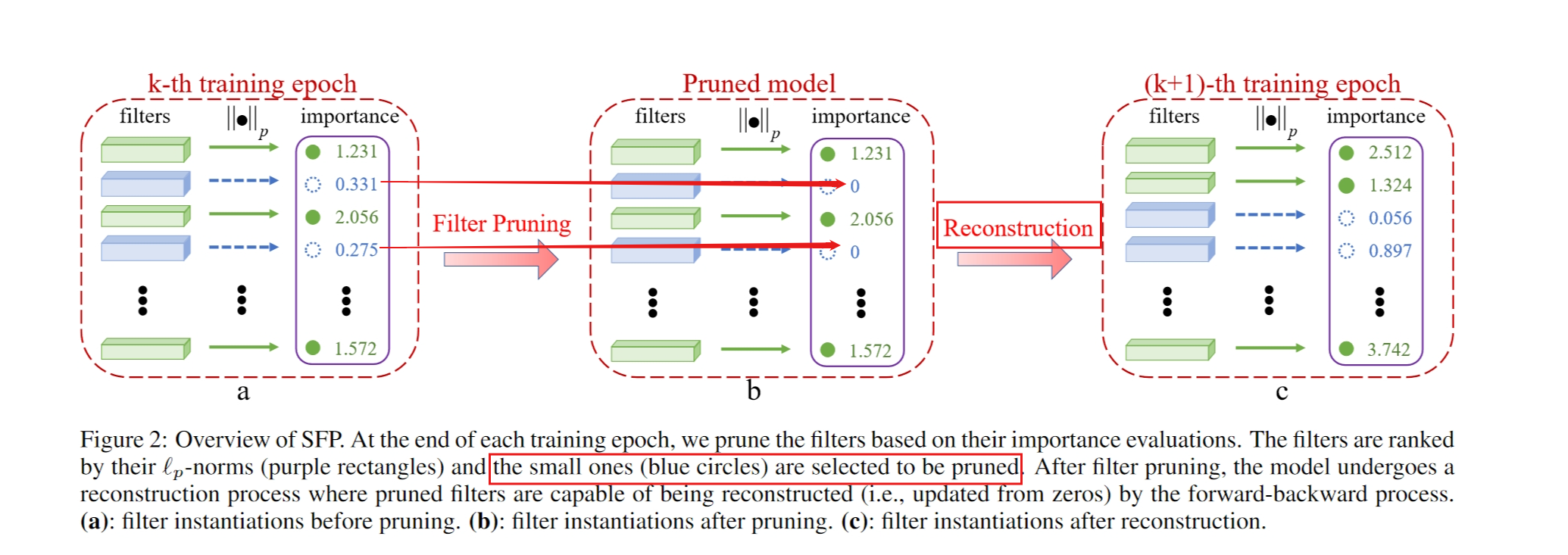

The proposed Soft Filter Pruning (SFP) method allows the pruned filters to keep being updated when the model is trained after pruning. This gives two advantages:

Larger model capacity (the pruned filters can still be updated).

Less dependence on the pre-trained model (the larger capacity lets SFP train from scratch and prune the model simultaneously).

Introduction

Efficient Architectures

ResNet: Reference [7] [He et al., 2016a] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, 2016.

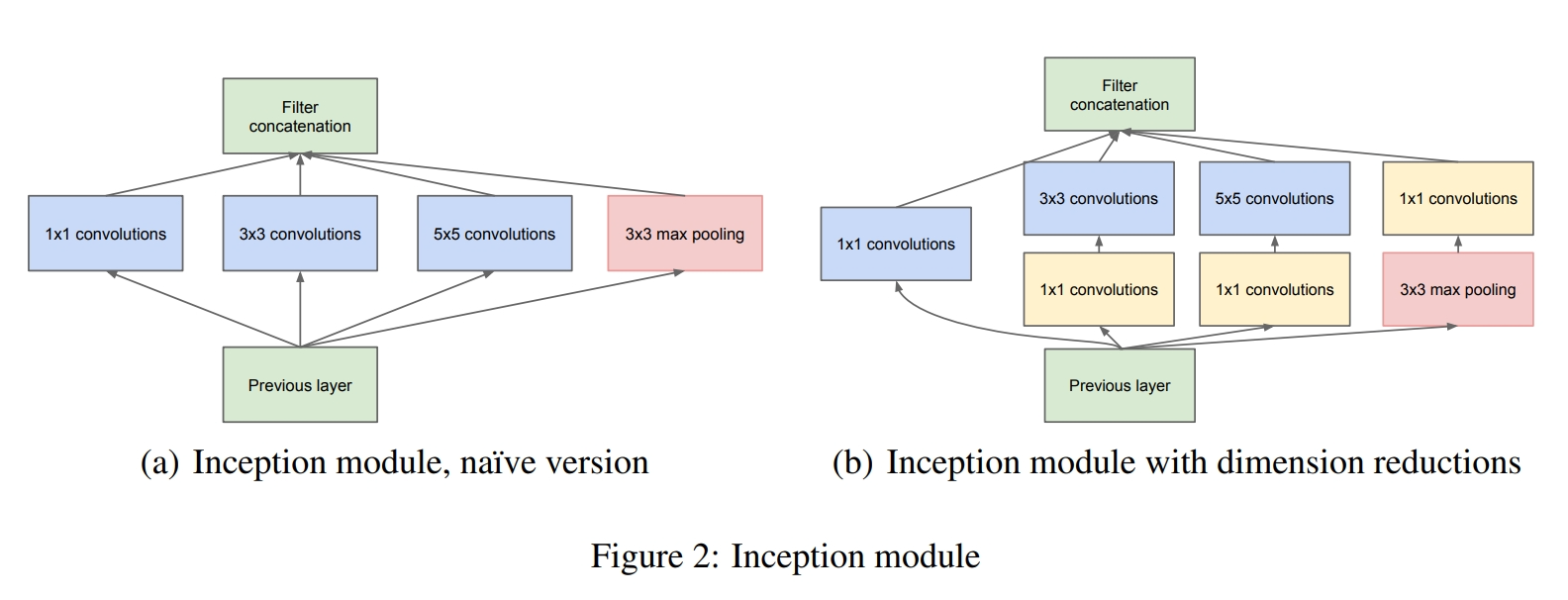

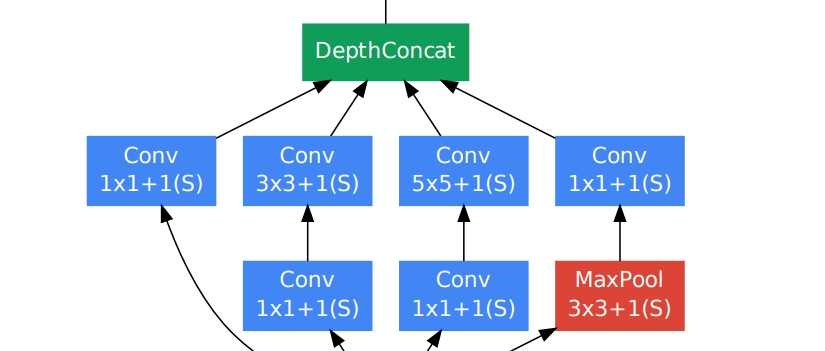

Inception: Reference [23] [Szegedy et al., 2015] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In CVPR, 2015.

Recent Efforts

weight pruning

filter pruning

Nevertheless, most previous works on filter pruning still suffer from two problems:

(1) reduced model capacity, and

(2) dependence on a pre-trained model.

Related Works

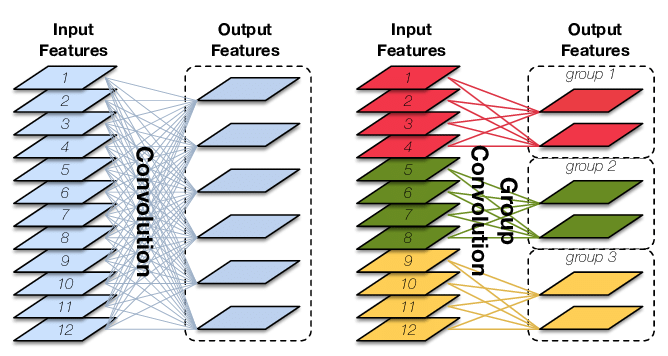

Most previous works on accelerating CNNs can be roughly divided into three categories, namely,

matrix decomposition,

low-precision weights,

and pruning.

weight pruning

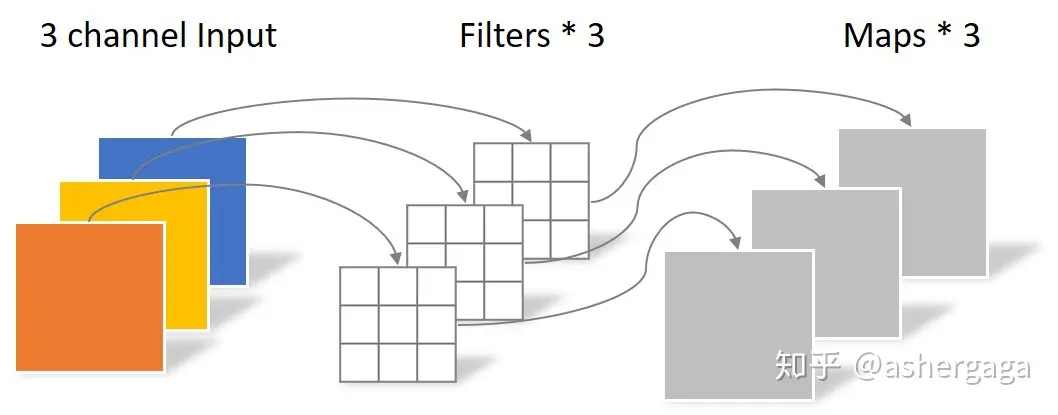

filter pruning

One method uses the L_1-norm to select unimportant filters.

Another introduces L_1 regularization on the scaling factors in batch normalization (BN) layers as a penalty term, and prunes the channels with small scaling factors.

A third uses a Taylor-expansion-based pruning criterion to approximate the change in the cost function induced by pruning.

Yet another adopts statistics from the next layer to guide the importance evaluation of filters.
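The BN-scaling-factor approach above can be sketched as follows. This is a minimal illustration under stated assumptions, not the cited work's implementation: `gamma` stands for the BN scaling factors of one layer, `lam` is a hypothetical penalty weight, and the helper names are made up for this example.

```python
import numpy as np

def slimming_loss(task_loss: float, gammas: list, lam: float = 1e-4) -> float:
    """Task loss plus an L1 penalty on all BN scaling factors (lam is a hypothetical weight)."""
    penalty = sum(np.abs(g).sum() for g in gammas)
    return task_loss + lam * penalty

def channels_to_prune(gamma: np.ndarray, ratio: float) -> np.ndarray:
    """Indices of the int(len(gamma) * ratio) channels with the smallest |gamma|."""
    n_prune = int(len(gamma) * ratio)
    return np.argsort(np.abs(gamma))[:n_prune]

gamma = np.array([0.9, -0.01, 0.5, 0.02])
print(channels_to_prune(gamma, 0.5))        # [1 3]
print(slimming_loss(1.0, [gamma], lam=0.1))
```

The penalty pushes unimportant scaling factors toward zero during training, so selecting by |gamma| afterwards separates weak channels from strong ones.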

Methodology

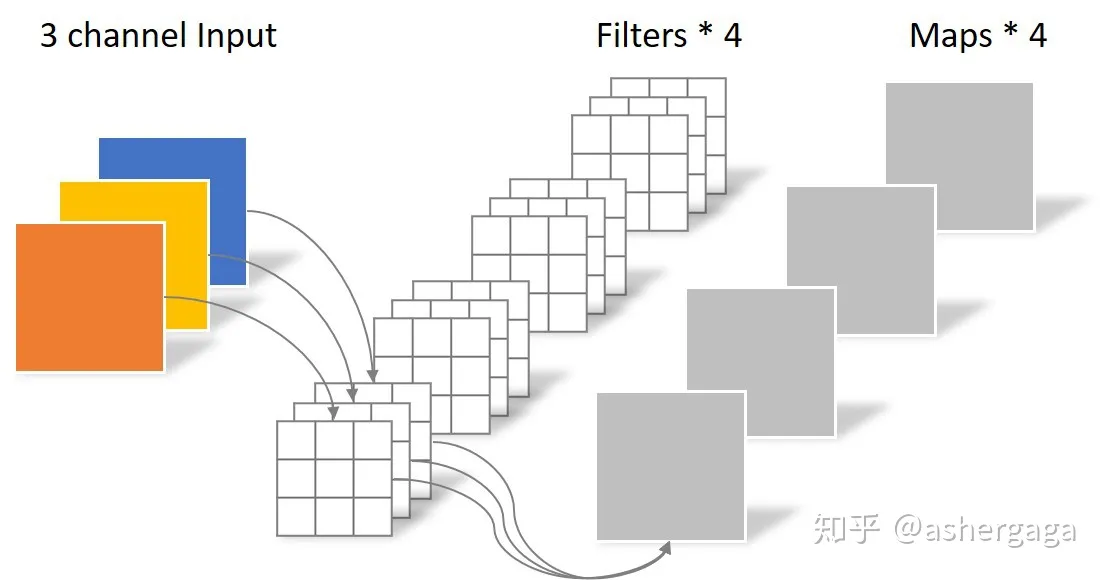

Preliminaries

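Assume a CNN with L layers is parameterized by {W^{(i)} ∈ R^{N_{i+1}×N_i×K×K}, 1 ≤ i ≤ L}, where:

W^{(i)} denotes the connection weights of the i-th layer;

N_i denotes the number of input channels of the i-th convolution layer (so N_{i+1} is its number of output channels);

K denotes the kernel size;

L denotes the number of layers.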

The shapes of the input tensor U and the output tensor V are N_i × H_i × W_i and N_{i+1} × H_{i+1} × W_{i+1}, respectively.

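The convolutional operation of the i-th layer can be written as:

V_{i,j} = F_{i,j} * U, for 1 ≤ j ≤ N_{i+1},   (1)

where F_{i,j} ∈ R^{N_i×K×K} represents the j-th filter of the i-th layer, * denotes convolution, and V_{i,j} is the j-th channel of the output tensor V.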
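To make the shapes concrete, here is a naive sketch of the i-th layer's convolution: each of the N_{i+1} filters F_{i,j} ∈ R^{N_i×K×K} slides over the whole input U and produces one output channel. It assumes stride 1, no padding, no bias, and cross-correlation (what most deep-learning libraries call convolution); the function name is hypothetical.

```python
import numpy as np

def conv_layer(U: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Apply N_{i+1} filters to an input U of shape (N_i, H, W).

    weights has shape (N_{i+1}, N_i, K, K); output channel j is filter j
    cross-correlated with U (stride 1, no padding, no bias).
    """
    n_out, n_in, k, _ = weights.shape
    assert U.shape[0] == n_in, "filter depth must equal the number of input channels"
    h_out, w_out = U.shape[1] - k + 1, U.shape[2] - k + 1
    V = np.zeros((n_out, h_out, w_out))
    for j in range(n_out):              # one output channel per filter F_{i,j}
        for y in range(h_out):
            for x in range(w_out):
                V[j, y, x] = np.sum(weights[j] * U[:, y:y + k, x:x + k])
    return V

U = np.random.rand(3, 8, 8)            # N_i = 3 input channels
weights = np.random.rand(4, 3, 3, 3)   # N_{i+1} = 4 filters, K = 3
print(conv_layer(U, weights).shape)    # (4, 6, 6)
```

Because output channel j depends only on filter j, zeroing a filter zeroes exactly one output channel, which is what makes filter-level pruning structured.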

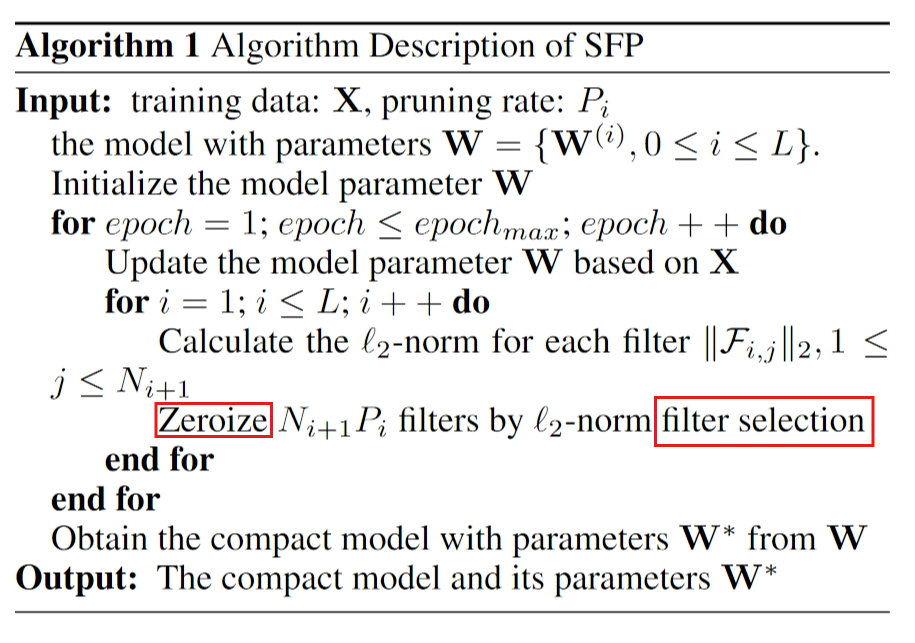

Let us assume the pruning rate of SFP is P_i for the i-th layer.

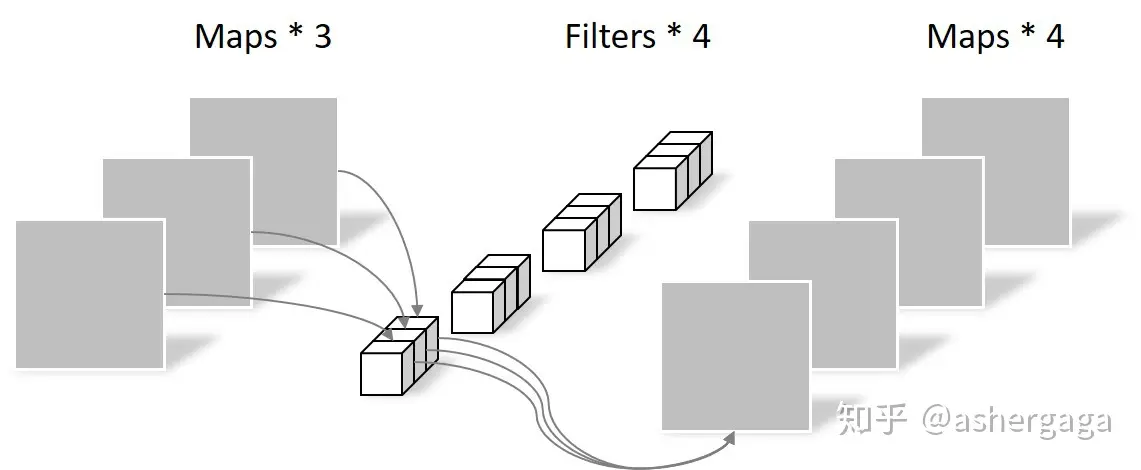
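A back-of-the-envelope check of what the pruning rate P_i means: layer i keeps N_{i+1}(1 - P_i) filters, and because layer i-1 was pruned too, it also sees only N_i(1 - P_{i-1}) input channels. The sketch below assumes a hypothetical 64-to-64-channel 3×3 layer with a 32×32 output, the same rate 0.3 in this and the previous layer, floor rounding of the number of pruned filters, and counts only multiply-accumulates (no bias or BN).

```python
def kept_filters(n_filters: int, rate: float) -> int:
    """Filters left after pruning int(n_filters * rate) of them (rounding is an assumption)."""
    return n_filters - int(n_filters * rate)

def conv_macs(n_out: int, n_in: int, k: int, h_out: int, w_out: int) -> int:
    """Multiply-accumulates of a K x K convolution, ignoring bias and batch norm."""
    return n_out * n_in * k * k * h_out * w_out

# Hypothetical layer: 64 -> 64 channels, 3x3 kernels, 32x32 output, rate 0.3 here and upstream
before = conv_macs(64, 64, 3, 32, 32)
after = conv_macs(kept_filters(64, 0.3), kept_filters(64, 0.3), 3, 32, 32)
print(kept_filters(64, 0.3))       # 45
print(round(after / before, 3))    # 0.494
```

With a uniform rate P, a layer's cost therefore shrinks to roughly (1 - P)^2 of the original, since both its output and input channel counts drop.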

Soft Filter Pruning (SFP)

The key idea is to keep updating the pruned filters during training.

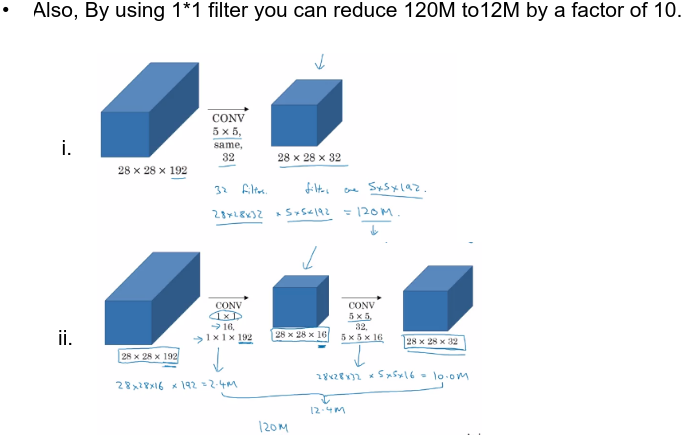

After each epoch, the L_2-norm of every filter is computed for each weighted layer and used as the criterion of our filter selection strategy.

We then prune the selected filters by setting their weights to zero, and the next training epoch follows. Finally, the original deep CNN is pruned into a compact and efficient model.
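The epoch loop above can be sketched on a single toy layer. This is an illustration under loud assumptions, not the authors' code: `train_one_epoch` is a hypothetical stand-in that takes one gradient step on the toy loss 0.5·||w - target||^2 instead of a real epoch of SGD, `target` is made-up data, and the rate 0.25 is arbitrary.

```python
import numpy as np

def filter_l2_norms(w: np.ndarray) -> np.ndarray:
    """L2-norm of each filter of a (N_out, N_in, K, K) weight tensor."""
    return np.sqrt((w.reshape(w.shape[0], -1) ** 2).sum(axis=1))

def soft_prune(w: np.ndarray, rate: float) -> np.ndarray:
    """Zero the int(N_out * rate) smallest-norm filters; the tensor keeps its shape."""
    n_prune = int(w.shape[0] * rate)
    idx = np.argsort(filter_l2_norms(w))[:n_prune]
    w = w.copy()
    w[idx] = 0.0
    return w

def train_one_epoch(w: np.ndarray, target: np.ndarray, lr: float = 0.5) -> np.ndarray:
    """Toy stand-in for an epoch of SGD: one gradient step on 0.5 * ||w - target||^2."""
    return w - lr * (w - target)

rng = np.random.default_rng(0)
target = rng.normal(size=(8, 4, 3, 3))   # hypothetical "ideal" weights for the toy loss
w = rng.normal(size=(8, 4, 3, 3))
for epoch in range(5):
    w = train_one_epoch(w, target)       # pruned filters are updated too (soft pruning)
    w = soft_prune(w, rate=0.25)         # then zero the 2 smallest-norm filters
print((filter_l2_norms(w) == 0).sum())   # 2
```

The important detail is that `train_one_epoch` receives the zeroed filters like any other weights, so they become non-zero again and compete in the next selection; a hard-pruning variant would instead remove them permanently.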

Filter selection

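We use the L_p-norm to evaluate the importance of each filter, as in Eq. (2):

‖F_{i,j}‖_p = (Σ_{n=1}^{N_i} Σ_{k_1=1}^{K} Σ_{k_2=1}^{K} |F_{i,j}(n, k_1, k_2)|^p)^{1/p}.   (2)

Filters with a smaller L_p-norm are considered less important; in the i-th layer, the N_{i+1}P_i filters with the smallest norms are selected for pruning.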
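A minimal sketch of the selection criterion, assuming weights stored as a NumPy array of shape (N_{i+1}, N_i, K, K) and floor rounding of the number of selected filters; both function names are hypothetical.

```python
import numpy as np

def filter_lp_norms(weights: np.ndarray, p: float = 2.0) -> np.ndarray:
    """L_p-norm of every filter j of a (N_{i+1}, N_i, K, K) weight tensor."""
    flat = np.abs(weights.reshape(weights.shape[0], -1))
    return (flat ** p).sum(axis=1) ** (1.0 / p)

def select_filters(weights: np.ndarray, rate: float, p: float = 2.0) -> np.ndarray:
    """Sorted indices of the int(N_{i+1} * rate) filters with the smallest L_p-norm."""
    n_prune = int(weights.shape[0] * rate)
    return np.sort(np.argsort(filter_lp_norms(weights, p))[:n_prune])

# 4 constant filters with N_i = 2, K = 3, so each has 18 entries equal to v
weights = np.stack([np.full((2, 3, 3), v) for v in (0.5, -0.1, 2.0, 0.05)])
print(filter_lp_norms(weights, p=1))       # L1-norm: 18 * |v| per filter
print(select_filters(weights, rate=0.5))   # [1 3]
```

Only the magnitudes matter, so the filter with value -0.1 ranks as less important than the one with 0.5.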

Filter Pruning

In the filter pruning step, we prune all weighted layers at the same time. Since the filters of every layer can be pruned in parallel, this step costs negligible computation time.
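Pruning every weighted layer in one pass can be sketched with a per-layer boolean mask. The layer names, the per-layer `rates` dict, and the use of an L2-norm criterion with floor rounding are illustrative assumptions; each layer is processed independently, so the loop body could run in parallel.

```python
import numpy as np

def prune_all_layers(layers: dict, rates: dict) -> dict:
    """Zero the lowest-L2-norm filters of every layer; shapes are unchanged."""
    pruned = {}
    for name, w in layers.items():
        norms = np.linalg.norm(w.reshape(w.shape[0], -1), axis=1)   # L2-norm per filter
        n_prune = int(w.shape[0] * rates[name])
        mask = np.ones(w.shape[0], dtype=bool)
        mask[np.argsort(norms)[:n_prune]] = False                   # False marks a pruned filter
        pruned[name] = w * mask[:, None, None, None]                # zero pruned filters
    return pruned

rng = np.random.default_rng(1)
layers = {"conv1": rng.normal(size=(16, 3, 3, 3)), "conv2": rng.normal(size=(32, 16, 3, 3))}
out = prune_all_layers(layers, {"conv1": 0.25, "conv2": 0.5})
print({k: int((np.abs(v).sum(axis=(1, 2, 3)) == 0).sum()) for k, v in out.items()})
# {'conv1': 4, 'conv2': 16}
```

The cost is one norm computation and one sort per layer, which is small next to a training epoch.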

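Reconstruction

After the pruning step, the network is trained for the next epoch. The zeroed filters receive gradients and are updated by back-propagation like all other weights, so a filter pruned in one epoch can regain non-zero values and need not be selected again at the next pruning step.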

Obtaining Compact Model

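After training converges, the model contains many zero filters. A zero filter produces an all-zero feature map (for a convolution without bias), so the filter, its feature map, and the matching input channels of the next layer can be removed without affecting the prediction. With pruning rate P_i in the i-th layer and P_{i-1} in the previous layer, the i-th layer is rebuilt with N_{i+1}(1 - P_i) filters and N_i(1 - P_{i-1}) input channels. Finally, a compact model {W^{*(i)} ∈ R^{N_{i+1}(1-P_i)×N_i(1-P_{i-1})×K×K}, 1 ≤ i ≤ L} is obtained.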
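Rebuilding a smaller layer can be sketched for two consecutive convolutions: drop the all-zero filters of layer i and the matching input channels of layer i+1. This assumes a plain conv-to-conv chain with no bias or batch normalization between the layers (a BN shift would make a "zero" channel non-zero) and no residual or concatenation links; the function name is hypothetical.

```python
import numpy as np

def compact_pair(w_i: np.ndarray, w_next: np.ndarray):
    """Remove zero filters of layer i and the matching input channels of layer i+1.

    w_i: (N_{i+1}, N_i, K, K); w_next: (N_{i+2}, N_{i+1}, K, K).
    Assumes no bias or batch norm between the two layers.
    """
    keep = np.abs(w_i).reshape(w_i.shape[0], -1).sum(axis=1) > 0   # non-zero filters
    return w_i[keep], w_next[:, keep]

rng = np.random.default_rng(2)
w1 = rng.random((8, 3, 3, 3))
w1[[1, 5]] = 0.0                     # two filters zeroed by SFP
w2 = rng.random((16, 8, 3, 3))
c1, c2 = compact_pair(w1, w2)
print(c1.shape, c2.shape)            # (6, 3, 3, 3) (16, 6, 3, 3)
```

Removing the input channels of the next layer is what turns the zero filters into an actual speedup rather than just sparse weights.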