Accelerating Convolutional Networks via Global & Dynamic Filter Pruning

Abstract

Most approaches tend to prune filters in a layer-wise fixed manner, which is incapable of dynamically recovering previously removed filters or of jointly optimizing the pruned network across layers.

In this paper, we propose a novel global & dynamic pruning (GDP) scheme to prune redundant filters for CNN acceleration.

In particular, GDP first globally prunes the unsalient filters across all layers, using a global discriminative function based on the prior knowledge of each filter.

Second, it dynamically updates the filter saliency across the pruned sparse network and then recovers mistakenly pruned filters, followed by a retraining phase to improve the model accuracy.

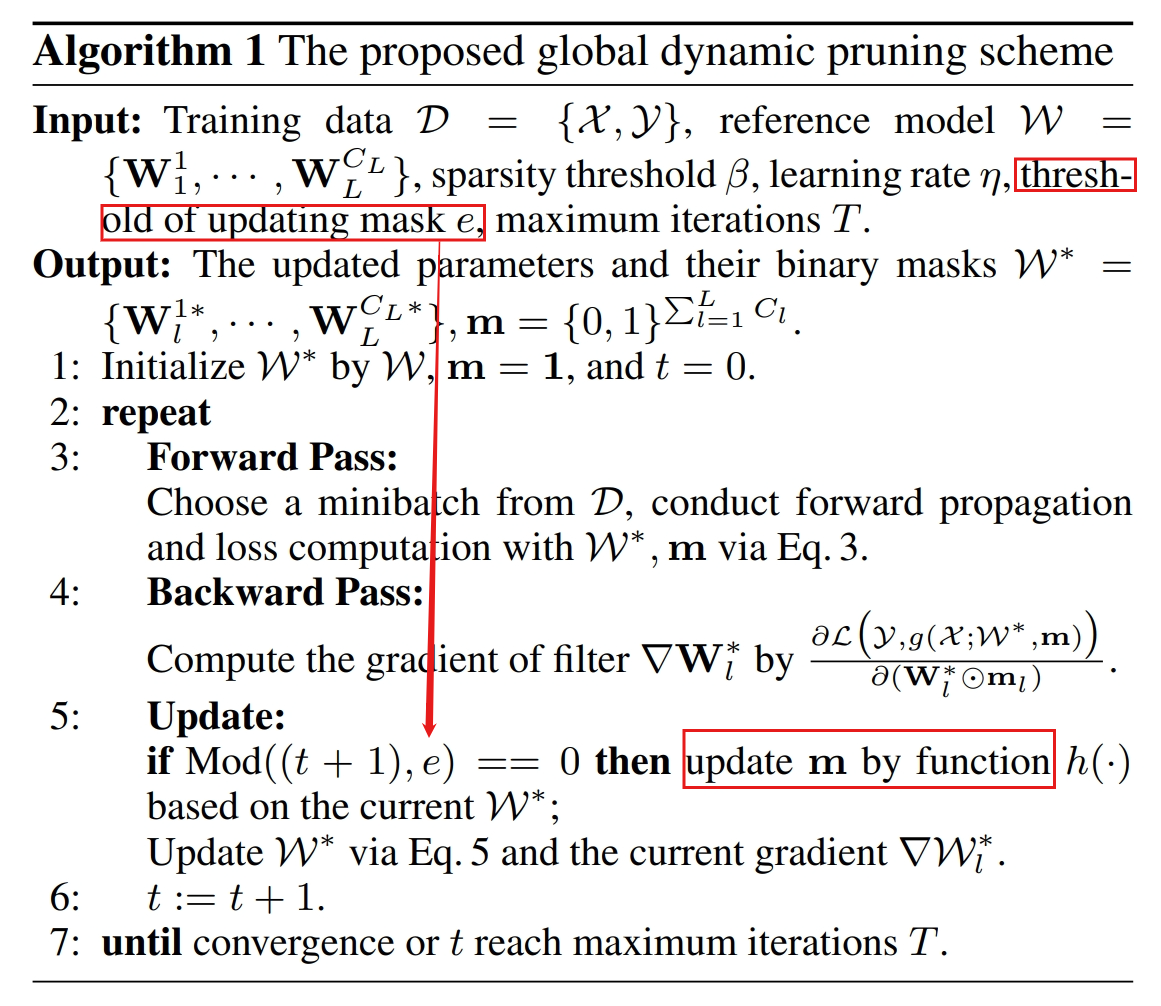

Specifically, we effectively solve the corresponding non-convex optimization problem of the proposed GDP via stochastic gradient descent with greedy alternating updates.

Global Dynamic Pruning

The Proposed Pruning Scheme

Then, the conventional convolution layer computation can be rewritten as:

Here, the mask entry for the k-th filter in the l-th layer is 1 if that filter is salient, and 0 otherwise.

denotes the Khatri-Rao product operator.
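The effect of a binary per-filter mask on a convolution layer can be illustrated with a minimal NumPy sketch. It assumes that the product with the mask simply zeroes every weight of a masked filter; the helper names `apply_filter_mask` and `conv2d_valid`, the tensor layout `(N, C, d, d)`, and the toy sizes are hypothetical and chosen only for illustration.

```python
import numpy as np

def apply_filter_mask(weights, mask):
    """Zero out whole filters. weights: (N, C, d, d); mask: (N,) with 0/1 entries."""
    return weights * mask.reshape(-1, 1, 1, 1)

def conv2d_valid(x, weights):
    """Naive 'valid' cross-correlation. x: (C, H, W); weights: (N, C, d, d)."""
    n, _, d, _ = weights.shape
    h_out, w_out = x.shape[1] - d + 1, x.shape[2] - d + 1
    out = np.zeros((n, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[:, i:i + d, j:j + d]
            # Contract each filter with the patch over (C, d, d).
            out[:, i, j] = np.tensordot(weights, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 8, 8))        # toy input with 3 channels
w = rng.normal(size=(4, 3, 3, 3))     # 4 filters of size 3x3 over 3 channels
mask = np.array([1.0, 0.0, 1.0, 0.0]) # filters 1 and 3 are treated as unsalient

y_masked = conv2d_valid(x, apply_filter_mask(w, mask))
```

In this sketch the output channels of masked filters are identically zero, while the channels of salient filters match the unmasked layer, so a masked filter can later be removed without changing the output.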

We propose to solve the following optimization problem:

The problem is NP-hard because of the || · ||_0 operator.

Here, h(·) is a global discriminative function that determines the saliency values of the filters and depends on the prior knowledge of W^∗.

Each output entry of h(·) is binary: it is 1 if the corresponding filter is salient, and 0 otherwise.
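One way to picture a global, binary-valued selection is to rank the saliency scores of all filters from all layers together and keep a fixed fraction of them. The sketch below assumes exactly that; the function name `global_mask`, the parameter `keep_ratio`, and the example scores are hypothetical, and the thresholding rule is an illustrative assumption rather than the definition of h(·).

```python
import numpy as np

def global_mask(scores_per_layer, keep_ratio):
    """Return one 0/1 mask per layer, using a single threshold shared by all layers."""
    all_scores = np.concatenate(scores_per_layer)
    n_keep = max(1, int(np.ceil(keep_ratio * all_scores.size)))
    # Score of the n_keep-th most salient filter over the whole network.
    threshold = np.sort(all_scores)[::-1][n_keep - 1]
    # Ties at the threshold are all kept, so slightly more than n_keep may survive.
    return [(s >= threshold).astype(np.float64) for s in scores_per_layer]

scores = [np.array([0.9, 0.1, 0.5]),        # layer 1: 3 filters
          np.array([0.05, 0.7, 0.3, 0.8])]  # layer 2: 4 filters
masks = global_mask(scores, keep_ratio=0.5)
```

Because the threshold is shared, the number of filters kept per layer is not fixed in advance: here layer 1 keeps two of three filters and layer 2 keeps two of four.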

Solver

Since every filter has a mask, we update W^∗ as follows:
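A masked update of this kind can be sketched on a toy linear layer in which each row of the weight matrix plays the role of one filter. The sketch assumes that the forward pass uses the masked weights while the gradient taken with respect to those masked weights is applied to the dense weights, so that masked filters keep changing and could later be recovered. This update rule, the squared-error loss, and the name `masked_sgd_step` are assumptions made for illustration.

```python
import numpy as np

def masked_sgd_step(w_dense, mask, x, target, lr=0.1):
    """One SGD step on dense weights with a masked forward pass.

    w_dense: (N, D) dense weights, one row per filter; mask: (N,) with 0/1 entries.
    """
    w_masked = w_dense * mask[:, None]         # forward pass sees only salient filters
    y = w_masked @ x
    # Gradient of L = 0.5 * ||y - target||^2 with respect to the masked weights.
    grad_wrt_masked = np.outer(y - target, x)
    # Assumption: this gradient is applied to every row, including masked ones.
    return w_dense - lr * grad_wrt_masked

w = np.ones((3, 2))
mask = np.array([1.0, 0.0, 1.0])               # the middle filter is masked out
x = np.array([1.0, 2.0])
target = np.array([1.0, -1.0, 0.5])

w_new = masked_sgd_step(w, mask, x, target)
```

In this toy run the masked middle row still moves (from `[1.0, 1.0]` to `[0.9, 0.8]`), which is what allows its saliency to be re-evaluated after the step.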

The Global Mask

simplified as

Then,

Since the filter W^{k∗}_l is a d^2 C_{l−1}-dimensional vector, we construct a function to measure the saliency score of a filter.
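Treating each filter as a flat vector makes it straightforward to map it to a scalar score. The sketch below flattens every filter to d·d·C_prev entries and scores it in one of two ways: by its L2 norm, or, when gradients are supplied, by the absolute value of the weight-gradient inner product. Both criteria are stand-ins chosen for illustration, since the text at this point does not state the scoring function; `filter_saliency` and its arguments are hypothetical names.

```python
import numpy as np

def filter_saliency(weights, grads=None):
    """Score each filter of a layer. weights, grads: (N, C_prev, d, d)."""
    n = weights.shape[0]
    flat_w = weights.reshape(n, -1)            # each row has d * d * C_prev entries
    if grads is None:
        # Stand-in criterion 1: magnitude of the filter vector.
        return np.linalg.norm(flat_w, axis=1)
    flat_g = grads.reshape(n, -1)
    # Stand-in criterion 2: |<gradient, weight>| per filter.
    return np.abs(np.sum(flat_w * flat_g, axis=1))

w = np.zeros((2, 3, 2, 2))                     # 2 filters, C_prev = 3, d = 2
w[0] = 1.0                                     # first filter is all ones, second all zeros
norm_scores = filter_saliency(w)
grad_scores = filter_saliency(w, grads=np.full_like(w, 0.5))
```

Scores produced this way are per-filter scalars, so scores from different layers can be concatenated and compared on a common scale if desired.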