VTC-LFC: Vision Transformer Compression with Low-Frequency Components

Abstract

Compression performed only in the spatial domain suffers a dramatic performance drop without fine-tuning and is not robust to noise.

This is because noise in the spatial domain can easily confuse the pruning criteria, causing some parameters/channels to be pruned incorrectly.

Inspired by recent findings that self-attention acts as a low-pass filter and that low-frequency signals/components are more informative to ViTs, this paper proposes compressing ViTs using low-frequency components.

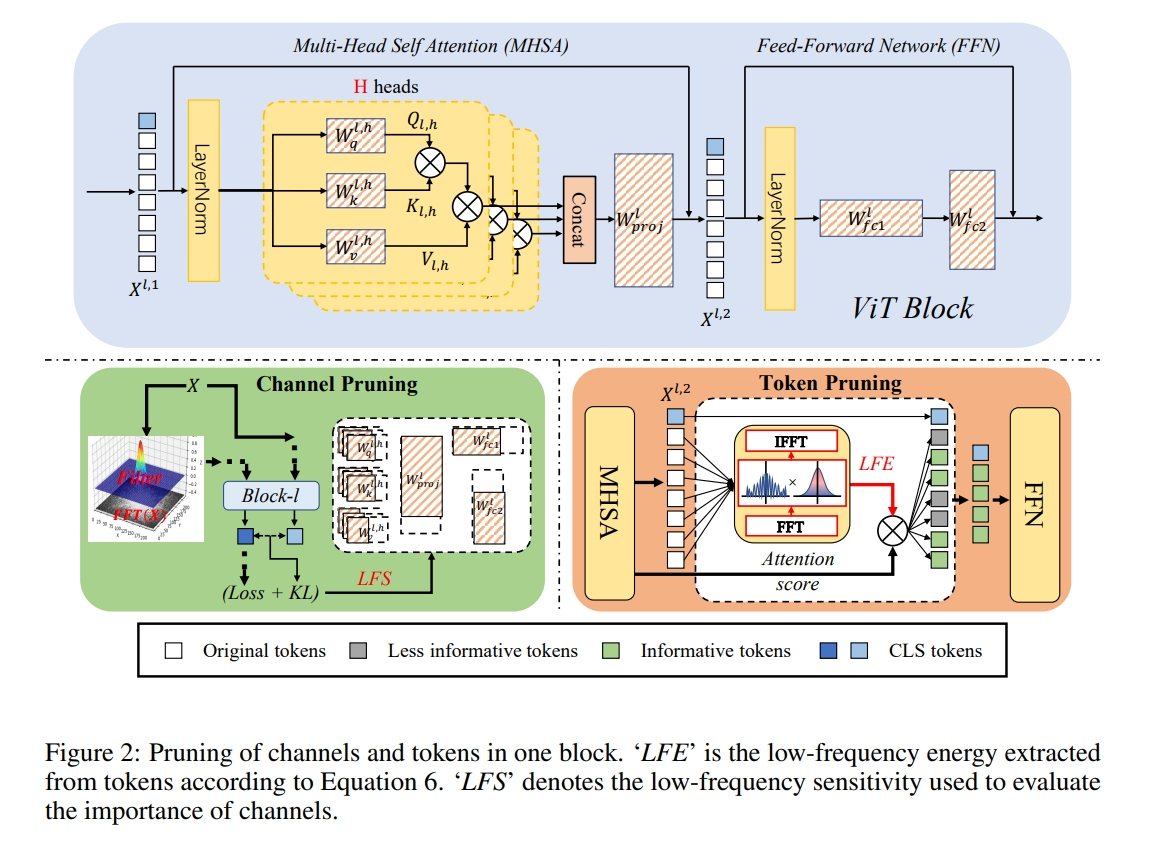

Two metrics, low-frequency sensitivity (LFS) and low-frequency energy (LFE), are proposed for channel pruning and token pruning, respectively.

Introduction

Token pruning based on low-frequency energy: token compression/sampling aims to select informative tokens, i.e., those that carry more useful information.

Popular methods dynamically select tokens that are highly correlated with other tokens (e.g., the CLS token) as the informative tokens. However, this may be sub-optimal: the selected tokens tend to be similar to each other, and the information contained in each token itself is largely neglected.

Methodology

Our goal is to reduce the number of channels in the linear projection matrices and the number of tokens N^l in each layer l.

Channel Pruning based on Low-Frequency Sensitivity

The importance score I_j of a weight w_j is formulated as:

where L is the loss function (cross-entropy loss in this paper).
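As a concrete illustration, one common definition of such a score, assumed here rather than taken from the paper, is the squared change in loss when the weight is removed: I_j = (L(X; W) − L(X; W | w_j = 0))^2. The toy below uses a linear softmax classifier in place of a ViT, with cross-entropy as L, and computes this score exactly by zeroing one weight at a time. The helpers `cross_entropy` and `exact_importance` are hypothetical.

```python
import numpy as np

def cross_entropy(W, X, y):
    """Mean cross-entropy of a linear softmax classifier with weights W (D x C)."""
    logits = X @ W
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(y)), y].mean()

def exact_importance(W, X, y):
    """Assumed score I_j = (L(W) - L(W | w_j = 0))^2, one loss evaluation per weight."""
    base = cross_entropy(W, X, y)
    scores = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        W_pruned = W.copy()
        W_pruned[idx] = 0.0  # remove a single weight
        scores[idx] = (base - cross_entropy(W_pruned, X, y)) ** 2
    return scores

rng = np.random.default_rng(0)
X = rng.normal(size=(32, 4))
y = rng.integers(0, 3, size=32)
W = rng.normal(size=(4, 3))
I = exact_importance(W, X, y)
print(I.shape)  # (4, 3): one score per weight
```

Each score needs its own extra loss evaluation, which is what makes the exact computation impractical for ViTs with millions of weights.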

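The low-frequency components X̃ of images X are formulated as

$$\tilde{X} = \mathcal{F}^{-1}\big(G(\mathcal{F}(X))\big),$$

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ denote the 2D Fourier transform and its inverse.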

G(·) is the low-pass filter. Because a binary (ideal) filter causes ringing artifacts when the image is transformed back to the spatial domain, a Gaussian filter is chosen for G(·).
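A minimal NumPy sketch of extracting the low-frequency component X̃ with a Gaussian G(·): move the image to the frequency domain with a 2D FFT, multiply by a Gaussian mask centred on the zero frequency, and transform back. The function name `gaussian_low_pass` and the choice to express `sigma` in cycles per pixel are assumptions for illustration.

```python
import numpy as np

def gaussian_low_pass(image, sigma):
    """Low-frequency component of an image of shape (H, W) or (C, H, W).

    sigma is the Gaussian cutoff in cycles per pixel (assumed convention).
    """
    h, w = image.shape[-2:]
    fy = np.fft.fftfreq(h)[:, None]  # unshifted frequency grid along height
    fx = np.fft.fftfreq(w)[None, :]  # unshifted frequency grid along width
    mask = np.exp(-(fx ** 2 + fy ** 2) / (2 * sigma ** 2))  # 1 at DC, smooth decay
    spectrum = np.fft.fft2(image, axes=(-2, -1))
    filtered = np.fft.ifft2(spectrum * mask, axes=(-2, -1))
    return filtered.real  # mask is symmetric, so the imaginary part is round-off
```

Because the Gaussian mask has no sharp edge, it avoids the ringing an ideal binary mask produces. A constant image passes through unchanged, while a pixel-level checkerboard (the highest frequency) is almost entirely removed.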

In addition to minimizing the task-specific loss, the pruned model should provide feature representations as robust as those of the original model. In other words, the feature representation of the low-frequency image X̃ should be as close as possible to that of the original image X.

Hence, apart from the cross-entropy loss L for the classification task, a knowledge-distillation loss is also taken into account; it measures the error between the CLS tokens of the low-frequency image and of the natural image.

Then, Low-Frequency Sensitivity (LFS) is formulated as:

where λ is a hyper-parameter that balances the two loss terms.
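Under two assumptions not confirmed by the text, that the objective is the cross-entropy on the low-frequency images plus λ times the distillation term, and that the distillation term is the mean squared error between CLS tokens, the combined loss whose sensitivity LFS measures can be sketched as follows. All function names are hypothetical.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy for logits of shape (B, C) and integer labels (B,)."""
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def cls_distillation_loss(cls_low, cls_orig):
    """Assumed KD term: MSE between CLS tokens of X-tilde and of X (shape (B, D))."""
    return np.mean((cls_low - cls_orig) ** 2)

def lfs_objective(logits_low, labels, cls_low, cls_orig, lam):
    """Task loss on low-frequency images plus lambda-weighted CLS distillation."""
    return cross_entropy(logits_low, labels) + lam * cls_distillation_loss(cls_low, cls_orig)
```

With identical CLS tokens the distillation term vanishes and only the cross-entropy on X̃ remains; larger λ puts more weight on keeping the low-frequency representation close to the original one.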

Calculating the LFS exactly for every parameter is infeasible for models with millions of parameters, so each weight's LFS is approximated instead; the approximation is denoted ŝ_j.

The LFS of a channel is computed as the sum of ŝ_j over all weights w_j in that channel.
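One standard way to obtain a cheap per-weight score, assumed here, is the first-order Taylor approximation ŝ_j = (g_j w_j)^2, where g_j is the gradient of the combined loss with respect to w_j; a single backward pass then yields scores for all weights at once. The sketch treats each row of an (out_channels, in_channels) weight matrix as one channel, which is an assumption about layout, and the helper names are hypothetical.

```python
import numpy as np

def taylor_weight_scores(weight, grad):
    """Assumed first-order Taylor estimate of each weight's score: (g_j * w_j) ** 2."""
    return (grad * weight) ** 2

def channel_lfs(weight, grad):
    """Sum per-weight scores over each output channel (row) of a linear layer.

    weight, grad: arrays of shape (out_channels, in_channels); grad is the gradient
    of the LFS objective with respect to weight.
    """
    return taylor_weight_scores(weight, grad).sum(axis=1)

def channels_to_prune(weight, grad, num_prune):
    """Indices of the num_prune channels with the lowest LFS."""
    return np.argsort(channel_lfs(weight, grad))[:num_prune]
```

For example, with rows scoring 5.0, 0.0 and 0.02, the two lowest-LFS channels are rows 1 and 2.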

Token Pruning based on Low-Frequency Energy

We evaluate the low-frequency ratio of each token after transforming the tokens X^{l,2} into the frequency domain by applying an FFT on each channel, denoted as

Given a filter G with cutoff factor σ_t, the LFE is formulated as:
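The LFE formula itself appears only as an image. The sketch below is one plausible reading under explicit assumptions: the N patch tokens (CLS excluded) are arranged on a √N × √N grid, a 2D FFT is applied over that grid for each channel, a Gaussian mask with cutoff `sigma_t` keeps the low frequencies, and the LFE of token i is the squared norm of its low-passed feature divided by the squared norm of its original feature. The function name is hypothetical.

```python
import numpy as np

def low_frequency_energy(tokens, sigma_t, eps=1e-12):
    """Assumed per-token low-frequency energy ratio for patch tokens of shape (N, D)."""
    n, d = tokens.shape
    side = int(round(np.sqrt(n)))
    if side * side != n:
        raise ValueError("expected a square number of patch tokens")
    grid = tokens.reshape(side, side, d)  # assumed row-major patch layout
    f = np.fft.fftfreq(side)
    mask = np.exp(-(f[:, None] ** 2 + f[None, :] ** 2) / (2 * sigma_t ** 2))
    spectrum = np.fft.fft2(grid, axes=(0, 1))  # FFT over the token grid, per channel
    low = np.fft.ifft2(spectrum * mask[:, :, None], axes=(0, 1)).real.reshape(n, d)
    return (low ** 2).sum(axis=1) / ((tokens ** 2).sum(axis=1) + eps)
```

Under this reading, a token map that varies smoothly across the grid scores close to 1, while a map that alternates from patch to patch scores close to 0.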

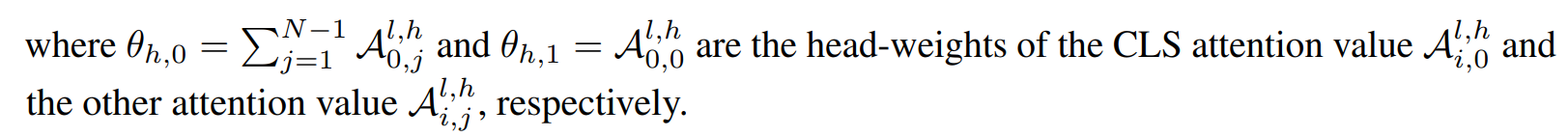

For the h-th head of a ViT layer, the attention values are calculated as:
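For reference, standard ViT self-attention computes, for head h, A^h = softmax(Q^h (K^h)^T / √d), where d is the head dimension. The sketch below does this in NumPy and then reads off one plausible form of the attention score T̂_{l,i}: the attention the CLS token (assumed to sit at index 0) pays to patch token i, averaged over heads. The head-averaged CLS row is an assumption, not taken from the text, and the helper names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_maps(q, k):
    """Scaled dot-product attention weights per head.

    q, k: arrays of shape (H, N, d) for H heads and N tokens (index 0 = CLS).
    Returns A of shape (H, N, N) with A[h] = softmax(q[h] @ k[h].T / sqrt(d)).
    """
    d = q.shape[-1]
    return softmax(q @ np.swapaxes(k, -1, -2) / np.sqrt(d), axis=-1)

def cls_attention_score(attn):
    """Assumed T-hat: attention from CLS to each patch token, averaged over heads."""
    return attn[:, 0, 1:].mean(axis=0)
```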

The CLS token plays a more significant role than other tokens because it collects information from the other tokens and serves as the final output feature.

To estimate the importance of tokens from multiple, diverse aspects, we combine the LFE η_{l,i} and the attention score T̂_{l,i} to obtain the final importance score of a token:
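Purely as a hypothetical stand-in for the paper's combination rule, the sketch below mixes the two scores as a convex combination of their min-max-normalised values (the weight `alpha` is invented for illustration) and keeps the top-k patch tokens in their original order. The CLS token is assumed to be kept separately and is not scored here.

```python
import numpy as np

def normalize(x, eps=1e-12):
    """Min-max normalise scores to [0, 1] so the two criteria are comparable."""
    return (x - x.min()) / (x.max() - x.min() + eps)

def token_importance(lfe, attn_score, alpha=0.5):
    """Hypothetical combination: convex mix of normalised LFE and attention score."""
    return alpha * normalize(lfe) + (1 - alpha) * normalize(attn_score)

def keep_tokens(tokens, lfe, attn_score, keep, alpha=0.5):
    """Keep the `keep` highest-scoring patch tokens, preserving their order."""
    scores = token_importance(lfe, attn_score, alpha)
    idx = np.sort(np.argsort(scores)[::-1][:keep])
    return tokens[idx], idx
```

Setting `alpha=1` ranks tokens purely by LFE and `alpha=0` purely by attention, which makes it easy to see how each criterion changes the kept set.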

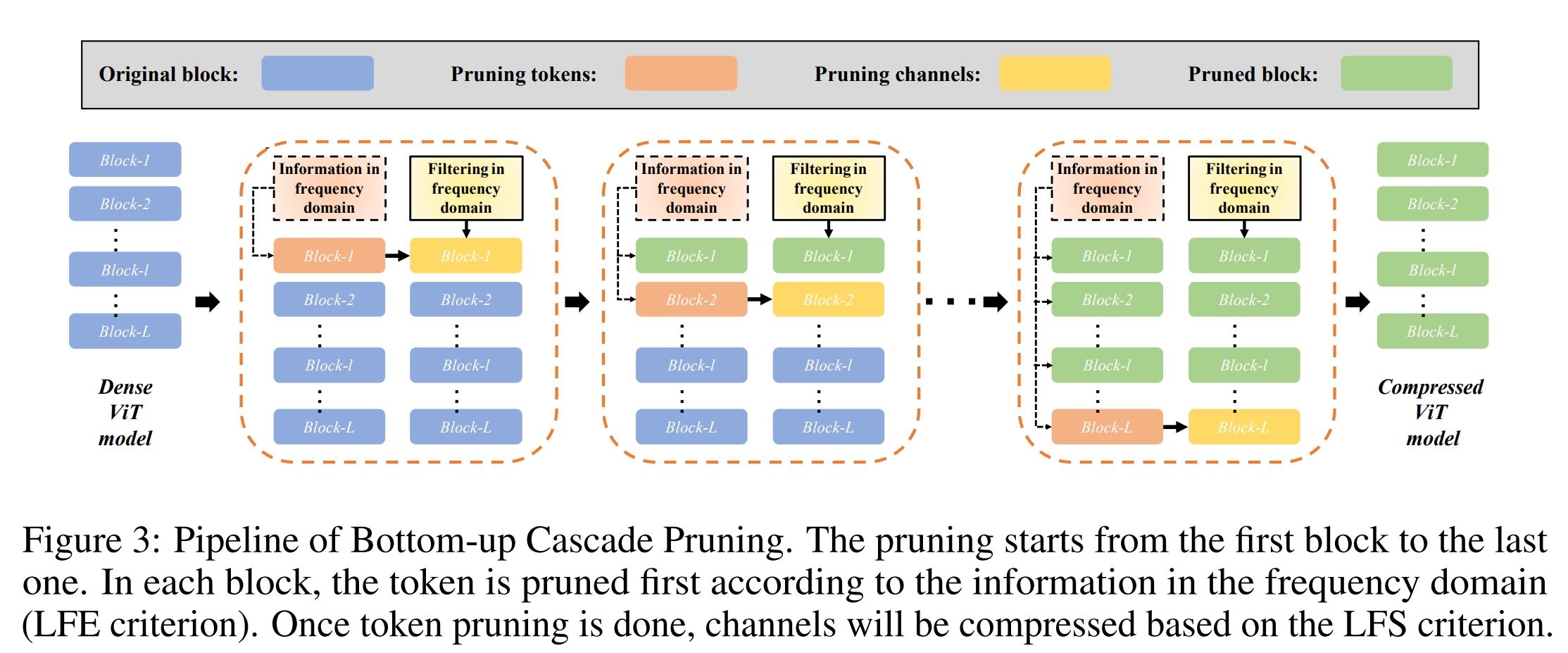

Bottom-up Cascade Pruning