CHEX: CHannel EXploration for CNN Model Compression

Abstract

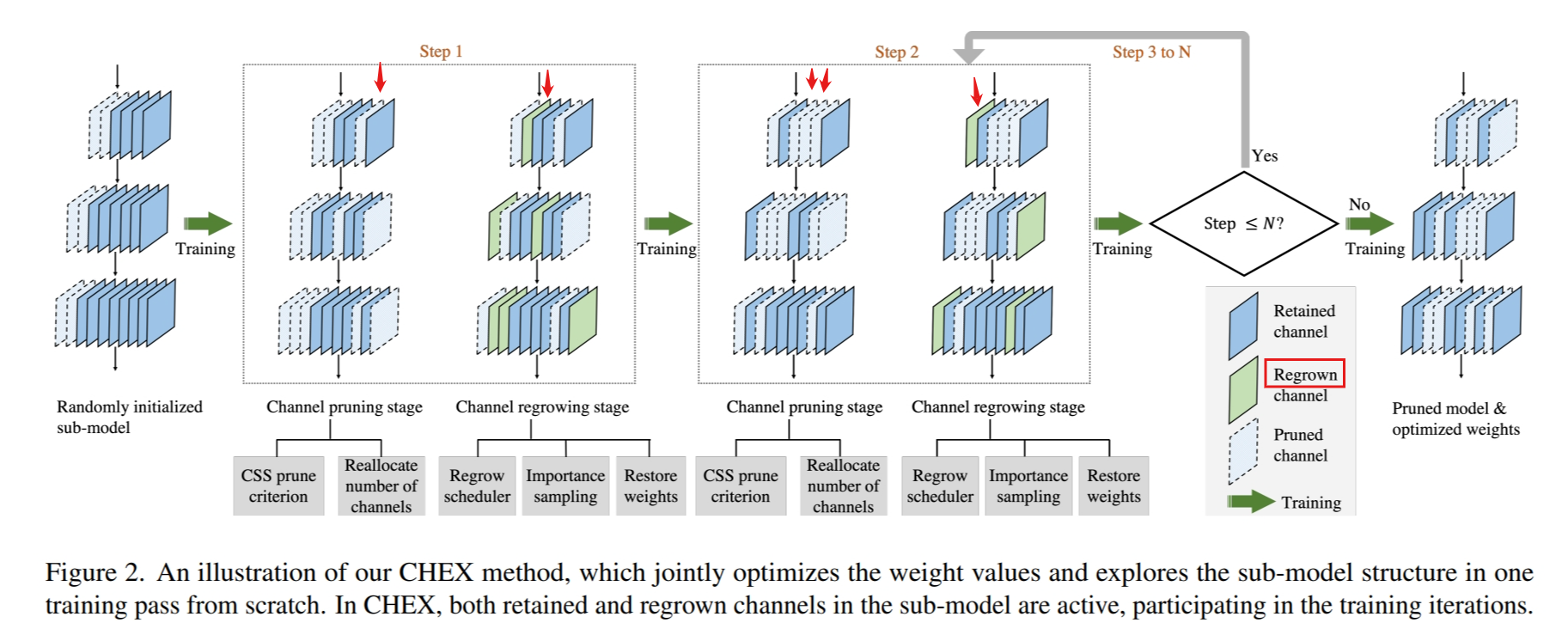

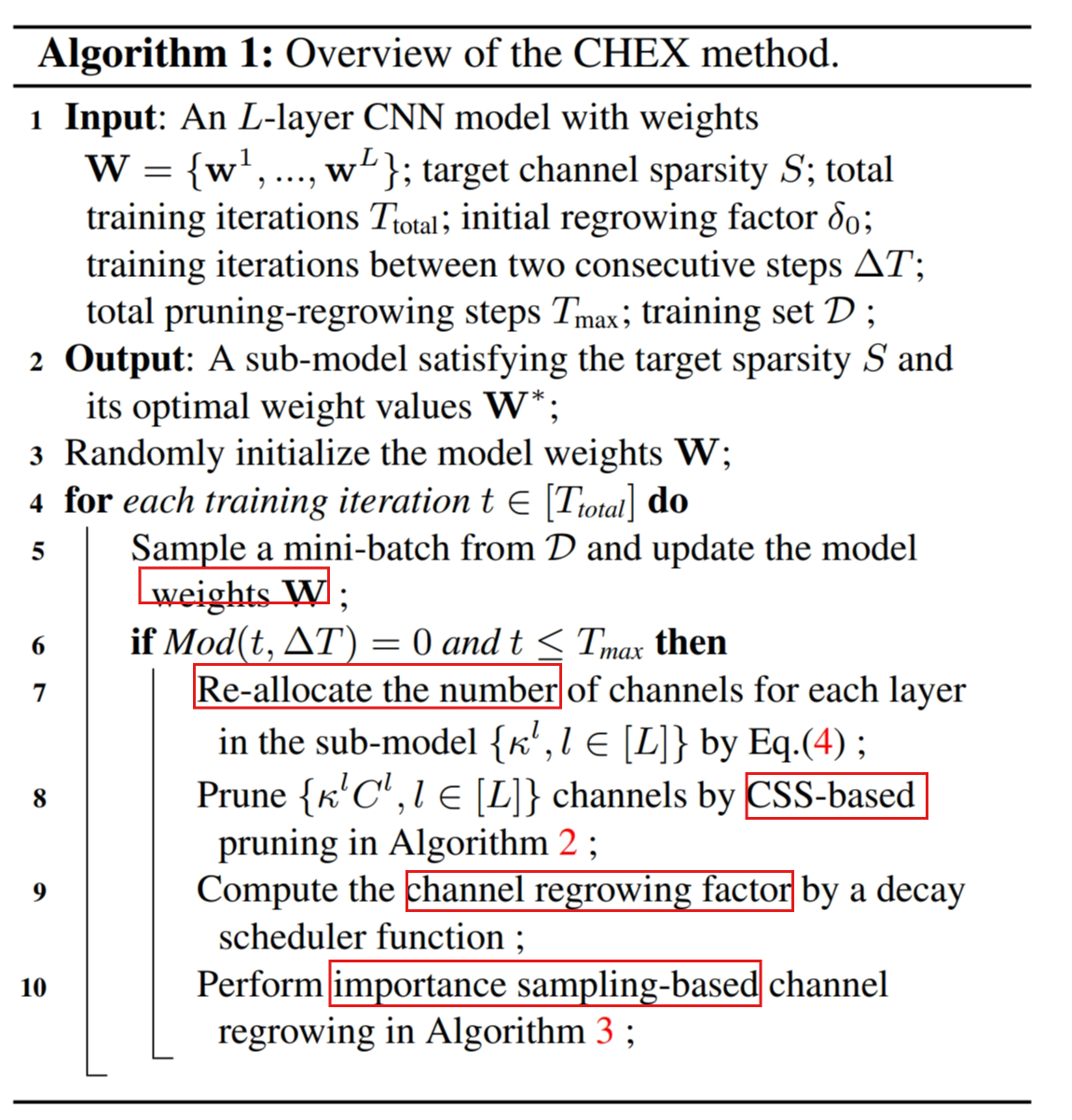

As opposed to a pruning-only strategy, we propose to repeatedly prune and regrow the channels throughout the training process, which reduces the risk of pruning important channels prematurely.

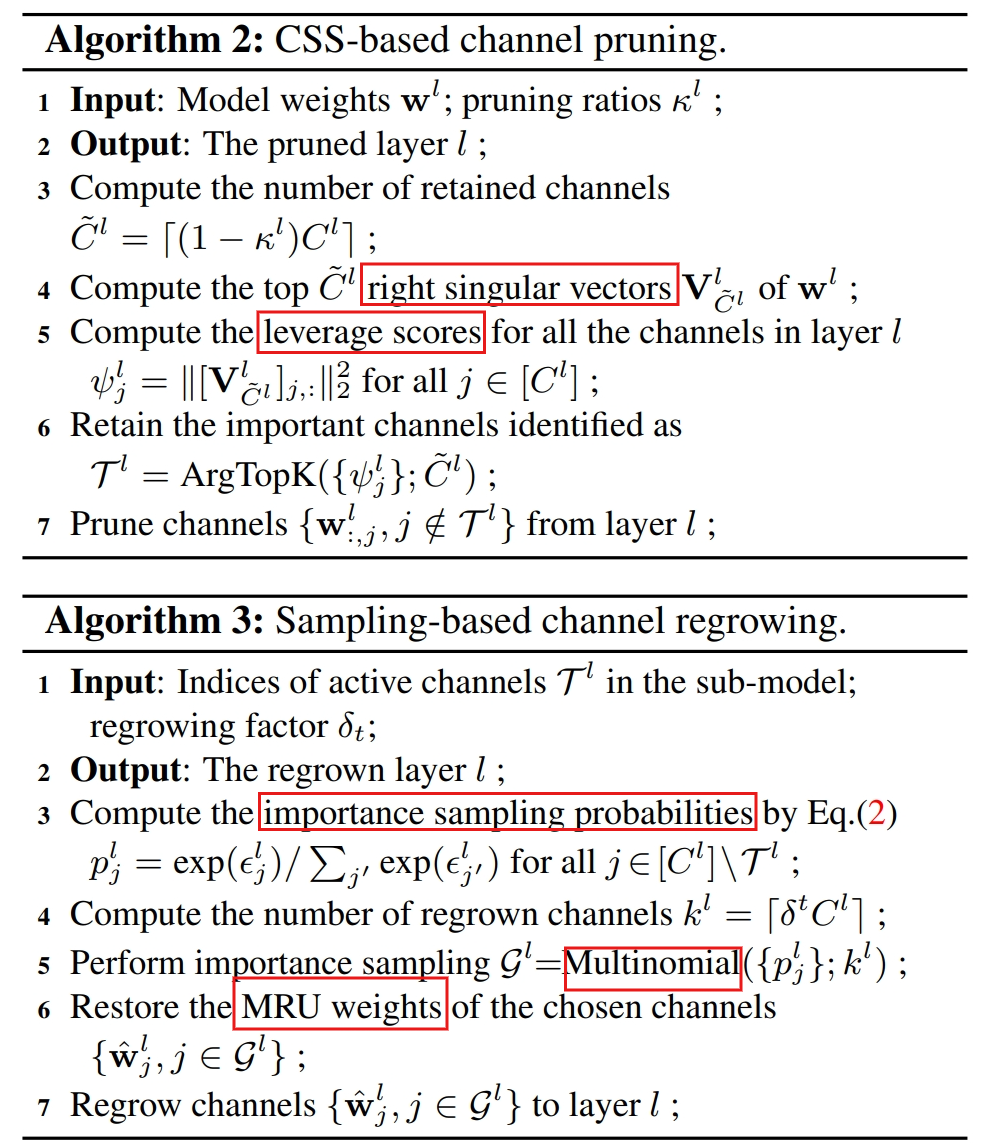

From the intra-layer aspect, we tackle the channel pruning problem via a well-known column subset selection (CSS) formulation.

From the inter-layer aspect, our regrowing stages open a path for dynamically re-allocating the number of channels across all the layers under a global channel sparsity constraint.

Introduction

Existing channel pruning methods

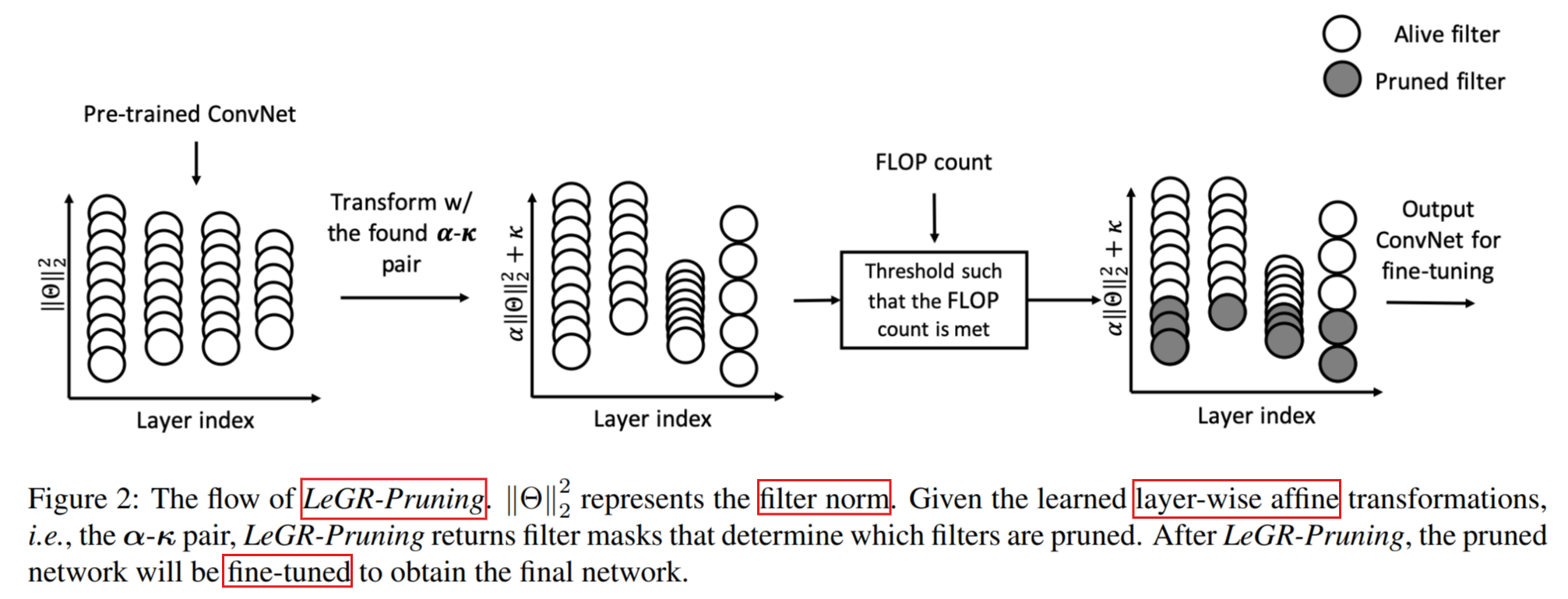

Towards Efficient Model Compression via Learned Global Ranking

determining a target model complexity can be difficult for optimizing various embodied AI applications such as autonomous robots, drones, and user-facing applications

This work takes a first step toward making this process more efficient by altering the goal of model compression to producing a set of ConvNets with various accuracy and latency trade-offs instead of producing one ConvNet targeting some pre-defined latency constraint

Channel pruning guided by classification loss and feature importance

Filter Pruning via Geometric Median for Deep Convolutional Neural Networks Acceleration

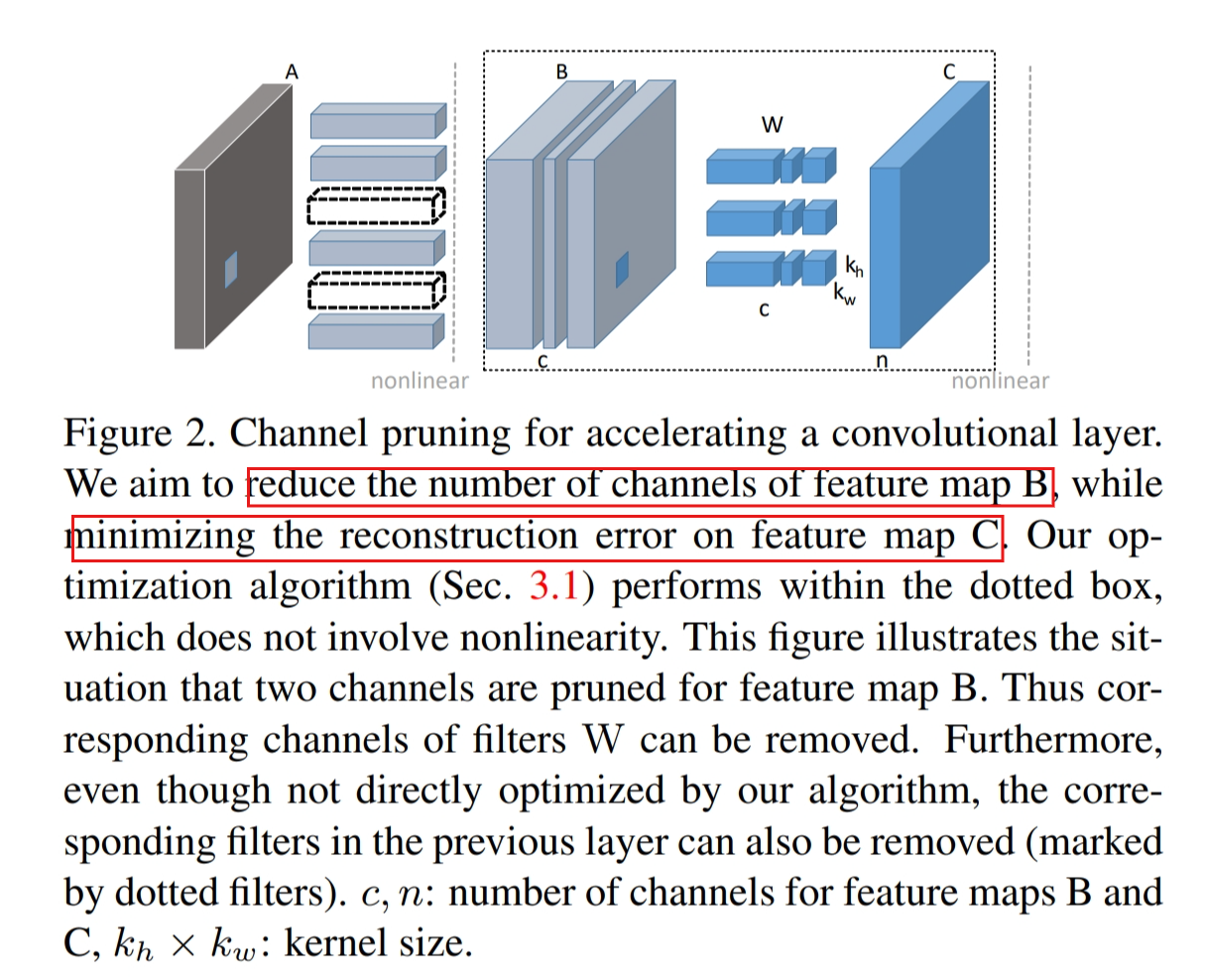

Channel pruning for accelerating very deep neural networks

Sampler Based

Minimized Reconstruction Error of sampled ones.

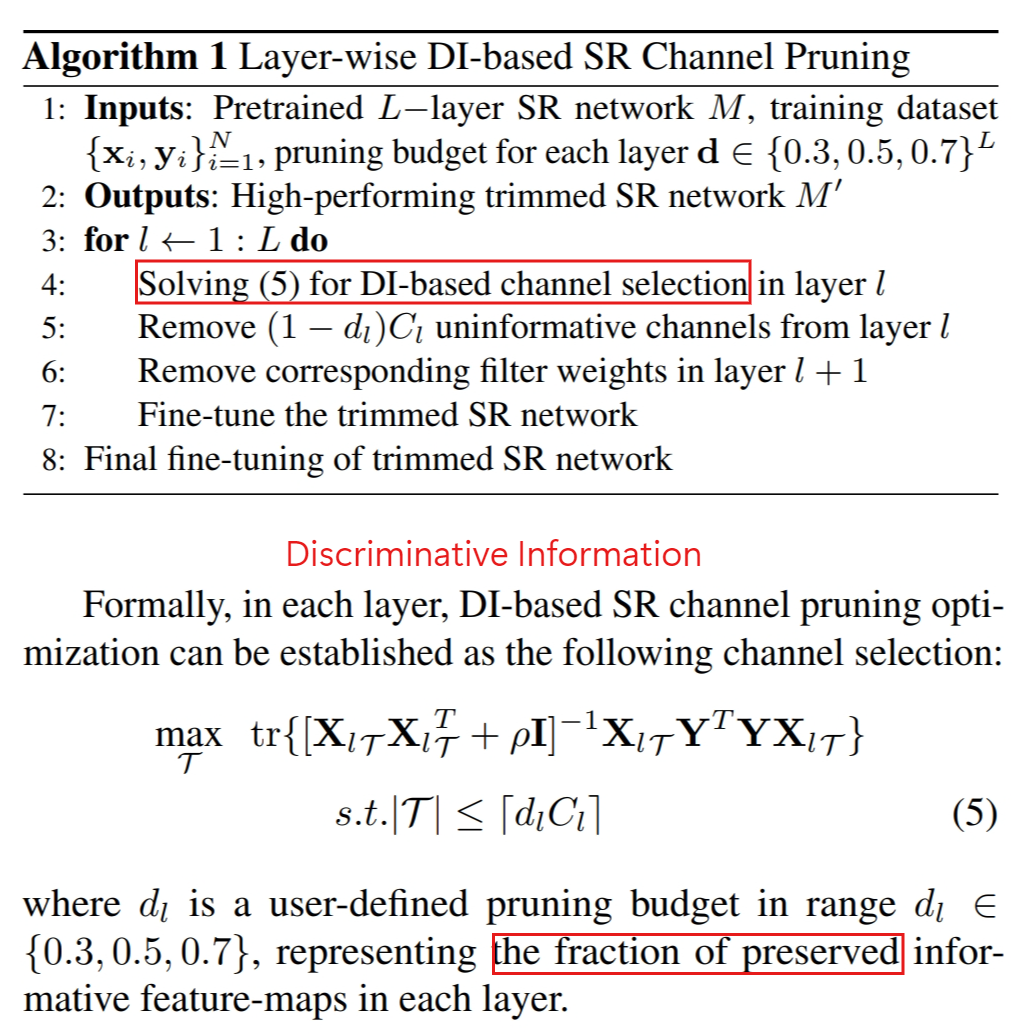

Efficient image super resolution via channel discriminative deep neural network pruning

Non-Structured DNN Weight Pruning—Is It Beneficial in Any Platform?

non-structured pruning is not competitive in terms of storage and computation efficiency

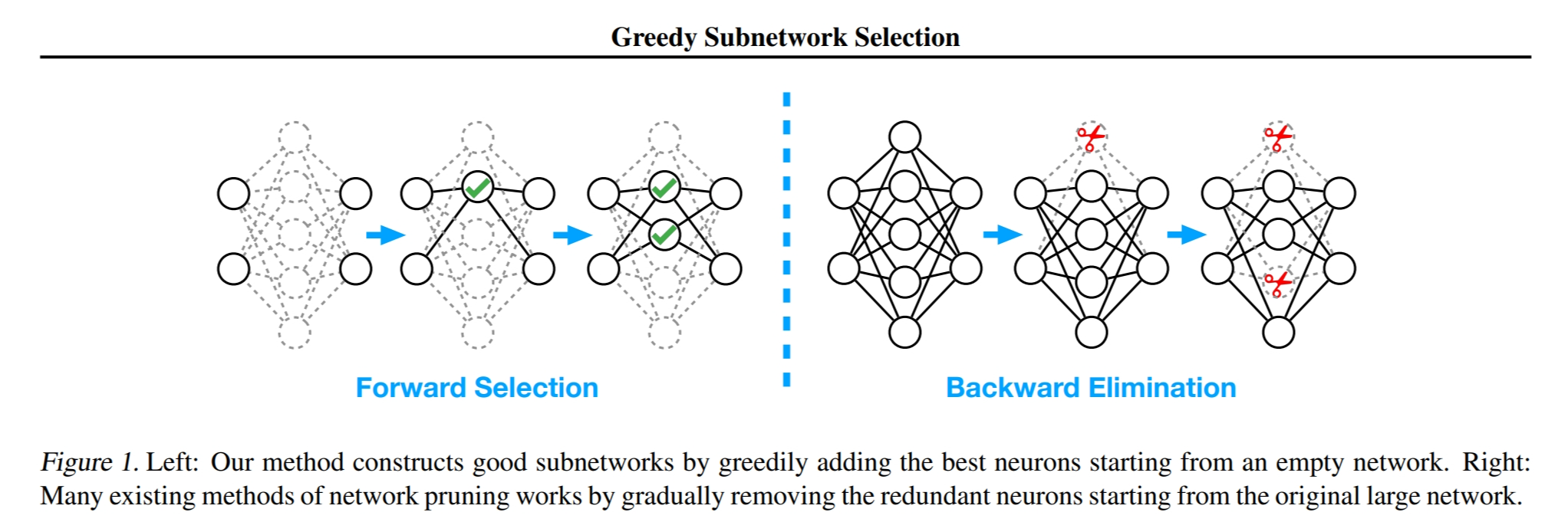

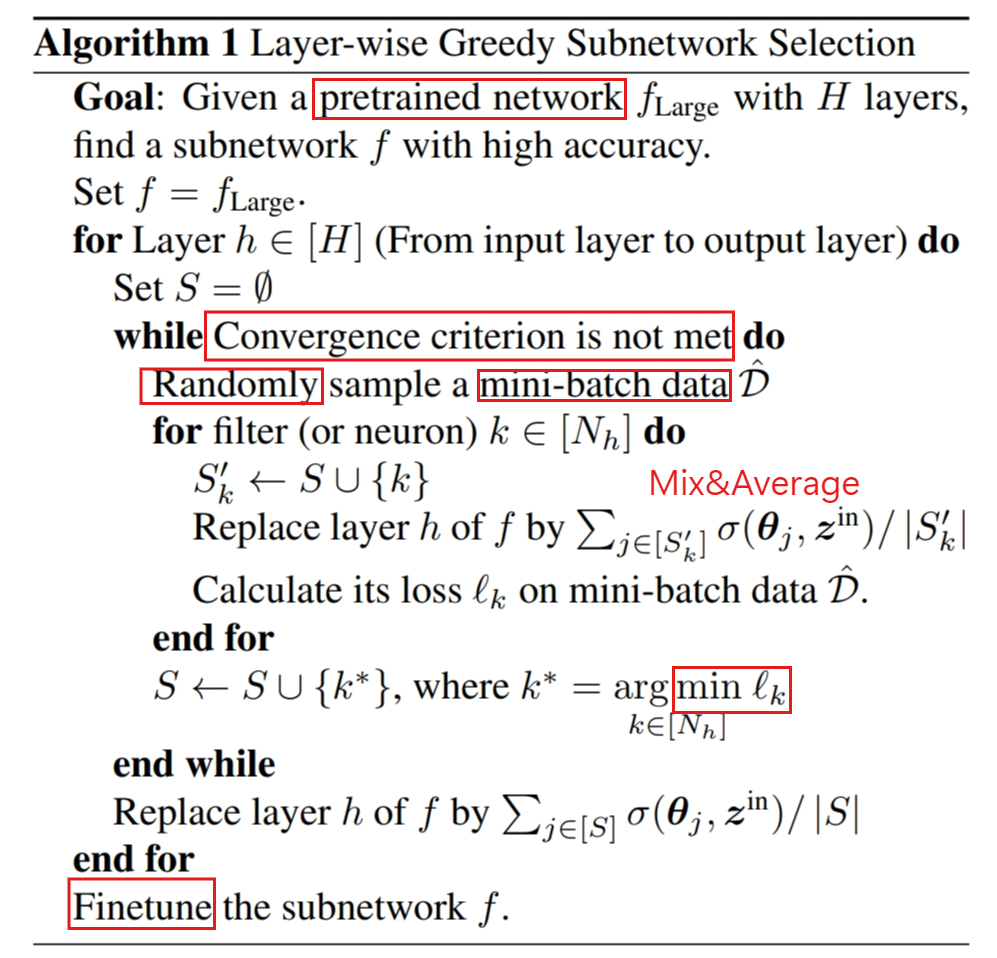

Good Subnetworks Provably Exist: Pruning via Greedy Forward Selection

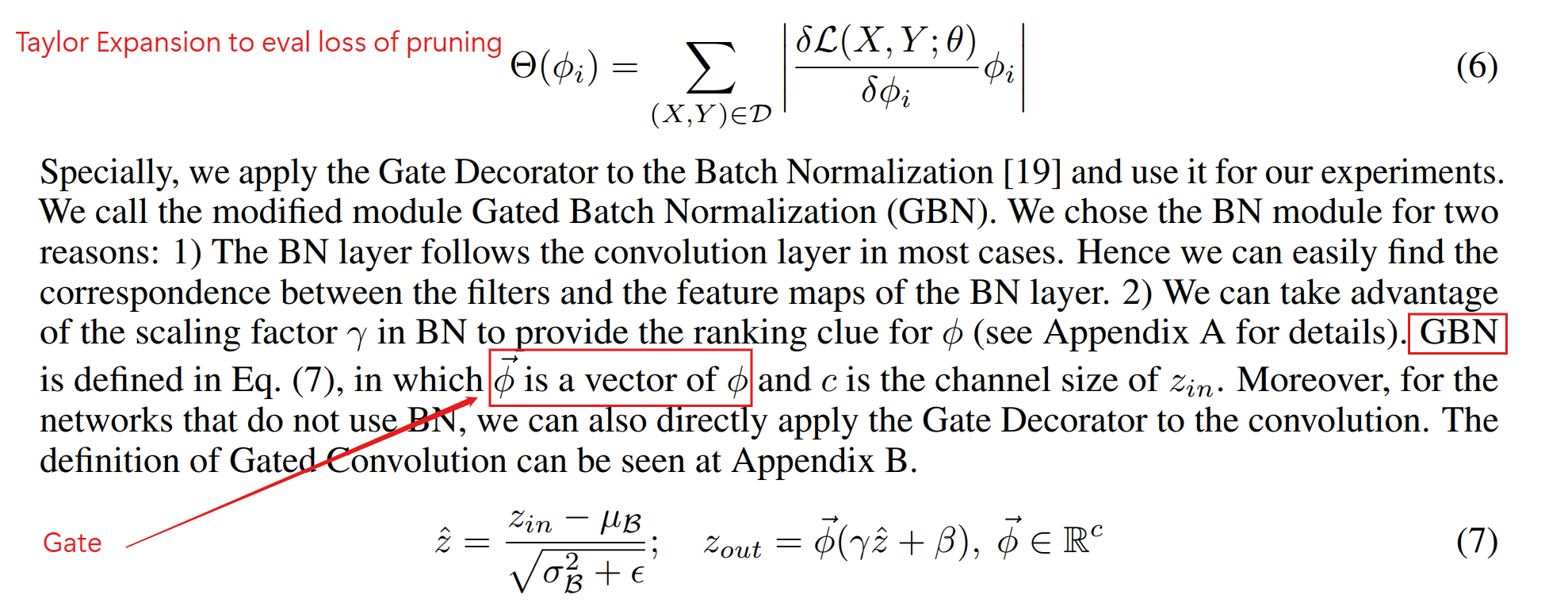

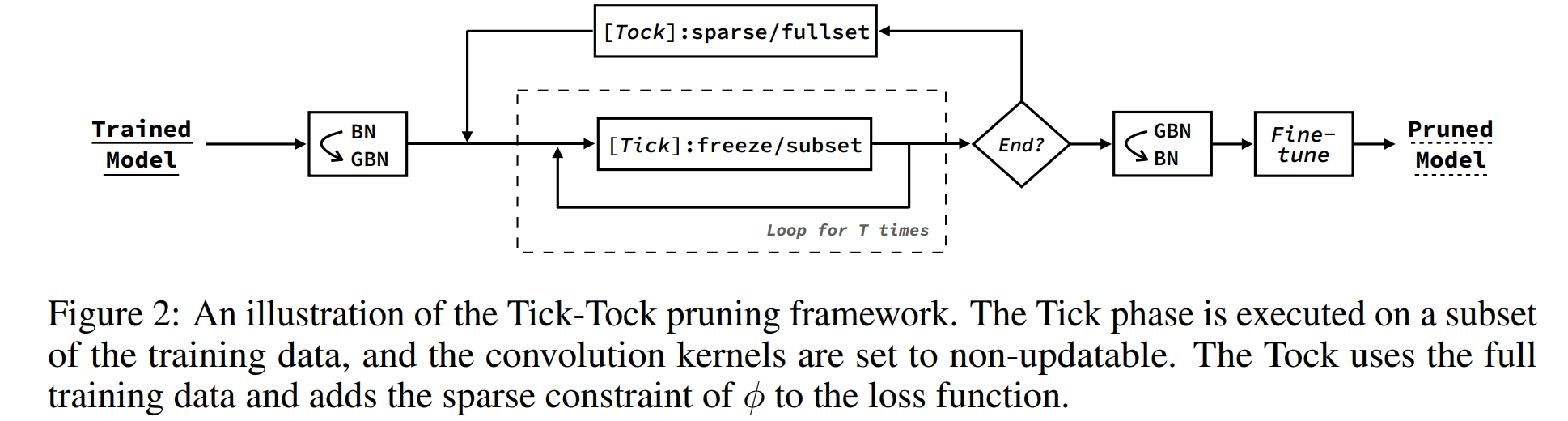

Gate decorator: Global filter pruning method for accelerating deep convolutional neural networks

StructADMM: Achieving Ultrahigh Efficiency in Structured Pruning for DNNs

The proposed framework incorporates stochastic gradient descent (SGD; or ADAM) with alternating direction method of multipliers (ADMM) and can be understood as a dynamic regularization method in which the regularization target is analytically updated in each iteration

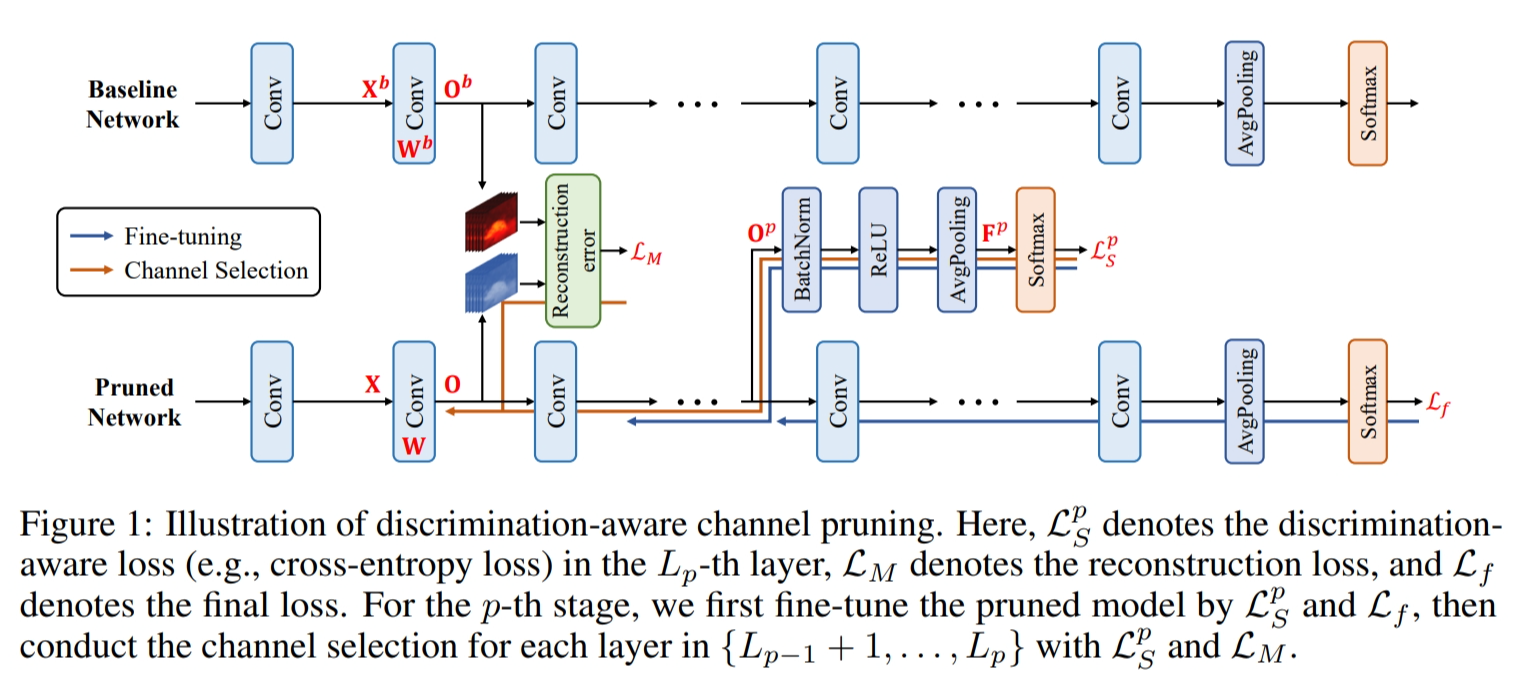

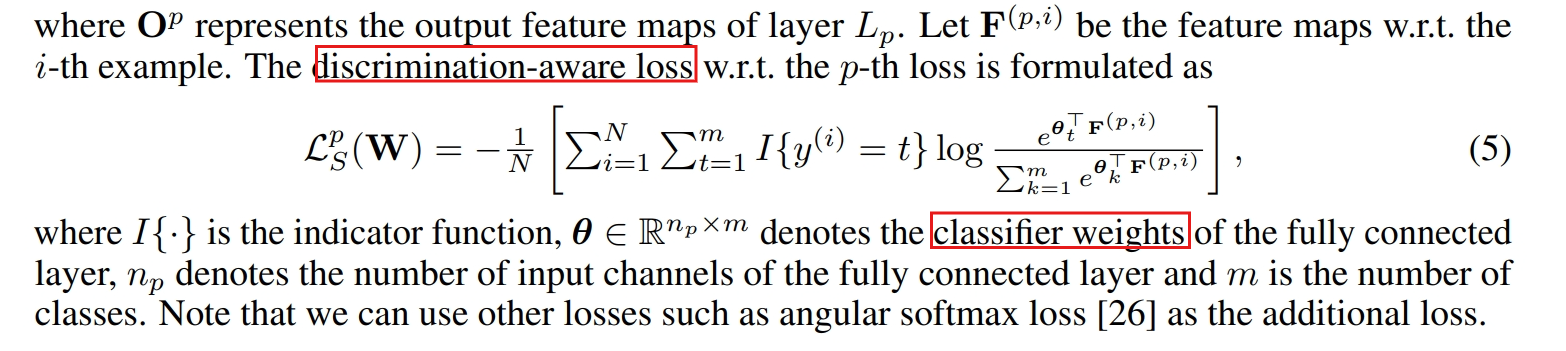

Discrimination-aware Channel Pruning for Deep Neural Networks

Training-based pruning

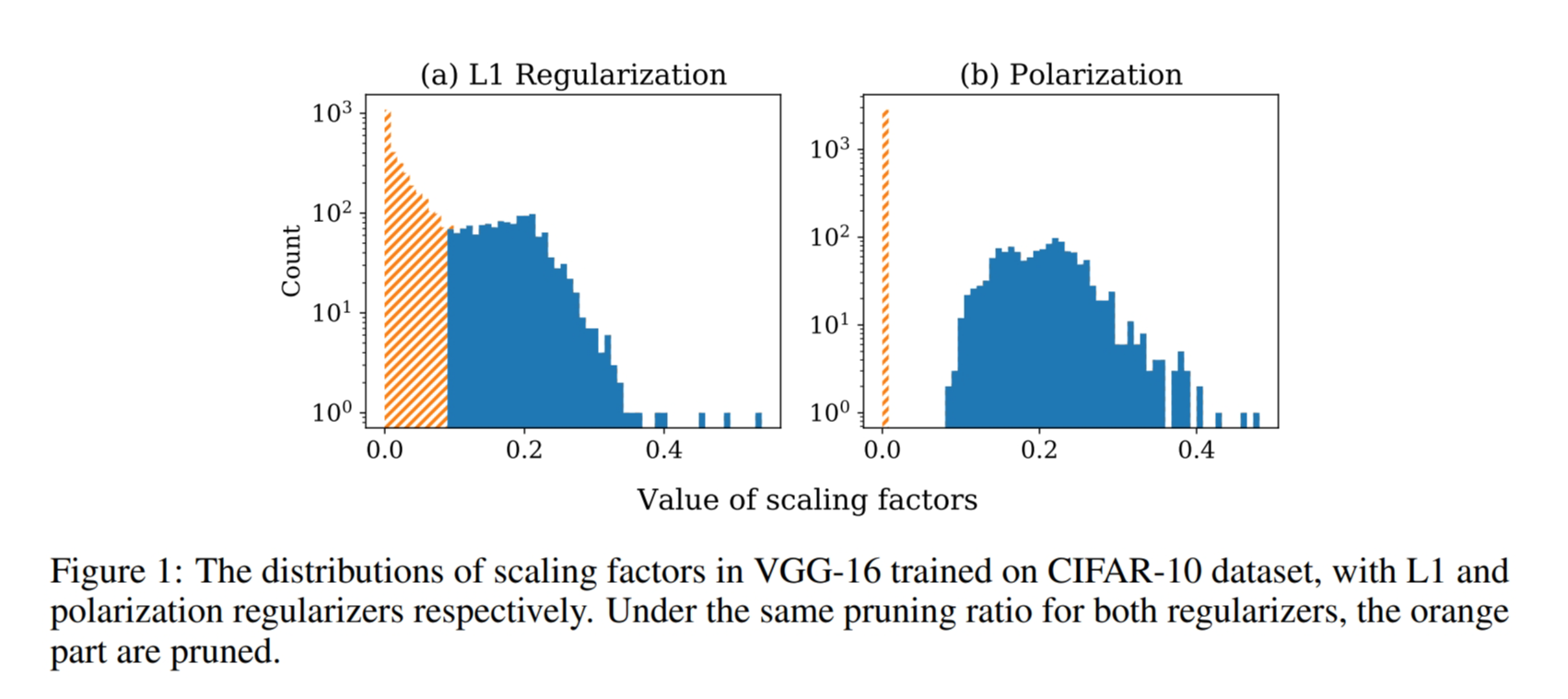

Neuron-level Structured Pruning using Polarization Regularizer

We propose a novel regularizer, namely polarization, for structured pruning of neural networks.

We theoretically analyzed the properties of polarization regularizer and proved that it simultaneously pushes a proportion of scaling factors to 0 and others to values larger than 0.

directly leverage the γ parameters in BN layers as the scaling factors

Towards Optimal Structured CNN Pruning via Generative Adversarial Learning

Compressing Convolutional Neural Networks via Factorized Convolutional Filters

In this work, we propose to conduct filter selection and filter learning simultaneously, in a unified model.

To this end, we define a factorized convolutional filter (FCF), consisting of a standard real-valued convolutional filter and a binary scalar, as well as a dot-product operator between them.

We train a CNN model with factorized convolutional filters (CNN-FCF) by updating the standard filter using back-propagation, while updating the binary scalar using the alternating direction method of multipliers (ADMM) based optimization method.

With this trained CNN-FCF model, we only keep the standard filters corresponding to the 1-valued scalars, while all other filters and all binary scalars are discarded, to obtain a compact CNN model.

Multi-Dimensional Pruning: A Unified Framework for Model Compression

Our MDP framework consists of three stages: the searching stage, the pruning stage, and the fine-tuning stage.

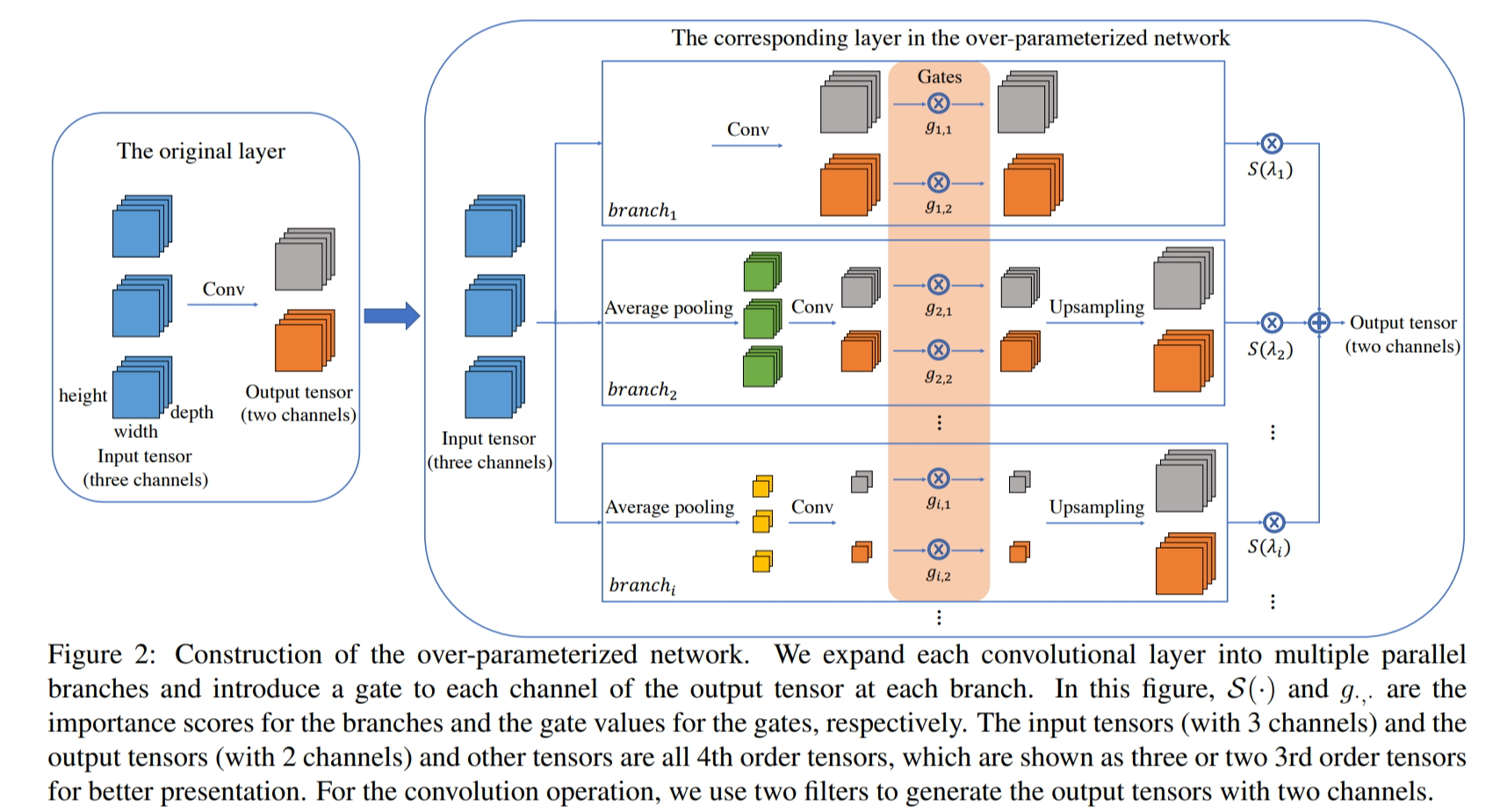

In the searching stage, we first construct an over-parameterized network from any given original network to be pruned and then train this over-parameterized network by using the objective function introduced in Sec. 3.2.2.

In the pruning stage, we prune the unimportant branches and channels in this over-parameterized network based on the importance scores learned in the searching stage.

In the fine-tuning stage, we fine-tune the pruned network to recover from the accuracy drop.

Specialized Optimizer

Centripetal SGD for Pruning Very Deep Convolutional Networks with Complicated Structure

However, instead of zeroing out filters, which ends up with a pattern where some whole filters are close to zero, we intend to merge multiple filters into one, leading to a redundancy pattern where some filters are identical.

Difference

In contrast to the traditional pruning approaches that permanently remove channels, we dynamically adjust the importance of the channels via a periodic pruning and regrowing process, which allows the prematurely pruned channels to be recovered and prevents the model from losing the representation ability early in the training process.

Methodology

Overview

Channel regrowing stage

Definition: Channel orthogonality measures the linear independence between a candidate channel and the active channels in a neural network layer.

Calculation: Orthogonality $\epsilon_j^l$ (for candidate channel $j$ in layer $l$) is computed using the orthogonal projection formula, quantifying the distance between the candidate channel and the subspace spanned by the active channels.

Importance-Sampling Probabilities: Probabilities $p_j^l$ are derived from orthogonality scores, guiding the selection of channels for regrowing. Higher orthogonality yields higher probabilities.

Importance Sampling: Channels are selected for regrowing using importance sampling, favoring those with greater orthogonality to ensure diversity.

Regrowing Channels: Selected channels are regrown and added to the layer, contributing to diversity and improving neural network performance.
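The regrowing steps above can be sketched in NumPy. This is a minimal illustration, not the paper's implementation: the function names (`orthogonality_scores`, `regrow`), the layout of `W` (one column per channel), and the choice to make the sampling probabilities directly proportional to the scores are all assumptions made here; a softmax over the scores would be another reasonable normalization.

```python
import numpy as np

def orthogonality_scores(W, active):
    """Squared distance of each inactive channel to the span of the active ones.

    W      : (d, C) array, one column per channel (assumed layout).
    active : boolean mask of length C marking the currently active channels.
    """
    candidates = np.flatnonzero(~active)
    A = W[:, active]                      # active channels
    B = W[:, candidates]                  # candidate (pruned) channels
    # Least-squares projection of each candidate onto span(A);
    # lstsq also copes with a rank-deficient A.
    X, *_ = np.linalg.lstsq(A, B, rcond=None)
    residual = B - A @ X                  # component orthogonal to span(A)
    return candidates, np.sum(residual ** 2, axis=0)

def regrow(W, active, n_regrow, rng):
    """Sample channels to regrow, favoring high orthogonality (hypothetical helper)."""
    candidates, eps = orthogonality_scores(W, active)
    if candidates.size == 0:
        return active.copy()
    total = eps.sum()
    # Assumption: probabilities proportional to the orthogonality scores.
    p = eps / total if total > 0 else np.full(eps.size, 1.0 / eps.size)
    # Sampling without replacement needs at least n nonzero probabilities.
    n = min(n_regrow, int(np.count_nonzero(p)))
    chosen = rng.choice(candidates, size=n, replace=False, p=p)
    new_active = active.copy()
    new_active[chosen] = True
    return new_active
```

A candidate that already lies in the span of the active channels gets a score near zero and is therefore very unlikely to be regrown, which is the diversity argument made above.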

In the regrowing stage, we employ a cosine decay scheduler to gradually reduce the number of regrown channels so that the sub-model converges to the target channel sparsity at the end of training. Specifically, the regrowing factor at the t-th step is computed as:

$$\delta_t = \frac{1}{2}\left(1 + \cos\left(\frac{t \cdot \pi}{T_{\max}/\Delta T}\right)\right)\delta_0$$

where $\delta_0$ is the initial regrowing factor, $T_{\max}$ denotes the total exploration steps, and $\Delta T$ represents the frequency at which the pruning-regrowing steps are invoked.
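As a quick sanity check on the schedule, the sketch below evaluates a cosine-decayed regrowing factor. It assumes the standard cosine-decay form, with `t` counting pruning-regrowing invocations from 0 to $T_{\max}/\Delta T$; the function name and argument names are illustrative, not taken from the paper's code.

```python
import math

def regrow_factor(t, delta0, t_max, delta_t):
    """Cosine-decayed regrowing factor at the t-th pruning-regrowing step.

    delta0  : initial regrowing factor
    t_max   : total number of exploration (training) steps
    delta_t : number of training steps between pruning-regrowing invocations
    """
    n_invocations = t_max / delta_t       # how many times prune/regrow runs
    return 0.5 * (1.0 + math.cos(math.pi * t / n_invocations)) * delta0

# Example: with delta0 = 0.3, t_max = 100, delta_t = 10 the factor starts at 0.3,
# is halved at the midpoint (t = 5), and reaches ~0 at the last step (t = 10).
schedule = [regrow_factor(t, 0.3, 100, 10) for t in range(11)]
```

Because the factor decays to zero, no channels are regrown at the final step, so the sub-model ends at the target sparsity.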

Sub-model structure exploration

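Denote the BN scaling factors of all channels across all layers by $\Gamma = \{\gamma_1^1, \ldots, \gamma_{C^1}^1, \ldots, \gamma_1^L, \ldots, \gamma_{C^L}^L\}$ and the overall target channel sparsity by $S$.

We calculate the layer-wise pruning ratios by ranking all scaling factors in descending order and preserving the top $1 - S$ fraction of the channels.

Then, the sparsity $\kappa^l$ for layer $l$ is given as:

$$\kappa^l = \frac{1}{C^l}\sum_{j=1}^{C^l} \mathbb{1}\left\{\gamma_j^l \le q(\Gamma, S)\right\}$$

where $C^l$ is the number of channels in layer $l$, $\mathbb{1}\{\cdot\}$ is the indicator function, and $q(\Gamma, S)$ represents the $S$-th percentile of all the scaling factors $\Gamma$.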
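The global-ranking rule can be illustrated with a short NumPy sketch. It assumes `S` is given as a fraction in [0, 1], that the per-layer sparsity is the share of a layer's scaling factors at or below the global threshold, and that magnitudes of the scaling factors are compared (an assumption made here, since BN scaling factors can be negative). The function name `layer_sparsities` is hypothetical.

```python
import numpy as np

def layer_sparsities(gammas, S):
    """Per-layer channel sparsity from a global ranking of BN scaling factors.

    gammas : list of 1-D arrays, one array of scaling factors per layer.
    S      : overall target channel sparsity, as a fraction in [0, 1].
    """
    # Assumption: rank by magnitude of the scaling factors.
    mags = [np.abs(np.asarray(g, dtype=float)) for g in gammas]
    q = np.quantile(np.concatenate(mags), S)   # global threshold q(Gamma, S)
    # Fraction of each layer's channels falling at or below the threshold.
    return [float(np.mean(m <= q)) for m in mags]

# Two layers with four channels each and a 50% global sparsity target:
# the layer with mostly small factors is pruned more heavily.
example = layer_sparsities(
    [np.array([0.1, 0.2, 0.3, 1.0]), np.array([0.6, 0.7, 0.8, 0.9])], 0.5
)
```

The point of the global threshold is that layers are not pruned uniformly: a layer whose scaling factors are mostly small gives up more channels than one whose factors are mostly large, while the overall sparsity still matches $S$.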

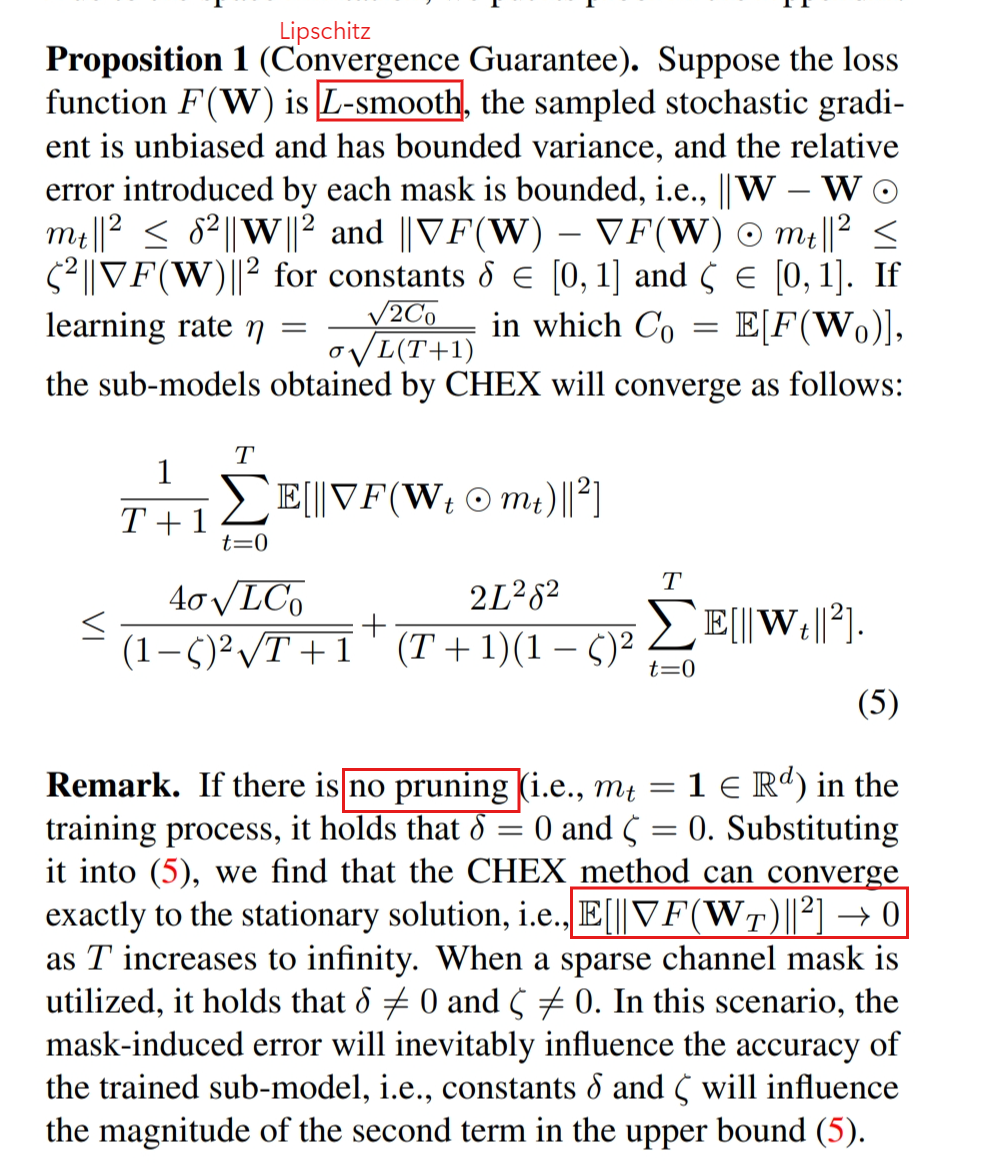

Theoretical Justification