ResRep: Lossless CNN Pruning via Decoupling Remembering and Forgetting

Abstract

We propose to re-parameterize a CNN into remembering parts and forgetting parts, where the former learn to maintain the performance and the latter learn to prune.

By training the former with regular SGD and the latter with a novel update rule based on penalty gradients, we realize structured sparsity.

Then we equivalently merge the remembering and forgetting parts into the original architecture with narrower layers.
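The merge step can be illustrated with a minimal sketch. It assumes the target conv has already absorbed its BN and that each output channel's kernel is flattened into one row, so at a single spatial position the conv computes `K @ patch + b`. Because the compactor Q is a 1×1 conv (a per-position matrix product), it folds into the conv as `Q K` and `Q b`. A row of Q that training has driven to zero yields an output channel that is always zero, so that row can be dropped to obtain the narrower layer. The helper names are hypothetical and not taken from the ResRep code.

```python
import math

def matvec(matrix, vector):
    """Dense matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def merge_compactor(kernel, bias, q, tol=1e-8):
    """Fold compactor Q (D x D) into a conv whose D output channels are rows of `kernel`.

    Rows of Q with L2 norm below `tol` are dropped, which removes the matching
    output channel and yields a narrower conv.
    """
    kept = [row for row in q if math.sqrt(sum(v * v for v in row)) > tol]
    n_in = len(kernel[0])
    merged_kernel = [
        [sum(row[d] * kernel[d][c] for d in range(len(kernel))) for c in range(n_in)]
        for row in kept
    ]
    merged_bias = [sum(r * b for r, b in zip(row, bias)) for row in kept]
    return merged_kernel, merged_bias

# Toy example: 3 output channels, 2 flattened inputs; the last compactor row was zeroed.
kernel = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
bias = [0.1, -0.2, 0.3]
q = [[1.0, 0.5, 0.0], [0.0, 2.0, 1.0], [0.0, 0.0, 0.0]]
patch = [0.4, -0.7]

# conv + compactor (original, wide) versus the merged, narrower conv
original = matvec(q, [y + b for y, b in zip(matvec(kernel, patch), bias)])
merged_kernel, merged_bias = merge_compactor(kernel, bias, q)
narrow = [y + b for y, b in zip(matvec(merged_kernel, patch), merged_bias)]
```

The narrow layer reproduces the non-zero outputs exactly. The dropped channel only ever fed zeros to the next layer, so the next layer's matching input channels can be removed as well.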

In this sense, ResRep can be viewed as a successful application of Structural Re-parameterization.

Introduction

Sparsity

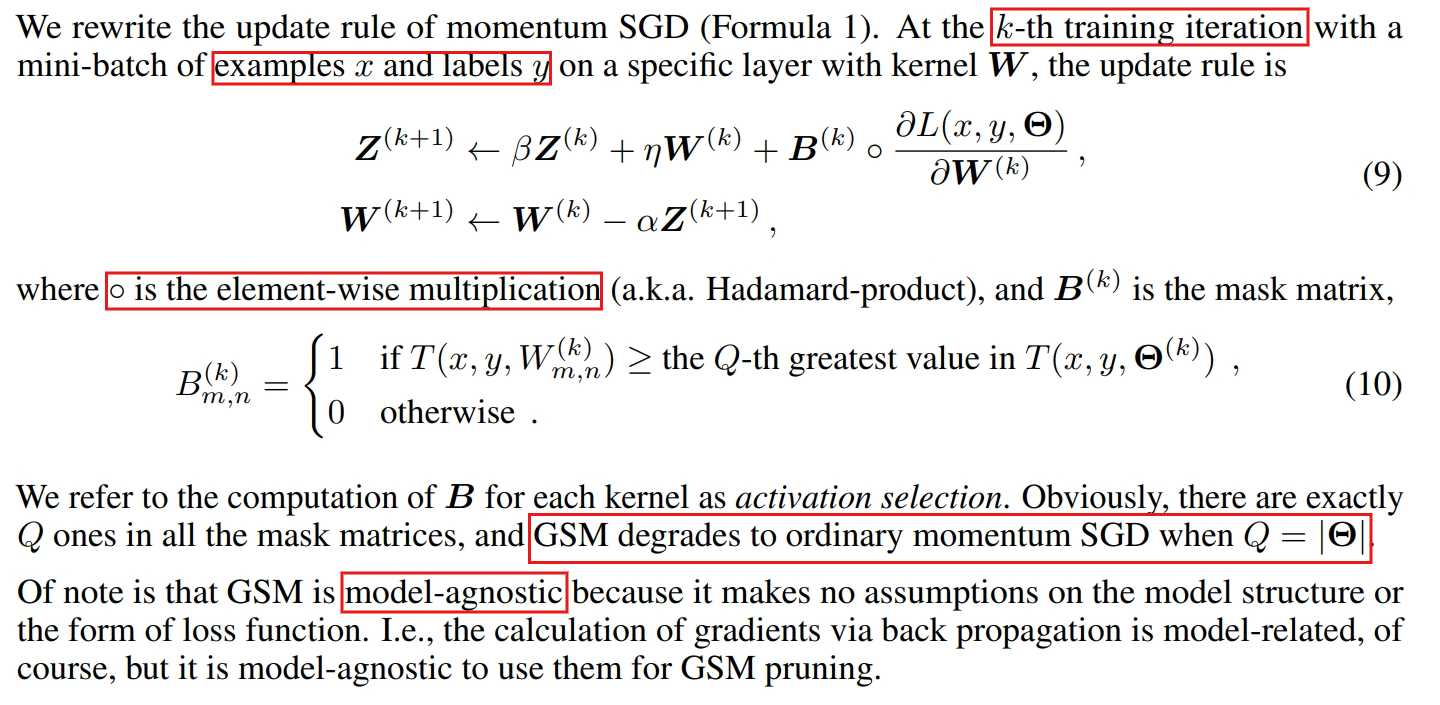

Global sparse momentum SGD for pruning very deep neural networks

https://arxiv.org/abs/1909.12778

To overcome the drawbacks of the two paradigms discussed above, we intend to explicitly control the eventual compression ratio via end-to-end training, by directly altering the gradient flow of momentum SGD to deviate the training direction, so as to achieve a high compression ratio while maintaining accuracy.

Approximation metrics

Rewritten SGD Rule
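The approximation metric and the rewritten rule can be sketched together as one momentum step on a flat parameter list. This assumes the rule as we read it from the GSM paper: importance is the first-order Taylor metric |∂L/∂w · w|, the `keep` most important parameters (chosen globally) receive the objective gradient plus weight decay through momentum, and all other parameters receive only weight decay, so they decay toward zero. The function name, parameter names and defaults are illustrative.

```python
def gsm_step(weights, grads, velocity, keep, lr=0.1, momentum=0.9, weight_decay=1e-4):
    """One GSM-style momentum step on flat lists (illustrative sketch).

    Only the `keep` parameters with the largest first-order Taylor importance
    |dL/dw * w| receive the objective gradient; every parameter receives weight decay.
    """
    importance = [abs(g * w) for g, w in zip(grads, weights)]
    ranked = sorted(range(len(weights)), key=lambda i: importance[i], reverse=True)
    active = set(ranked[:keep])  # plays the role of the binary mask in the rewritten rule
    new_weights, new_velocity = [], []
    for i, (w, g, v) in enumerate(zip(weights, grads, velocity)):
        masked_grad = g if i in active else 0.0
        v = momentum * v + weight_decay * w + masked_grad
        new_velocity.append(v)
        new_weights.append(w - lr * v)
    return new_weights, new_velocity, active

w, v, active = gsm_step([1.0, -2.0, 0.5], [0.5, 0.1, 0.0], [0.0, 0.0, 0.0],
                        keep=1, weight_decay=0.1)
```

Repeating this step shrinks the inactive parameters geometrically, so the final compression ratio is fixed by `keep` rather than tuned indirectly through a penalty strength.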

Channel Pruning

https://arxiv.org/abs/2002.10179

Our HRank is inspired by the discovery that the average rank of multiple feature maps generated by a single filter is always the same, regardless of the number of image batches CNNs receive.

Based on HRank, we develop a method that is mathematically formulated to prune filters with low-rank feature maps.

The principle behind our pruning is that low-rank feature maps contain less information, and thus pruned results can be easily reproduced.
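The rank criterion can be sketched as follows. It assumes feature maps are given as nested lists indexed `[image][filter]`, each an H×W matrix. Ranks are computed exactly with `fractions.Fraction` to sidestep floating-point tolerance issues, averaged over images, and the filters with the highest average rank are kept. The function names and the `keep` parameter are hypothetical, and a real pipeline would use framework tensors and a numerical rank routine.

```python
from fractions import Fraction

def matrix_rank(matrix):
    """Exact rank of a 2-D list via Gaussian elimination over rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    rank = 0
    for col in range(n_cols):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for r in range(len(rows)):
            if r != rank and rows[r][col] != 0:
                factor = rows[r][col] / rows[rank][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[rank])]
        rank += 1
    return rank

def hrank_keep(feature_maps, keep):
    """feature_maps[image][filter] is an H x W map; keep the highest average-rank filters."""
    n_images, n_filters = len(feature_maps), len(feature_maps[0])
    avg_rank = [sum(matrix_rank(img[f]) for img in feature_maps) / n_images
                for f in range(n_filters)]
    order = sorted(range(n_filters), key=lambda f: avg_rank[f], reverse=True)
    return sorted(order[:keep]), avg_rank

# Two images, three filters, 2x2 feature maps.
maps = [
    [[[1, 0], [0, 1]], [[1, 1], [1, 1]], [[0, 0], [0, 0]]],
    [[[2, 0], [1, 3]], [[2, 2], [3, 3]], [[0, 0], [0, 0]]],
]
kept, ranks = hrank_keep(maps, keep=2)
```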

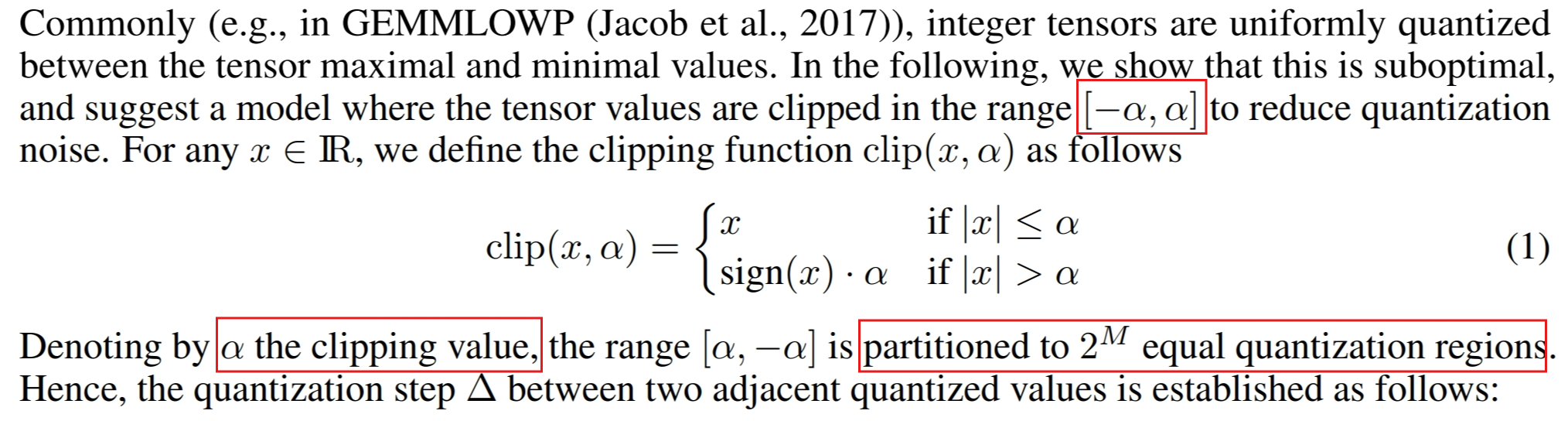

Quantization

Post training 4-bit quantization of convolutional networks for rapid-deployment

https://arxiv.org/abs/1810.05723

We use ACIQ for activation quantization and bias-correction for quantizing weights.

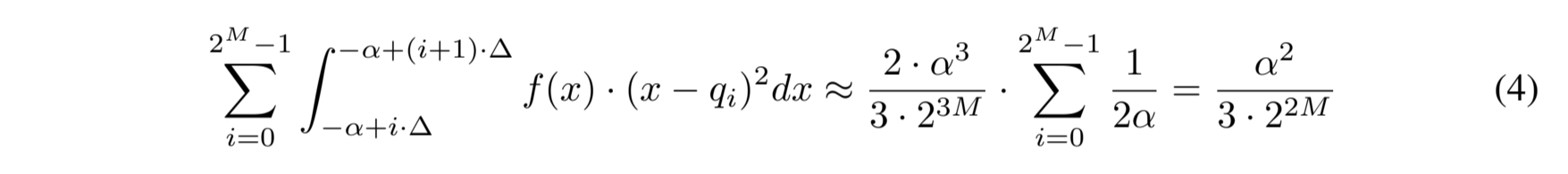

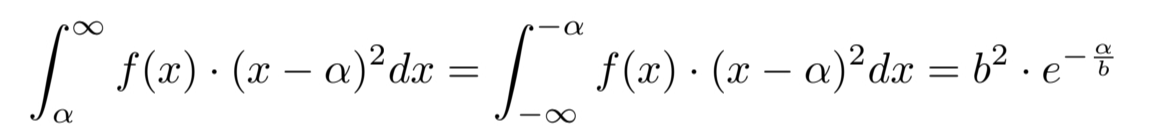

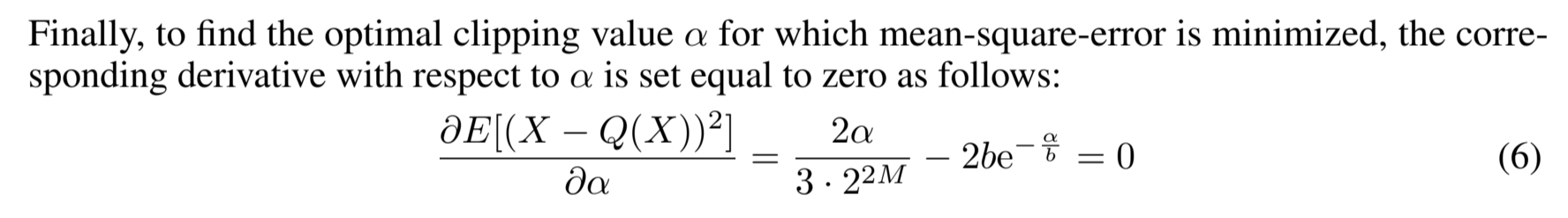

Analytical Clipping for Integer Quantization (ACIQ)

Assuming bit-width M, we would like to quantize the values in the tensor uniformly to 2^M discrete values.
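The clipping idea can be sketched for a Laplace(0, b) tensor. Assuming ACIQ's approximation of the expected error as clipping noise plus uniform rounding noise, MSE(α) ≈ 2b²·e^(−α/b) + α²/(3·4^M). Setting its derivative to zero gives t·e^t = 3·4^M with t = α/b, which the sketch solves by bisection before quantizing uniformly to 2^M levels inside [−α, α]. Function names are hypothetical; the Gaussian-prior case is not covered.

```python
import math

def aciq_alpha_laplace(b, bits, iters=200):
    """Clipping value minimizing the MSE estimate for a Laplace(0, b) tensor.

    MSE(alpha) ~= 2 * b**2 * exp(-alpha / b) + alpha**2 / (3 * 4**bits).
    dMSE/dalpha = 0  <=>  t * exp(t) = 3 * 4**bits  with  t = alpha / b.
    """
    target = 3 * 4 ** bits
    lo, hi = 0.0, 50.0
    for _ in range(iters):  # bisection on the monotone function t * exp(t)
        mid = (lo + hi) / 2
        if mid * math.exp(mid) < target:
            lo = mid
        else:
            hi = mid
    return b * (lo + hi) / 2

def quantize_clipped(values, alpha, bits):
    """Clip to [-alpha, alpha], then map to the midpoints of 2**bits equal bins."""
    n_levels = 2 ** bits
    step = 2 * alpha / n_levels
    out = []
    for x in values:
        x = max(-alpha, min(alpha, x))
        idx = min(int((x + alpha) / step), n_levels - 1)
        out.append(-alpha + (idx + 0.5) * step)
    return out

alpha = aciq_alpha_laplace(b=1.0, bits=4)
q = quantize_clipped([-9.0, -0.3, 0.0, 0.2, 7.5], alpha, bits=4)
```

For M = 2, 3, 4 this gives α of roughly 2.83b, 3.90b and 5.03b. Outliers such as −9.0 and 7.5 are clipped, trading a little clipping error for a finer step on the bulk of the distribution.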

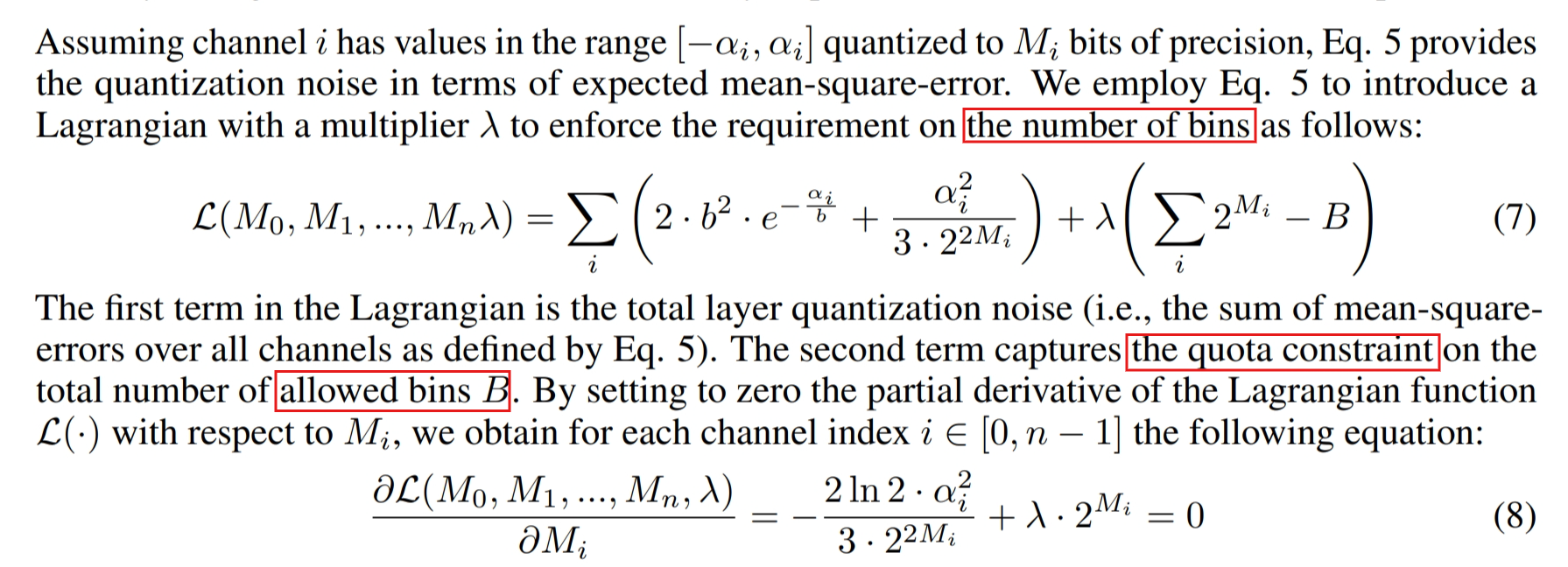

Per-channel bit allocation

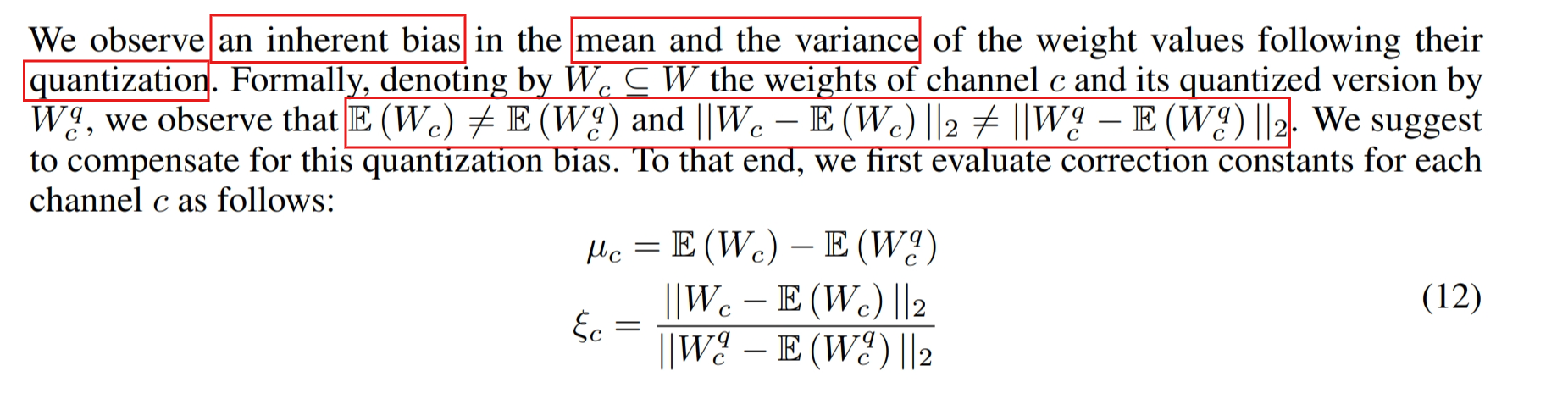

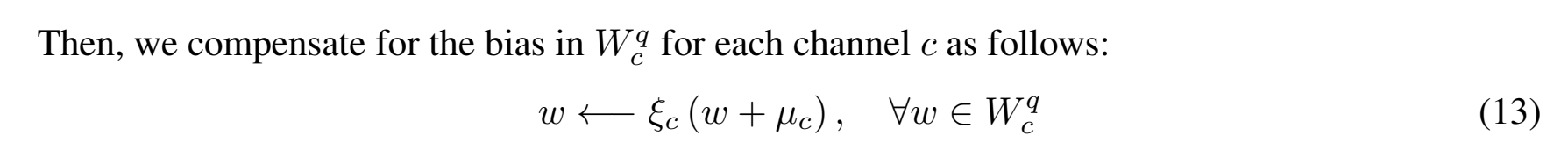

Bias-correction

Bi-real net

https://arxiv.org/abs/1808.00278

Bi-Real Net: Enhancing the Performance of 1-bit CNNs with Improved Representational Capability and Advanced Training Algorithm. Key idea: combine each 1-bit convolution with a shortcut that carries the original real-valued feature map, so that its information is kept.
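The shortcut idea can be sketched at a single spatial position, treating the 1-bit conv as a dense layer. Both the input and the weights are binarized with `sign`, and the real-valued input is then added back through an identity shortcut. The `scale` factor is a hypothetical stand-in for the BN that follows each 1-bit conv, and the paper's training techniques (e.g., its approximation to the gradient of `sign`) are not shown.

```python
def sign(x):
    """Binarize to +1/-1 (zero maps to +1)."""
    return 1.0 if x >= 0 else -1.0

def bireal_block(x, weights, scale=1.0):
    """1-bit linear layer with a real-valued identity shortcut (square `weights`)."""
    x_bin = [sign(v) for v in x]
    out = []
    for row in weights:
        w_bin = [sign(w) for w in row]
        # +/-1 dot product; on hardware this becomes XNOR + popcount
        out.append(scale * sum(a * b for a, b in zip(w_bin, x_bin)))
    # shortcut: add the real-valued input so its magnitude survives binarization
    return [o + xi for o, xi in zip(out, x)]

y = bireal_block([0.3, -1.2], [[0.5, -0.1], [-0.2, 0.4]])
```

Without the shortcut, the two inputs 0.3 and 1.7 would be indistinguishable after `sign`; the added real-valued term keeps them apart.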

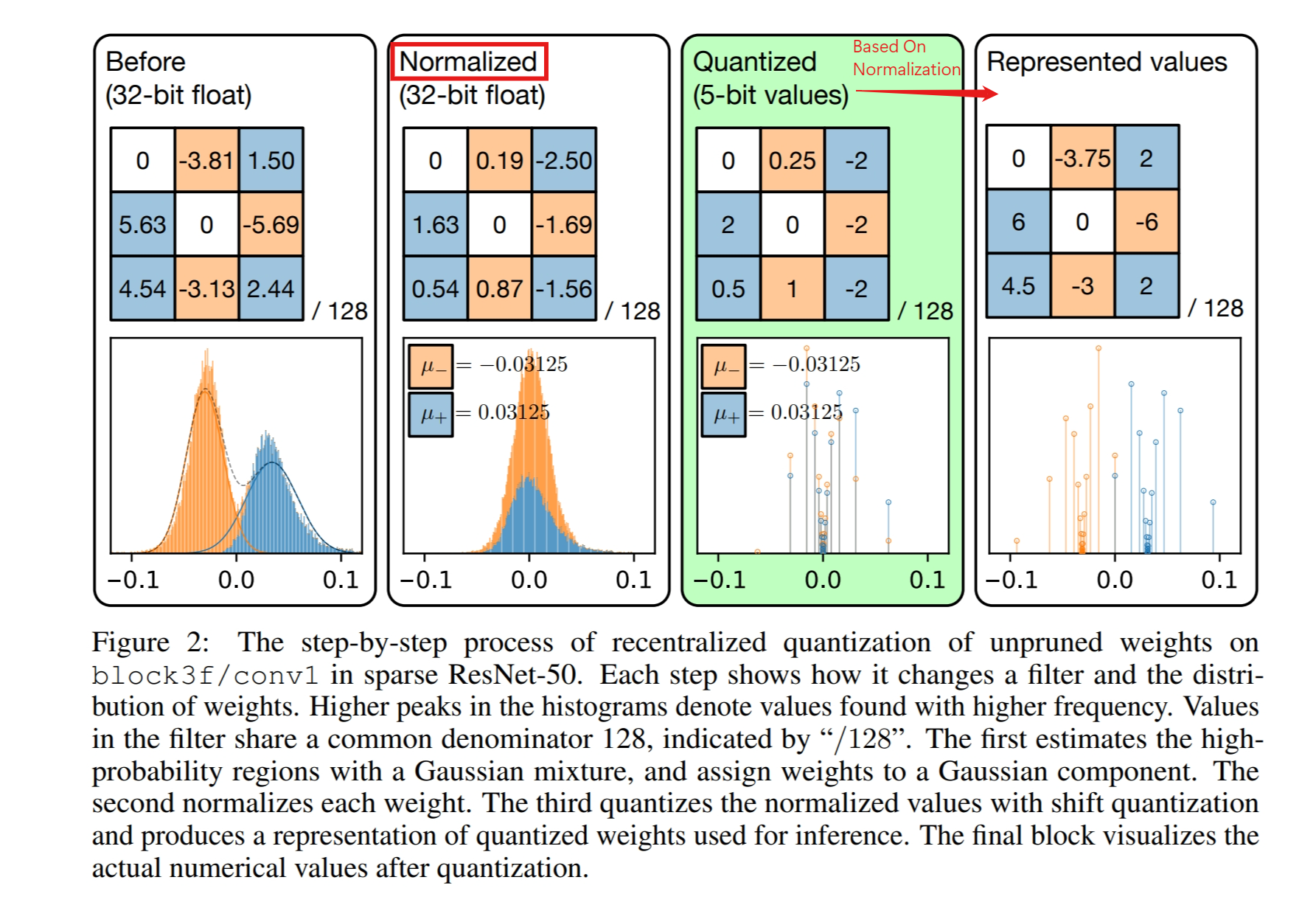

FOCUSED QUANTIZATION FOR SPARSE CNN

https://arxiv.org/abs/1903.03046

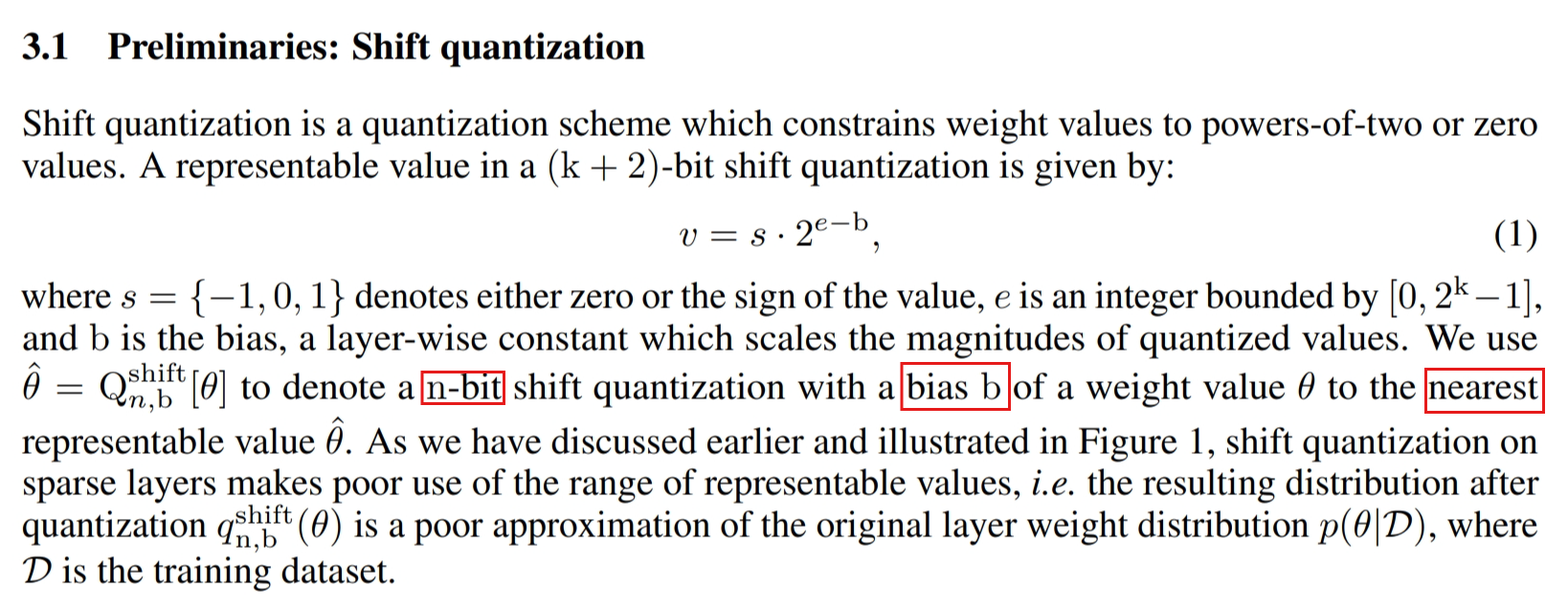

Shift quantization of weights, which quantizes weight values in a model to powers of two or zero, i.e. {0, ±1, ±2, ±4, . . .}, is of particular interest, as multiplications in convolutions become much simpler bit-shift operations.

Fine-grained pruning, however, is often in conflict with quantization, as pruning introduces various degrees of sparsity to different layers.

Linear quantization methods (integers) have uniform quantization levels, whereas non-linear quantization methods (logarithmic, floating-point and shift) have fine levels around zero, with levels growing further apart as values get larger in magnitude.

Both linear and non-linear quantization therefore provide precision where it is not actually required for a pruned CNN, since pruning has already removed the weights closest to zero.
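Shift quantization and its level spacing can be sketched as nearest-level rounding onto {0, ±2^e}. The exponent range (`max_exp`, `n_exps`) is a hypothetical configuration. The computed gaps show the non-uniform spacing described above: levels are densest around zero and grow apart with magnitude.

```python
def shift_levels(max_exp=0, n_exps=4):
    """Levels {0, +/-2**e} for e in [max_exp - n_exps + 1, max_exp], sorted."""
    mags = [2.0 ** e for e in range(max_exp - n_exps + 1, max_exp + 1)]
    return sorted([0.0] + mags + [-m for m in mags])

def shift_quantize(values, levels):
    """Round each value to the nearest level; multiplying by 2**e is then a bit shift."""
    return [min(levels, key=lambda lvl: abs(lvl - v)) for v in values]

levels = shift_levels()  # [-1, -0.5, -0.25, -0.125, 0, 0.125, 0.25, 0.5, 1]
gaps = [b - a for a, b in zip(levels, levels[1:])]
q = shift_quantize([0.0, 0.1, 0.3, -0.7, 0.9], levels)
```

In a magnitude-pruned layer, the surviving weights sit away from zero, so the four finest levels around zero are mostly unused while the large weights get the coarsest steps.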

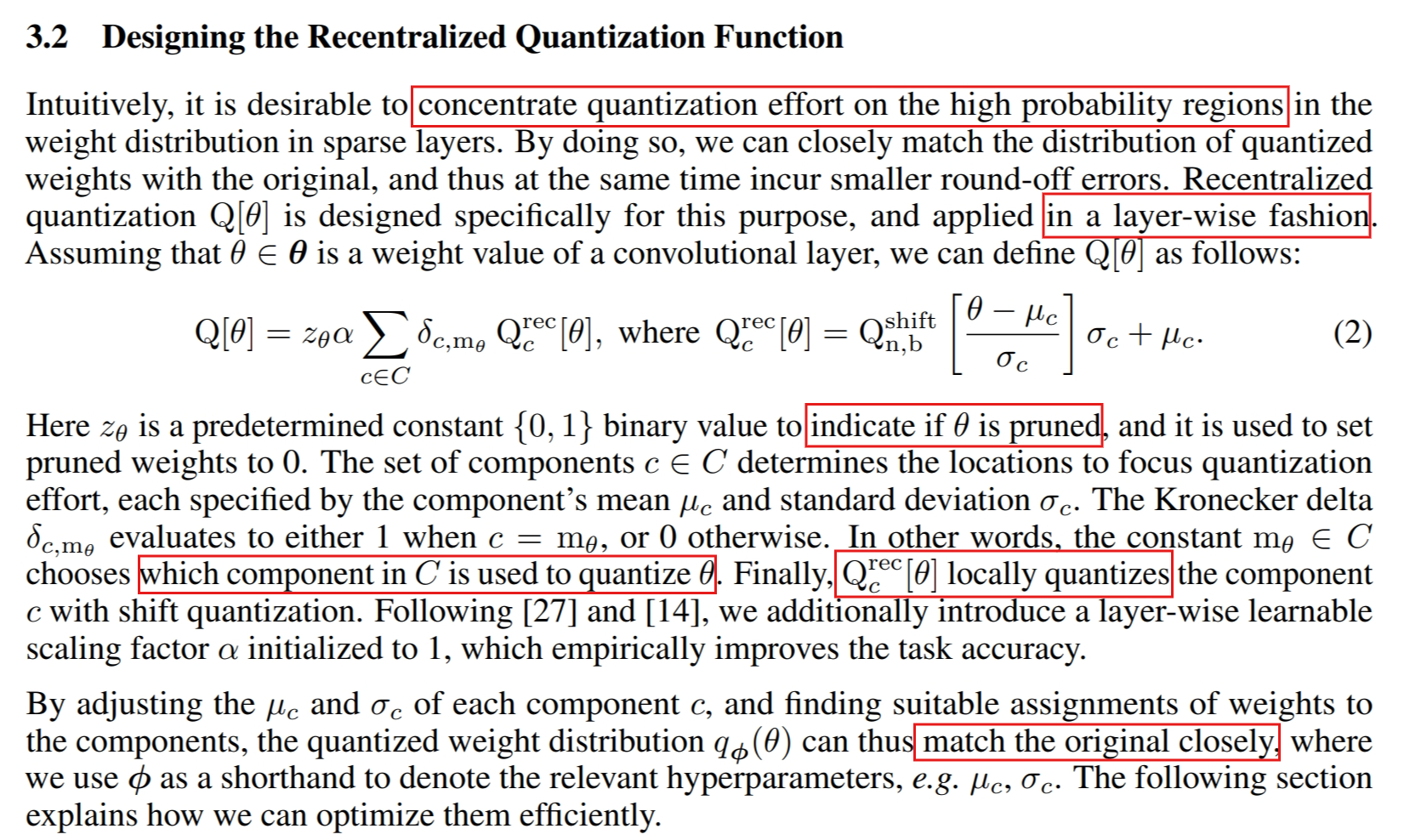

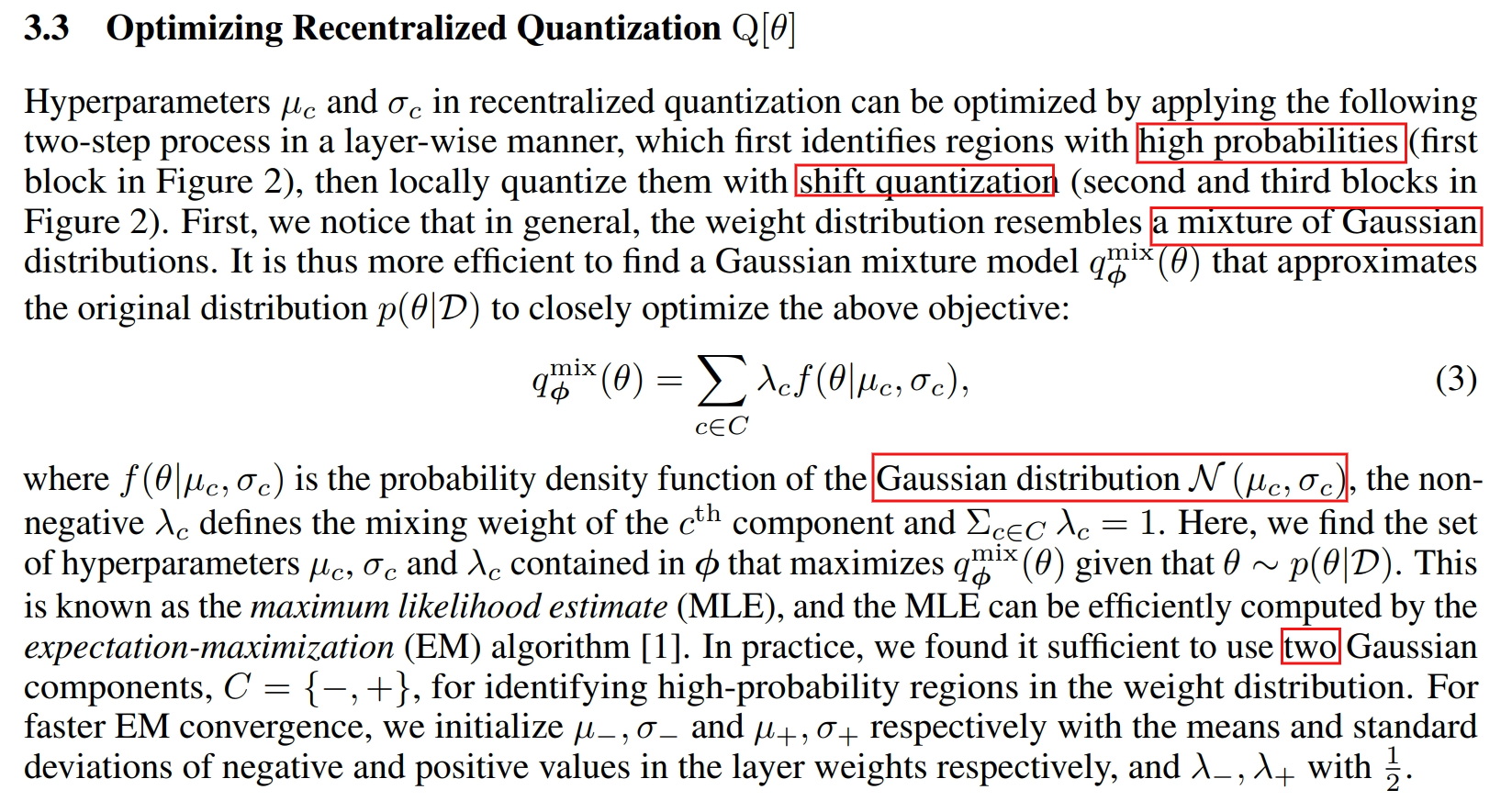

We address both issues by proposing a new approach to quantizing parameters in CNNs which we call focused quantization (FQ) that mixes shift and re-centralized quantization methods.

…is used to select which quantized component better fits the original distribution.
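A simplified sketch of re-centralized quantization follows. Pruned weights stay exactly zero. Each surviving weight is assigned to one component center, and only its offset from that center is quantized to shift levels. The centers (one per mode of the pruned distribution) and the nearest-center choice are hypothetical stand-ins for FQ's component-selection step, not the paper's exact procedure.

```python
def recentralized_quantize(values, centers, offsets):
    """Quantize nonzero weights as center + offset around the nearest center (sketch)."""
    out = []
    for v in values:
        if v == 0.0:
            out.append(0.0)  # pruned weights stay exactly zero
            continue
        c = min(centers, key=lambda m: abs(v - m))  # hypothetical component choice
        off = min(offsets, key=lambda o: abs(o - (v - c)))  # shift-level offset
        out.append(c + off)
    return out

q = recentralized_quantize([0.0, 0.55, -0.3, 0.8], centers=[-0.5, 0.5],
                           offsets=[-0.25, -0.125, 0.0, 0.125, 0.25])
```

Placing the fine levels around each mode, rather than around zero, spends precision where a pruned layer's weights actually lie.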

Knowledge Distillation

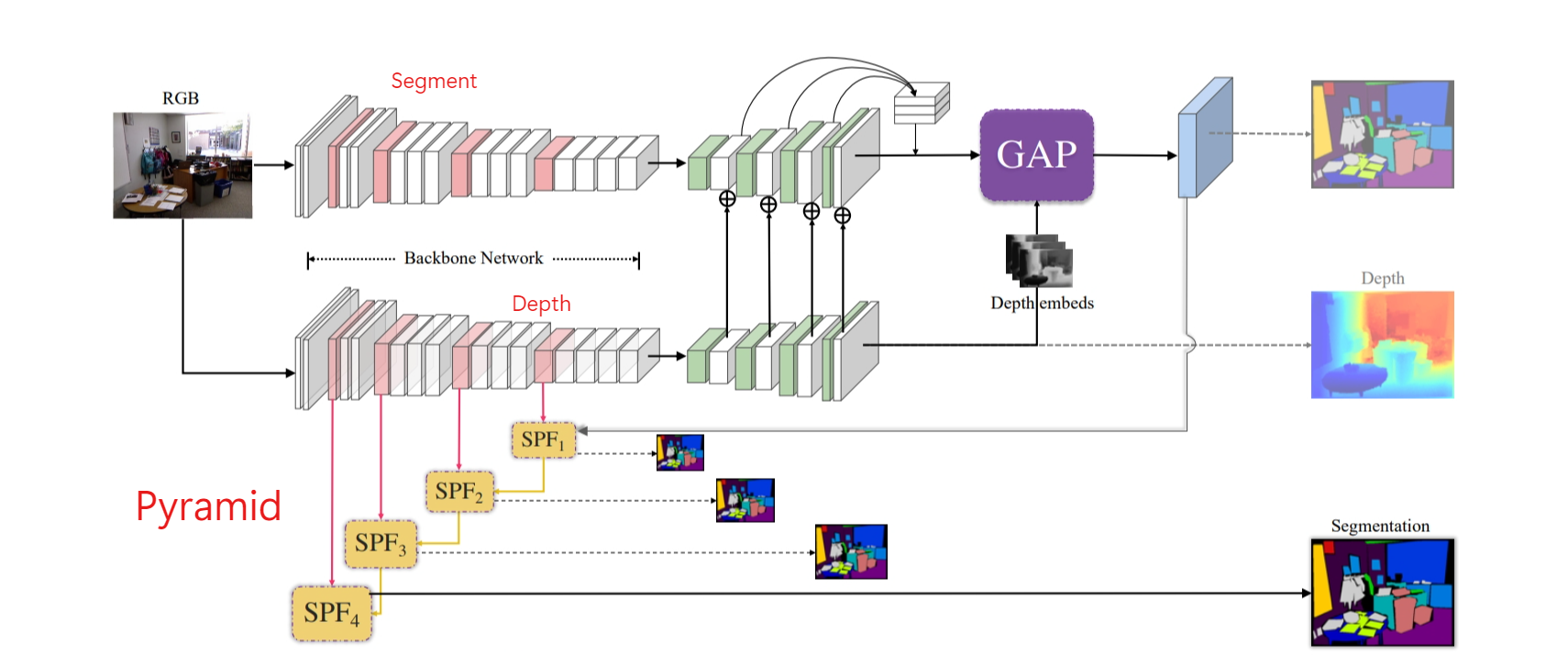

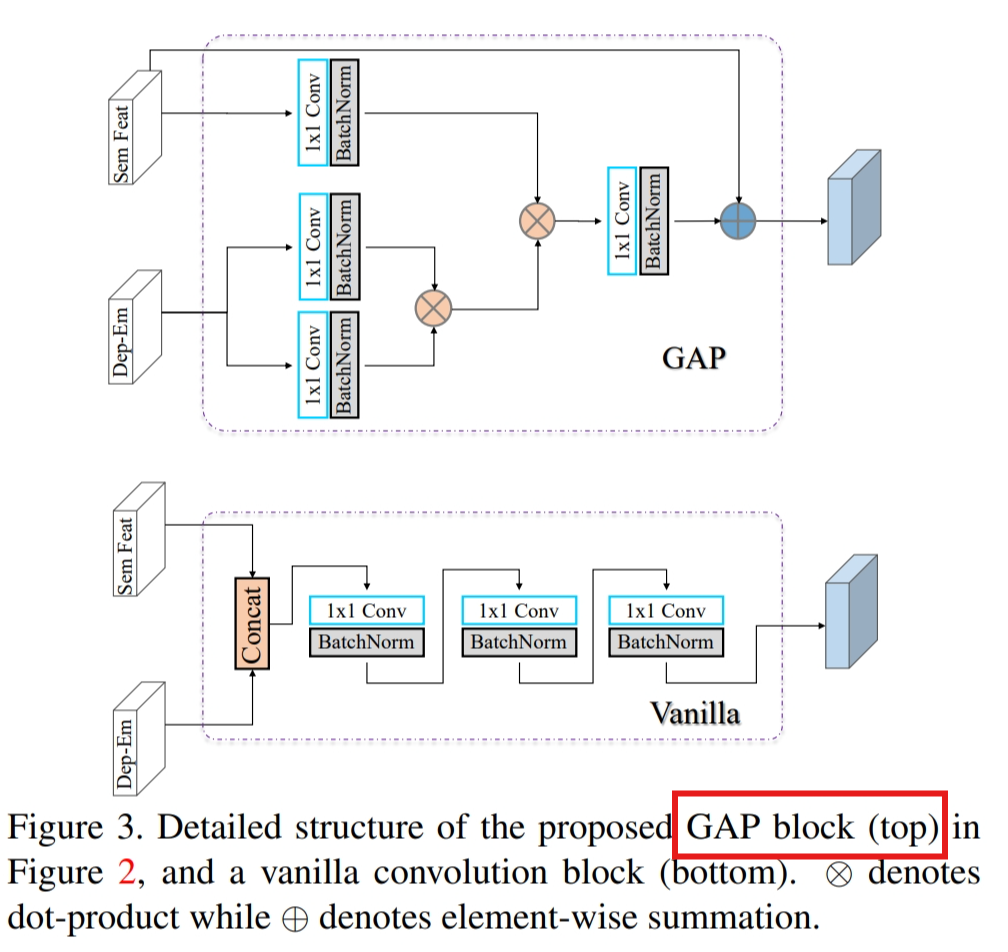

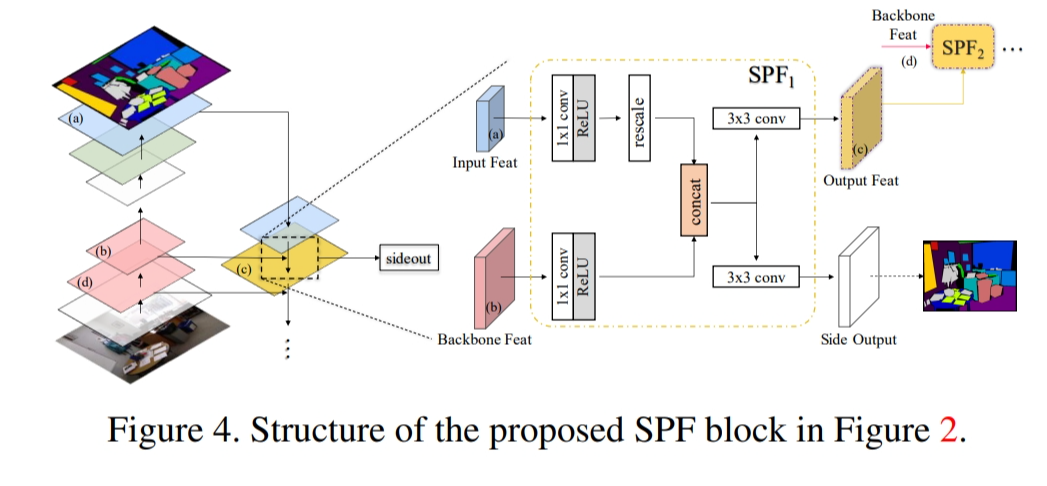

Geometry-Aware Distillation for Indoor Semantic Segmentation

https://ieeexplore.ieee.org/document/8954087

Structured Knowledge Distillation for Semantic Segmentation

https://ieeexplore.ieee.org/document/8954081

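Teacher and Student

Pair-wise distillation: the student learns the association between each pair of pixels from the teacher.

Pixel-wise distillation: the student simply learns the teacher's classification result for each pixel.

Holistic distillation: like a GAN, a discriminator network compares the outputs of the teacher and the student.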
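The pixel-wise and pair-wise terms can be sketched on tiny nested lists, as we understand them. The pixel-wise term is the KL divergence from the teacher's to the student's per-pixel class distribution, averaged over pixels. The pair-wise term is the mean squared difference between teacher and student cosine similarities over all pixel pairs. The function names and toy inputs are hypothetical, and the adversarial holistic term is omitted.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pixel_wise_loss(student_logits, teacher_logits):
    """Mean over pixels of KL(teacher || student) between class distributions."""
    loss = 0.0
    for s, t in zip(student_logits, teacher_logits):
        p_s, p_t = softmax(s), softmax(t)
        loss += sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return loss / len(student_logits)

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def pair_wise_loss(student_feats, teacher_feats):
    """Mean squared difference between pixel-pair cosine similarities."""
    n = len(student_feats)
    loss = 0.0
    for i in range(n):
        for j in range(n):
            a_s = cosine(student_feats[i], student_feats[j])
            a_t = cosine(teacher_feats[i], teacher_feats[j])
            loss += (a_s - a_t) ** 2
    return loss / (n * n)

teacher_logits = [[2.0, 0.5, -1.0], [0.1, 1.0, 0.3]]  # 2 pixels, 3 classes
teacher_feats = [[1.0, 0.0], [1.0, 1.0], [0.0, 2.0]]  # 3 pixels, 2-d features
```

Because cosine similarity ignores feature scale, the pair-wise term only asks the student to reproduce the teacher's pixel-to-pixel structure, not its feature magnitudes.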

Unifying Heterogeneous Classifiers with Distillation

https://arxiv.org/abs/1904.06062

Merges heterogeneous classifiers into a single classifier.

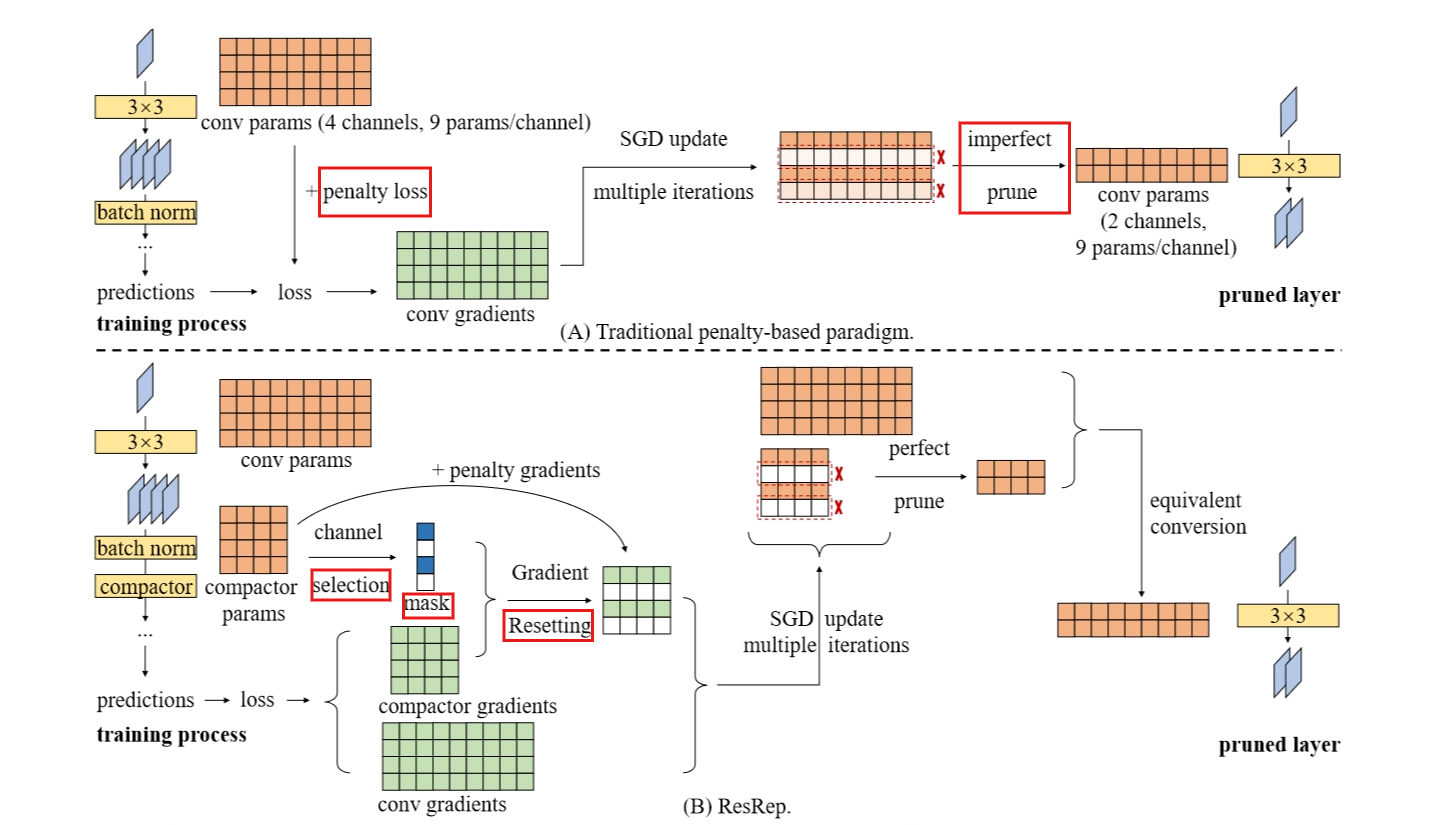

Typical Pruning Paradigm

Auto-balanced filter pruning for efficient convolutional neural networks

https://ojs.aaai.org/index.php/AAAI/article/view/12262

The word "auto-balanced" has two meanings.

On the one hand, according to Eq. 13, the intensity of stimulation on strong filters varies with the weak ones. When the weak filters are zeroed out, the stimulation automatically stops and the training converges.

On the other hand, as the weak filters in a certain layer are weakened and the strong ones are stimulated, the representational capacity of the weak part is gradually transferred to the strong part, keeping the whole layer’s representational capacity unharmed.

Resistance & Prunability

We evaluate a training-based pruning method from two aspects.

Resistance

We say a model has high resistance if its performance remains high during training.

Prunability

If the model endures a high pruning ratio with low performance drop, we say it has high prunability.

We desire both high resistance and prunability, but the traditional penalty-based paradigm naturally suffers from a resistance-prunability trade-off.

Two Key Components

ResRep comprises two key components: Convolutional Re-parameterization (Rep, the methodology of decoupling and the corresponding equivalent conversion) and Gradient Resetting (Res, the update rule for “forgetting”).

Related Works

The lottery ticket hypothesis: Finding sparse, trainable neural networks

This hypothesis states that a dense, randomly initialized network contains a small subnetwork (the "winning ticket") that, when trained in isolation from the same initialization, can match the test accuracy of the full network. These subnetworks are sparse, i.e., only a small fraction of the parameters is non-zero.
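The original paper finds winning tickets with magnitude pruning plus rewinding: train, prune the smallest-magnitude surviving weights, and reset the survivors to their initial values, optionally repeating this over several rounds. One round can be sketched on flat lists as below. The function name and flat-list representation are hypothetical, and training is omitted (the trained weights are passed in).

```python
def winning_ticket_round(init_weights, trained_weights, mask, prune_fraction):
    """Prune the smallest-magnitude surviving trained weights, then rewind survivors to init."""
    alive = [i for i, m in enumerate(mask) if m]
    n_prune = int(len(alive) * prune_fraction)
    ranked = sorted(alive, key=lambda i: abs(trained_weights[i]))
    new_mask = list(mask)
    for i in ranked[:n_prune]:
        new_mask[i] = 0
    # survivors restart from their original initialization, pruned ones stay zero
    rewound = [w0 if m else 0.0 for w0, m in zip(init_weights, new_mask)]
    return new_mask, rewound

mask, rewound = winning_ticket_round([0.1, 0.2, 0.3, 0.4], [0.9, -0.05, 0.5, 0.01],
                                     [1, 1, 1, 1], prune_fraction=0.5)
```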

Pruning-then-Fine-tuning

Some methods repeat pruning-finetuning iterations to measure the importance and prune progressively.

A major drawback is that the pruned models can easily be trapped in bad local minima, and sometimes cannot even reach a level of accuracy similar to that of a counterpart with the same structure trained from scratch.

This discovery highlights the significance of perfect pruning, which eliminates the need for fine-tuning.

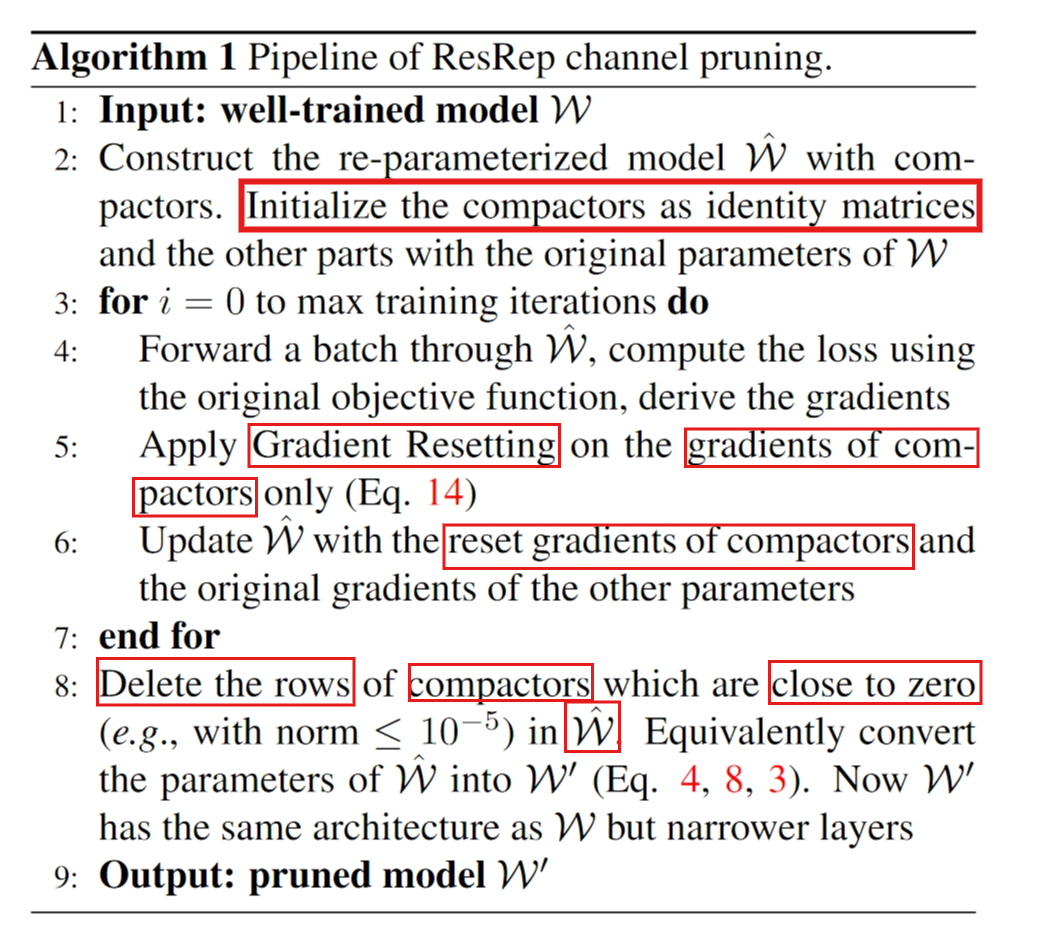

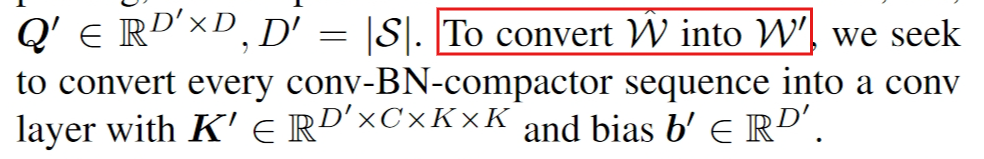

ResRep for Lossless Channel Pruning

Convolutional Re-parameterization

For every conv layer, together with the following BN (if any), that we desire to prune (referred to as the target layers), we append a compactor (1 × 1 conv) with kernel $Q \in \mathbb{R}^{D \times D}$, where D is the number of output channels of the target conv.

Given a well-trained model W, we construct a re-parameterized model Ŵ by initializing the conv-BN layers with the original weights of W and Q as an identity matrix, so that the re-parameterized model produces outputs identical to those of the original.

Details on how to calculate them can be found in the paper.
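The equivalence claim can be checked on a toy layer. Inference-mode BN folds into the preceding conv as K' = (γ/σ)K and b' = (γ/σ)(b − μ) + β, where σ = √(var + ε). An identity-initialized compactor then leaves the output unchanged. The sketch evaluates a single spatial position with flattened kernels. The helper names are hypothetical, and the compactor is assumed to have no bias.

```python
import math

def linear(kernel, bias, x):
    """Conv at one spatial position: each row of `kernel` is a flattened output channel."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(kernel, bias)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN, channel by channel."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]

def fuse_conv_bn(kernel, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the conv: K' = (gamma/std) K, b' = (gamma/std)(b - mean) + beta."""
    fused_k, fused_b = [], []
    for row, b, g, bt, m, s in zip(kernel, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(s + eps)
        fused_k.append([scale * w for w in row])
        fused_b.append(scale * (b - m) + bt)
    return fused_k, fused_b

kernel, bias = [[1.0, -1.0], [0.5, 2.0]], [0.0, 0.1]
bn = dict(gamma=[2.0, 0.5], beta=[0.1, -0.3], mean=[0.2, 0.0], var=[4.0, 1.0])
x = [1.0, 0.5]

reference = batch_norm(linear(kernel, bias, x), **bn)
fused_k, fused_b = fuse_conv_bn(kernel, bias, **bn)
fused_out = linear(fused_k, fused_b, x)

# Compactor initialized as the identity (1x1 conv = per-position matrix product, no bias)
n = len(fused_k)
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
with_compactor = linear(identity, [0.0] * n, fused_out)
```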

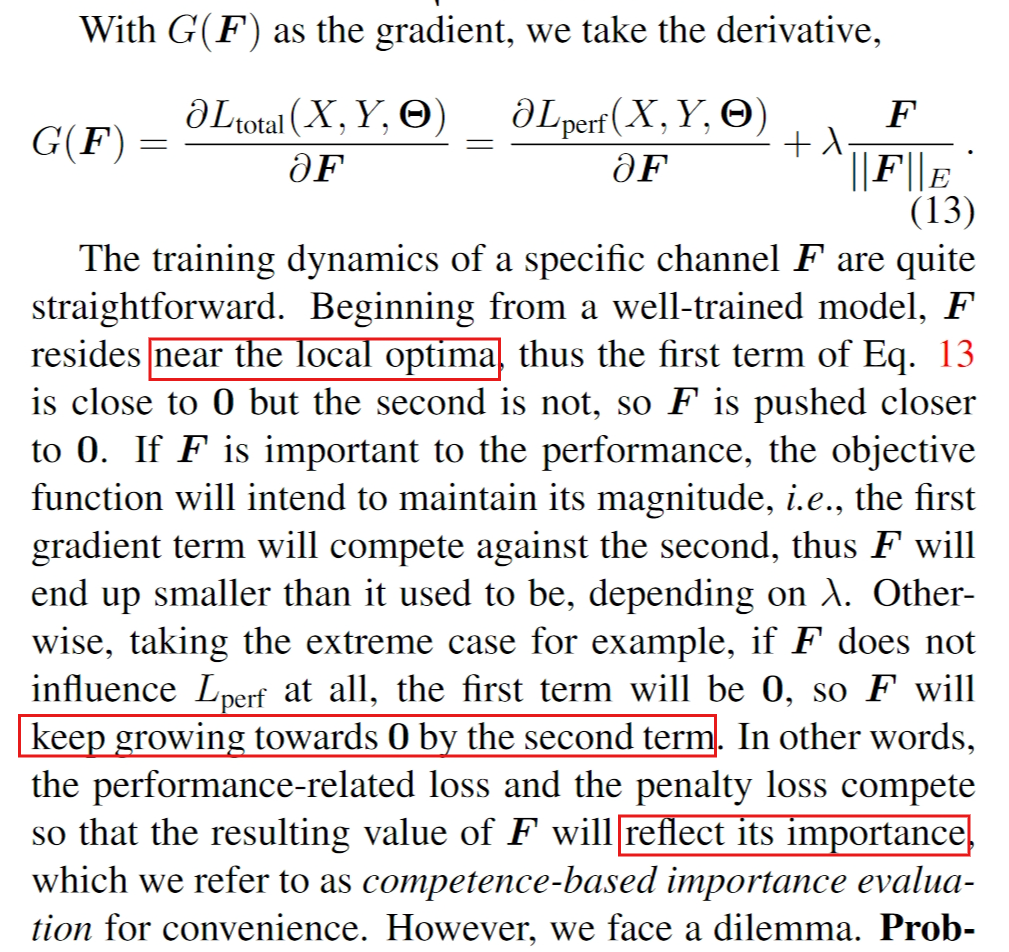

Gradient Resetting

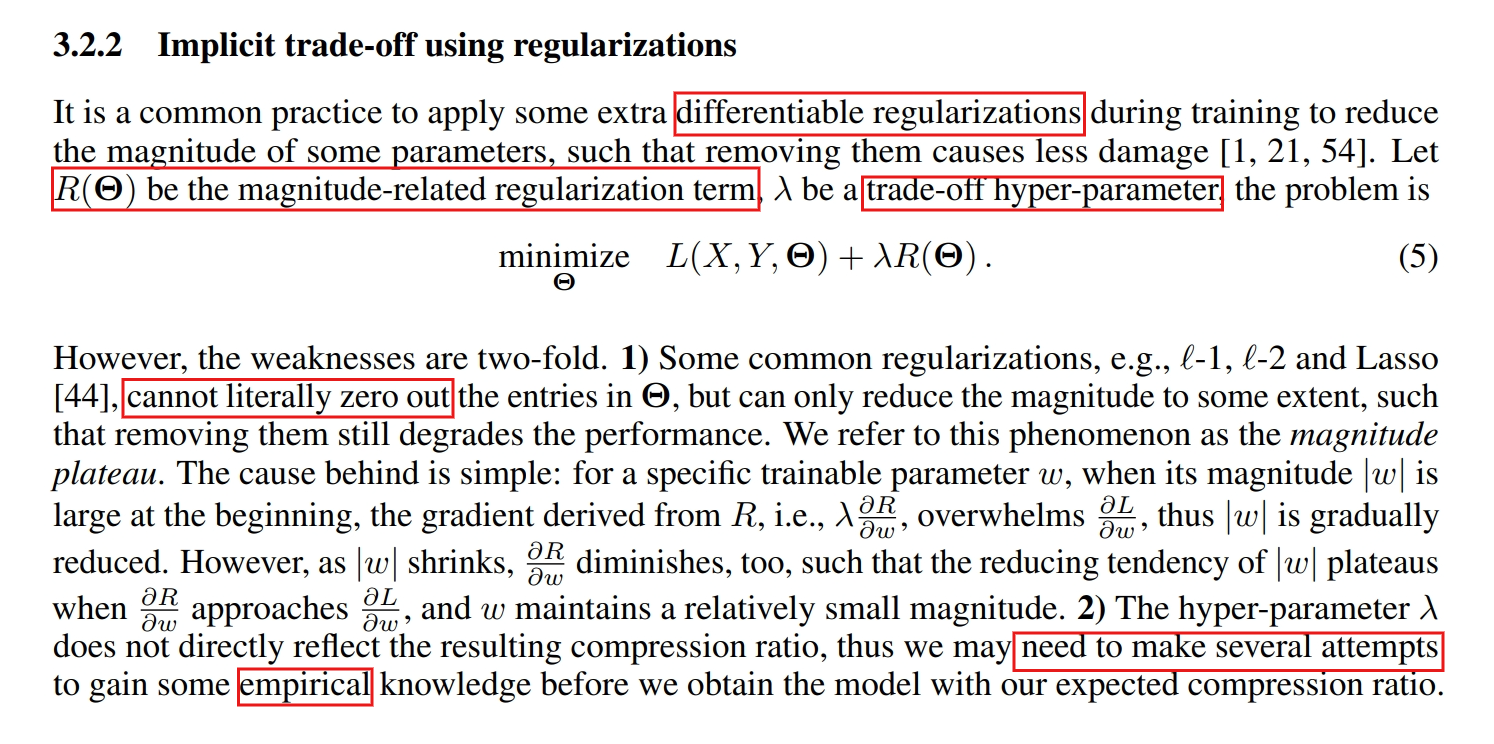

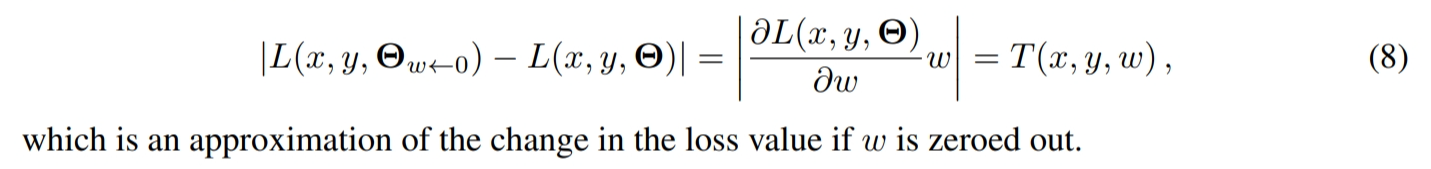

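We describe how to produce structured sparsity in compactors while maintaining accuracy, beginning with the traditional use of a penalty on a specific kernel K to make the magnitudes of some channels smaller, i.e.,

$$L_{\text{total}}(X, Y, \Theta) = L_{\text{perf}}(X, Y, \Theta) + \lambda P(K)$$

where $P$ is a penalty function such as group Lasso, $P(K) = \sum_{j} \lVert K_{j,:,:,:} \rVert_E$. We denote a specific channel in K by $F$; its gradient is then

$$G(F) = \frac{\partial L_{\text{perf}}(X, Y, \Theta)}{\partial F} + \lambda \frac{F}{\lVert F \rVert_E}.$$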

competence-based importance evaluation

Dilemma Inside

Problem A: The penalty deviates the parameters of every channel from the optima of the objective function.

Notably, a mild deviation may not bring negative effects; e.g., L2 regularization can also be viewed as a mild deviation.

However, with a strong penalty, though some channels are zeroed out for pruning, the remaining channels are also made too small to maintain the representational capacity, which is an undesired side effect.

Problem B: With a mild penalty for high resistance, we cannot achieve high prunability, because most of the channels merely become closer to 0 than they used to be, but not close enough for perfect pruning.

Solution

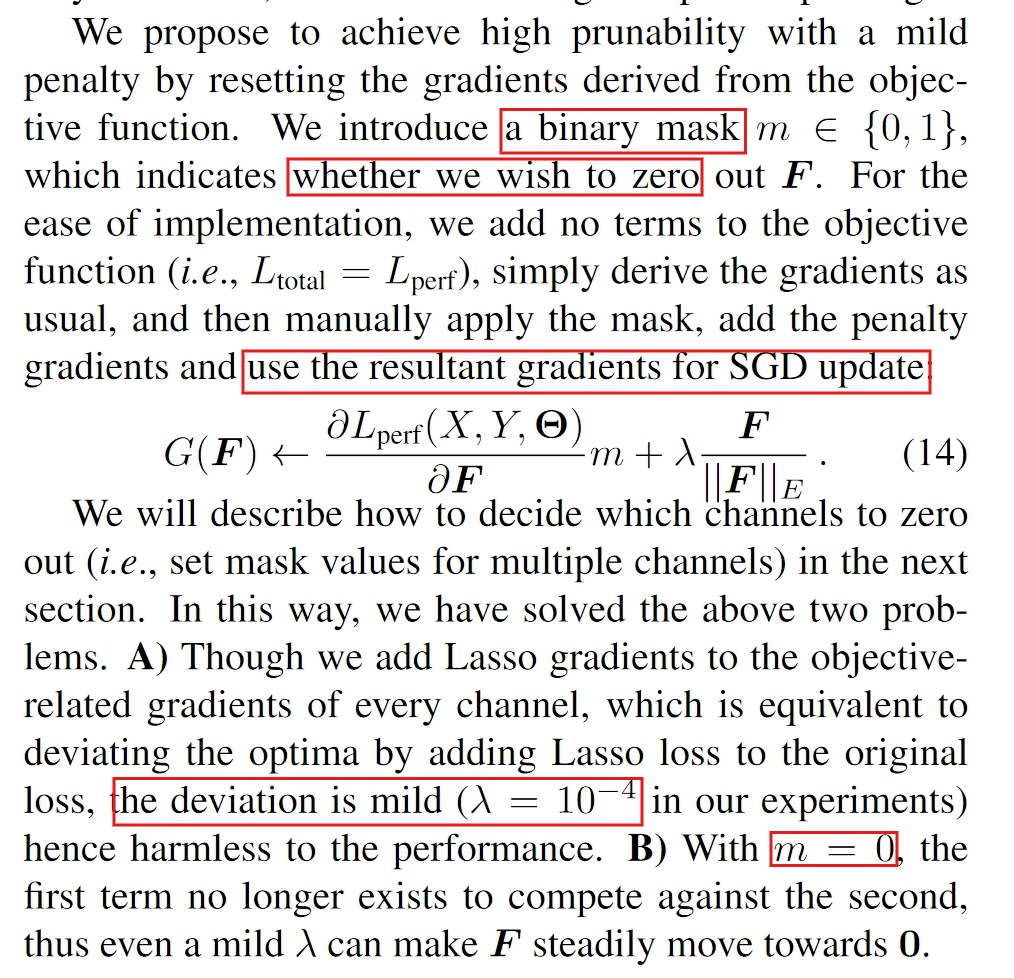

We propose to achieve high prunability with a mild penalty by resetting the gradients derived from the objective function.
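The effect of resetting can be illustrated on a single channel. This assumes the per-channel update takes the form G(F) = (∂L_perf/∂F)·m + λF/‖F‖_E, with m = 1 for remembering channels and m = 0 for forgetting ones. The toy objective 0.5·‖F − F*‖² and all names and hyperparameters are hypothetical. With the objective gradient kept, a mild λ only moves the channel to a non-zero equilibrium (Problem B). With it reset, the same mild penalty drives the channel toward zero.

```python
import math

def resrep_gradient(channel, perf_grad, mask, lam):
    """Gradient for one channel F: mask * dL_perf/dF + lam * F / ||F||_E (sketch)."""
    norm = math.sqrt(sum(v * v for v in channel))
    penalty = [lam * v / (norm + 1e-12) for v in channel]  # eps avoids 0/0
    return [mask * g + p for g, p in zip(perf_grad, penalty)]

def train_channel(optimum, mask, lam=0.5, lr=0.1, steps=150):
    """Plain SGD on the toy objective 0.5 * ||F - optimum||^2 plus the group-Lasso term."""
    channel = list(optimum)  # start from the well-trained value
    for _ in range(steps):
        perf_grad = [f - o for f, o in zip(channel, optimum)]
        grad = resrep_gradient(channel, perf_grad, mask, lam)
        channel = [f - lr * g for f, g in zip(channel, grad)]
    return math.sqrt(sum(v * v for v in channel))

remembered = train_channel([3.0, 4.0], mask=1)  # settles at ||F*|| - lam = 4.5
forgotten = train_channel([3.0, 4.0], mask=0)   # shrinks to near zero
```

In this toy the forgotten channel shrinks by lr·λ per step and then hovers within one such step of zero, after which it can be removed with no meaningful change to the output.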

The Remembering Parts Remember Always, the Forgetting Parts Forget Progressively

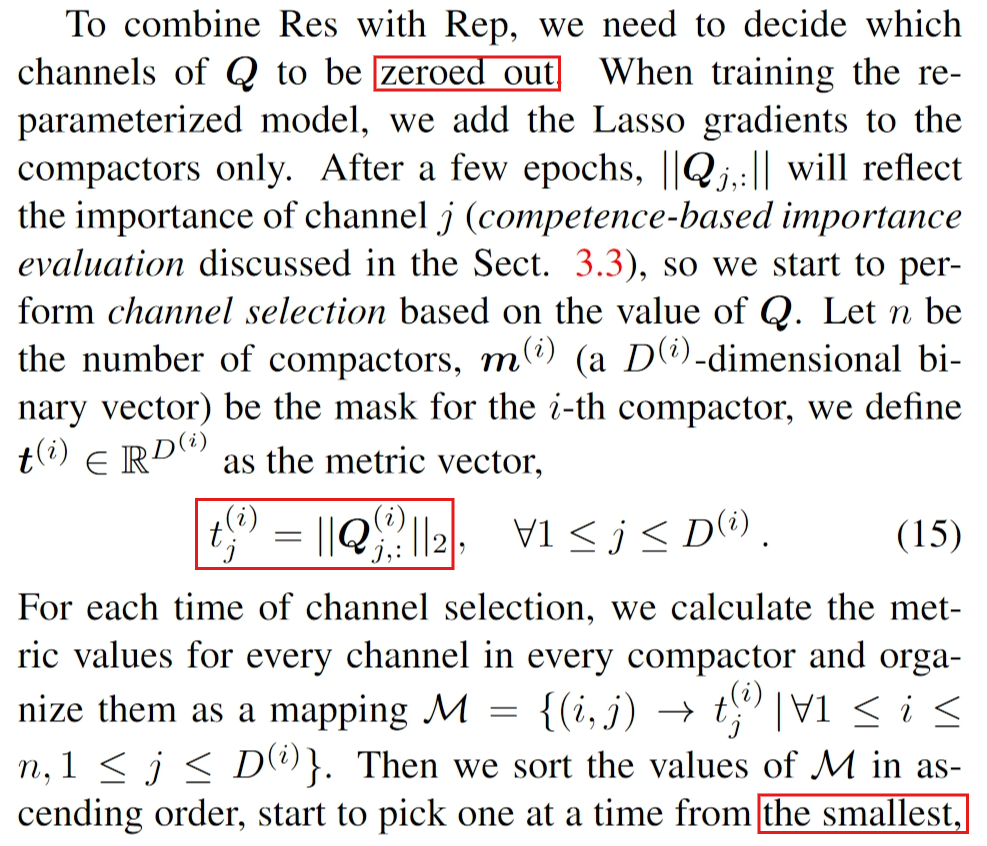

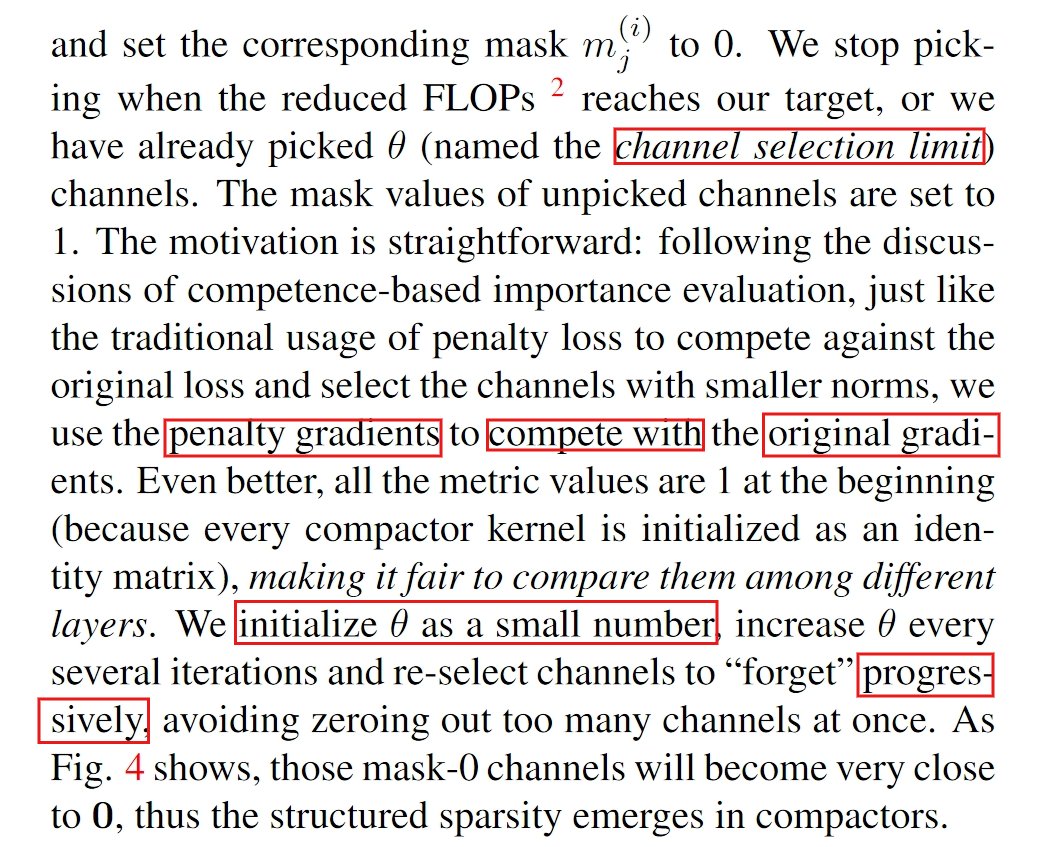

To combine Res with Rep, we need to decide which channels of Q are to be zeroed out.
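One way to make this decision is sketched below. It assumes, as a simplification, that each compactor row (one output channel) is scored by its L2 norm, that rows are ranked globally across all compactors, and that a fixed number of the lowest-scoring rows are masked while every layer keeps at least `min_keep` channels. As we understand the paper, ResRep instead targets a FLOPs budget and enlarges the mask progressively during training. The channel-count budget, `min_keep`, and the function name are hypothetical.

```python
import math

def select_channels_to_mask(compactors, n_to_mask, min_keep=1):
    """Globally pick the compactor rows with the smallest L2 norm.

    compactors: dict mapping layer name -> Q as a list of rows.
    Returns a set of (layer_name, row_index) pairs whose mask m is set to 0.
    """
    scores = []
    for name, q in compactors.items():
        for j, row in enumerate(q):
            scores.append((math.sqrt(sum(v * v for v in row)), name, j))
    scores.sort()  # smallest norm first, across all layers
    remaining = {name: len(q) for name, q in compactors.items()}
    masked = set()
    for _, name, j in scores:
        if len(masked) == n_to_mask:
            break
        if remaining[name] > min_keep:  # never empty a layer completely
            masked.add((name, j))
            remaining[name] -= 1
    return masked

compactors = {"a": [[1.0, 0.0], [0.0, 0.1]], "b": [[0.2, 0.0], [0.0, 3.0]]}
masked = select_channels_to_mask(compactors, n_to_mask=2)
```

Ranking globally lets layers that tolerate pruning lose more channels than sensitive ones, while `min_keep` prevents any layer from being removed entirely.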