Post-Training Quantization for Vision Transformer

Abstract

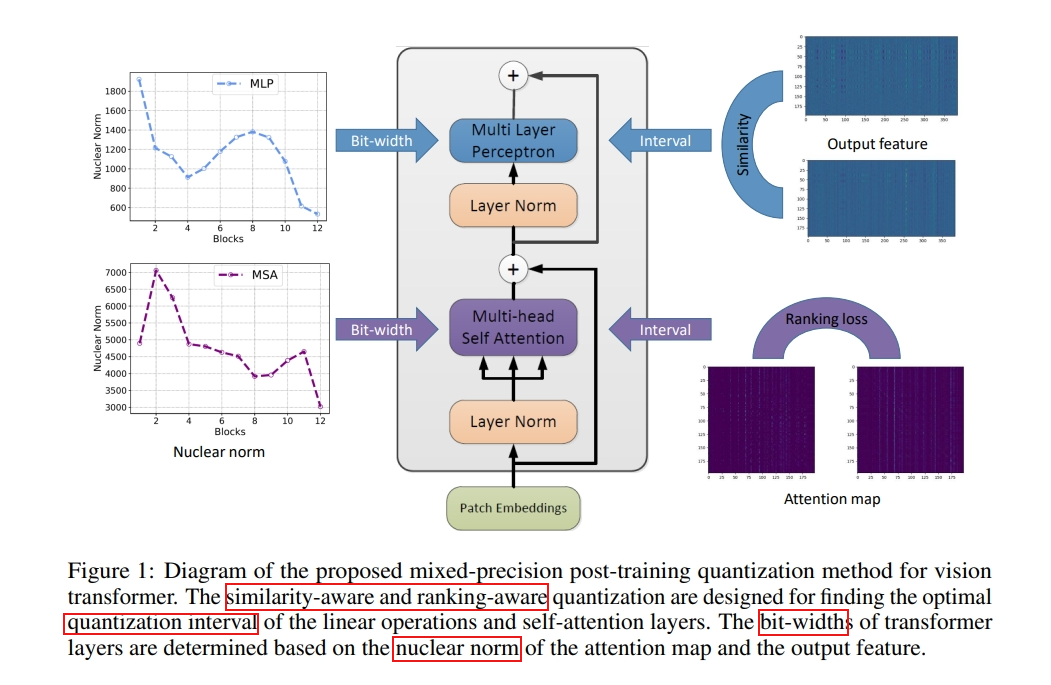

In this paper, we present an effective post-training quantization algorithm for reducing the memory storage and computational costs of vision transformers.

Basically, the quantization task can be regarded as finding the optimal low-bit quantization intervals for weights and inputs, respectively.

To preserve the functionality of the attention mechanism, we introduce a ranking loss into the conventional quantization objective that aims to keep the relative order of the self-attention results after quantization.

Moreover, we thoroughly analyze the relationship between quantization loss of different layers and the feature diversity, and explore a mixed-precision quantization scheme by exploiting the nuclear norm of each attention map and output feature.

Introduction

Quantization in CNN

Trained ternary quantization

https://arxiv.org/pdf/1612.01064.pdf

In this paper, we propose Trained Ternary Quantization, which uses two full-precision scaling coefficients W^p_l and W^n_l for each layer l, and quantizes the weights to {−W^n_l, 0, +W^p_l} instead of the traditional {−1, 0, +1} or {−E, 0, +E}, where E is the mean absolute weight value.
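
A minimal sketch of the ternary mapping described above, covering the forward pass only. The threshold rule (zero out weights whose magnitude is at most `t` times the largest magnitude) and the value `t=0.05` are assumptions of this sketch, and in the actual method the two scaling coefficients are learned during training rather than passed in by hand.

```python
import numpy as np

def ternarize(weights, w_pos, w_neg, t=0.05):
    """Map full-precision weights of one layer to {-w_neg, 0, +w_pos}.

    t is a hypothetical threshold factor: weights whose magnitude is at
    most t * max|w| are set to zero.
    """
    delta = t * np.max(np.abs(weights))
    out = np.zeros_like(weights, dtype=float)
    out[weights > delta] = w_pos     # large positive weights share one scale
    out[weights < -delta] = -w_neg   # large negative weights share another
    return out

w = np.array([0.8, -0.02, 0.03, -0.6, 0.4])
print(ternarize(w, w_pos=0.7, w_neg=0.5))
```

Using separate positive and negative scales is what distinguishes this scheme from a symmetric {−E, 0, +E} codebook.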

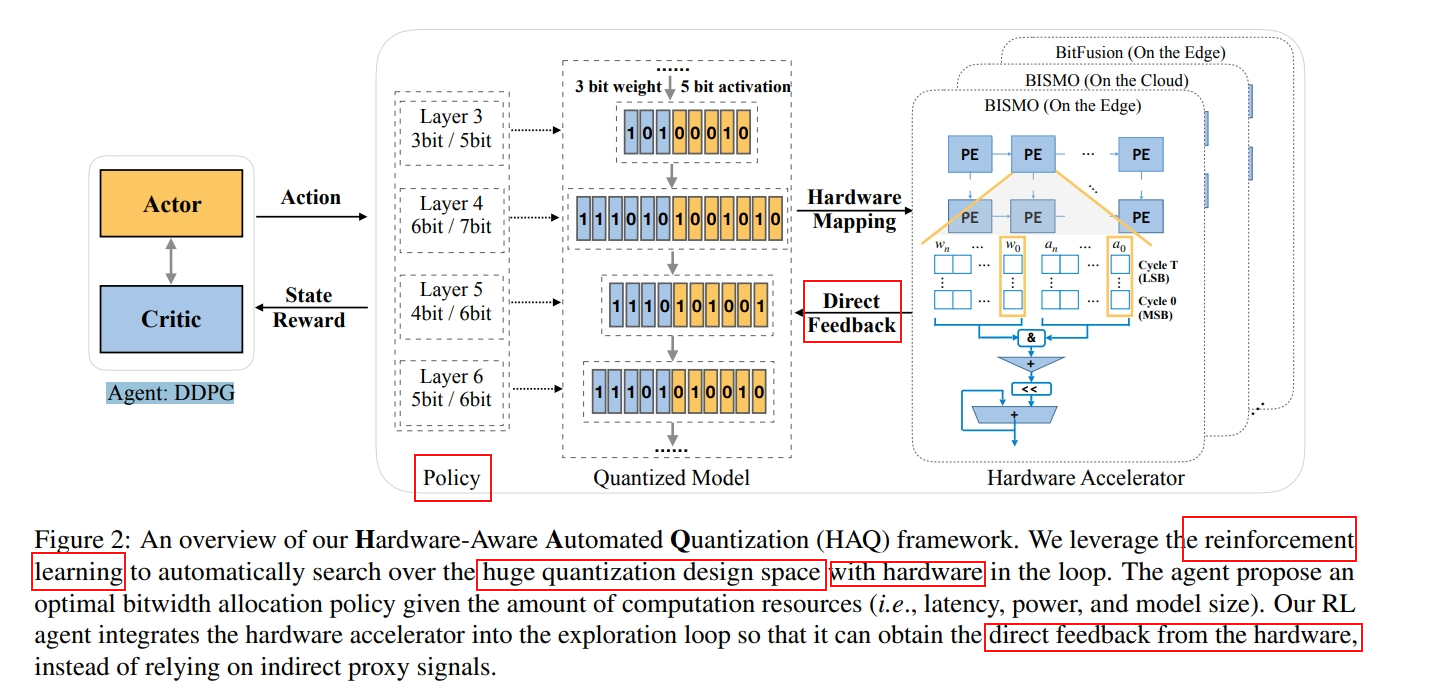

HAQ: Hardware-Aware Automated Quantization with Mixed Precision

https://arxiv.org/pdf/1811.08886.pdf

PACT: Parameterized Clipping Activation for Quantized Neural Networks

https://arxiv.org/pdf/1805.06085.pdf

The modified activation is as follows.

PACT: A new activation quantization scheme for finding the optimal quantization scale during training. We introduce a new parameter α that is used to represent the clipping level in the activation function and is learned via back-propagation. α sets the quantization scale smaller than ReLU to reduce the quantization error, but larger than a conventional clipping activation function (used in previous schemes) to allow gradients to flow more effectively. In addition, regularization is applied to α in the loss function to enable faster convergence.
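
The clipping activation and its k-bit quantization can be sketched as follows. This is a forward-pass illustration only: in PACT the clipping level α is a learned parameter, while here it is a plain argument, and the function names are assumptions of this sketch.

```python
import numpy as np

def pact(x, alpha):
    """Clipped activation: 0 for x < 0, x for 0 <= x < alpha, alpha otherwise."""
    return 0.5 * (np.abs(x) - np.abs(x - alpha) + alpha)

def pact_quantize(x, alpha, k):
    """Quantize the clipped activation to k bits on the range [0, alpha]."""
    levels = 2 ** k - 1
    y = pact(x, alpha)
    return np.round(y * levels / alpha) * alpha / levels

x = np.array([-1.0, 0.5, 2.0, 7.0])
print(pact(x, alpha=6.0))
print(pact_quantize(x, alpha=6.0, k=2))
```

A smaller α shrinks the quantization step α / (2^k − 1) but clips more activations, which is the trade-off the learned parameter balances.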

Quantization in Transformer

Q-BERT

Q-BERT: Hessian Based Ultra Low Precision Quantization of BERT

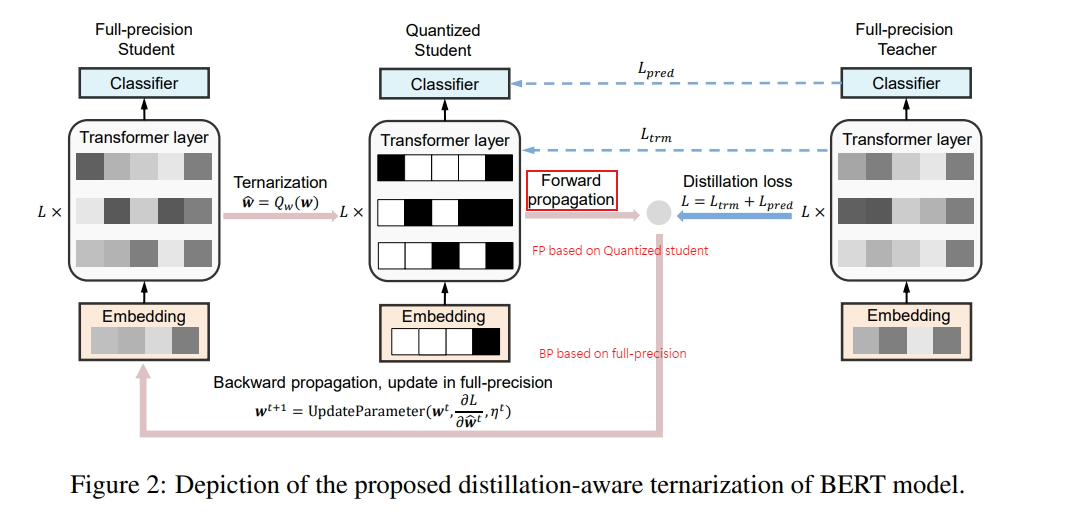

TernaryBERT: Distillation-aware Ultra-low Bit BERT

Our Contributions

In this paper, we study the post-training quantization method for vision transformer models with mixed-precision for higher compression and speed-up ratios.

The quantization process in the transformer is formulated as an optimization problem for finding the optimal quantization intervals. Specifically, our goal is to maximize the similarity between the full-precision and quantized outputs in vision transformers.

Vision Transformer

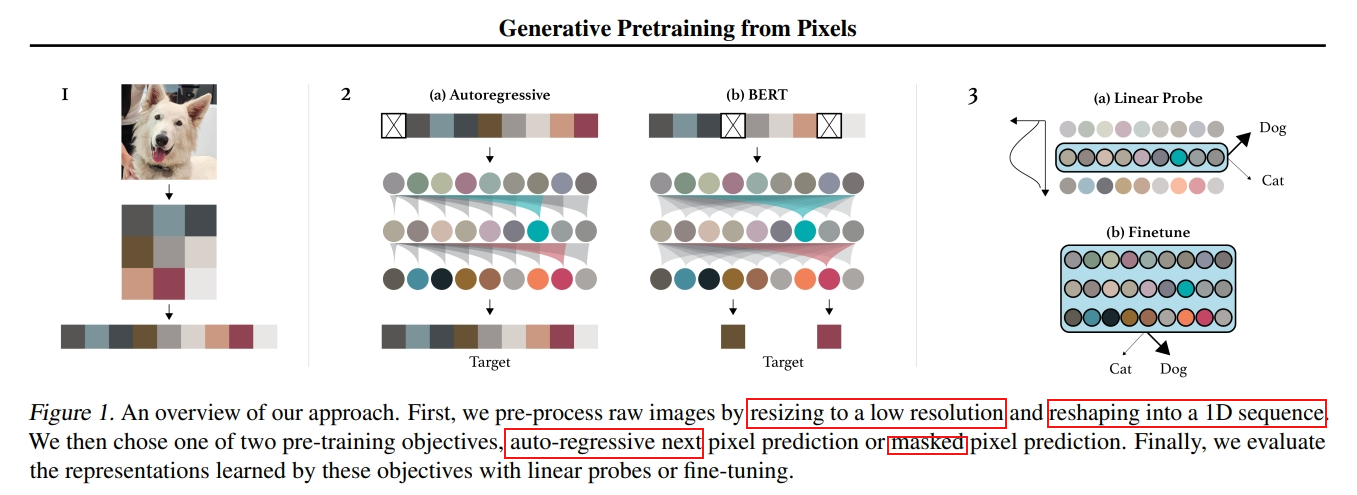

Generative Pretraining from Pixels

https://dl.acm.org/doi/pdf/10.5555/3524938.3525096

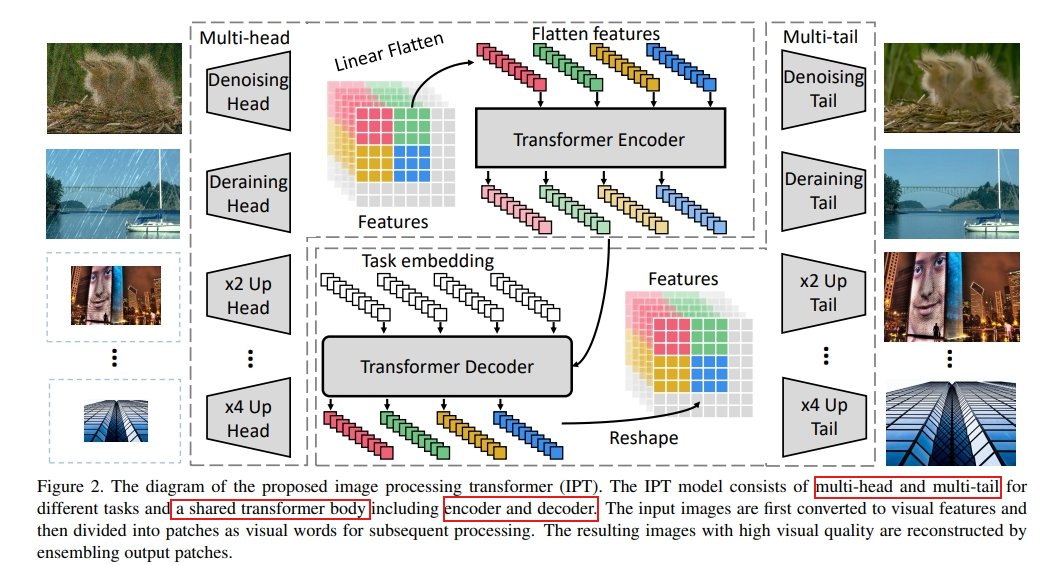

Pre-trained image processing transformer

https://arxiv.org/pdf/2012.00364.pdf

Post-Training Quantization

ACIQ method

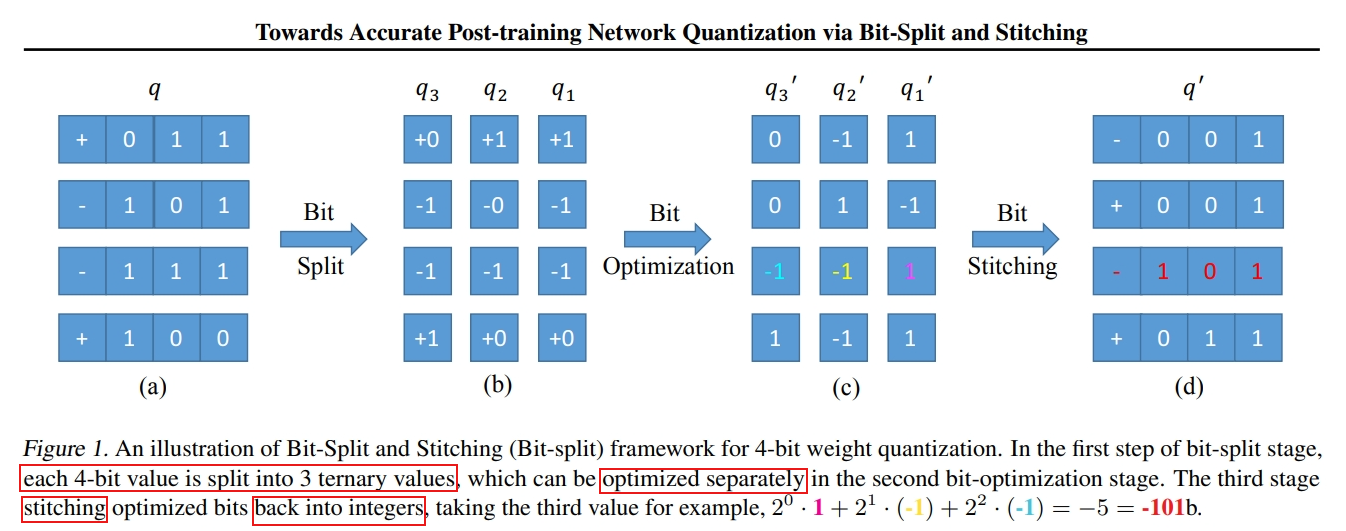

Towards Accurate Post-training Network Quantization via Bit-Split and Stitching

https://dl.acm.org/doi/pdf/10.5555/3524938.3525851

AdaRound

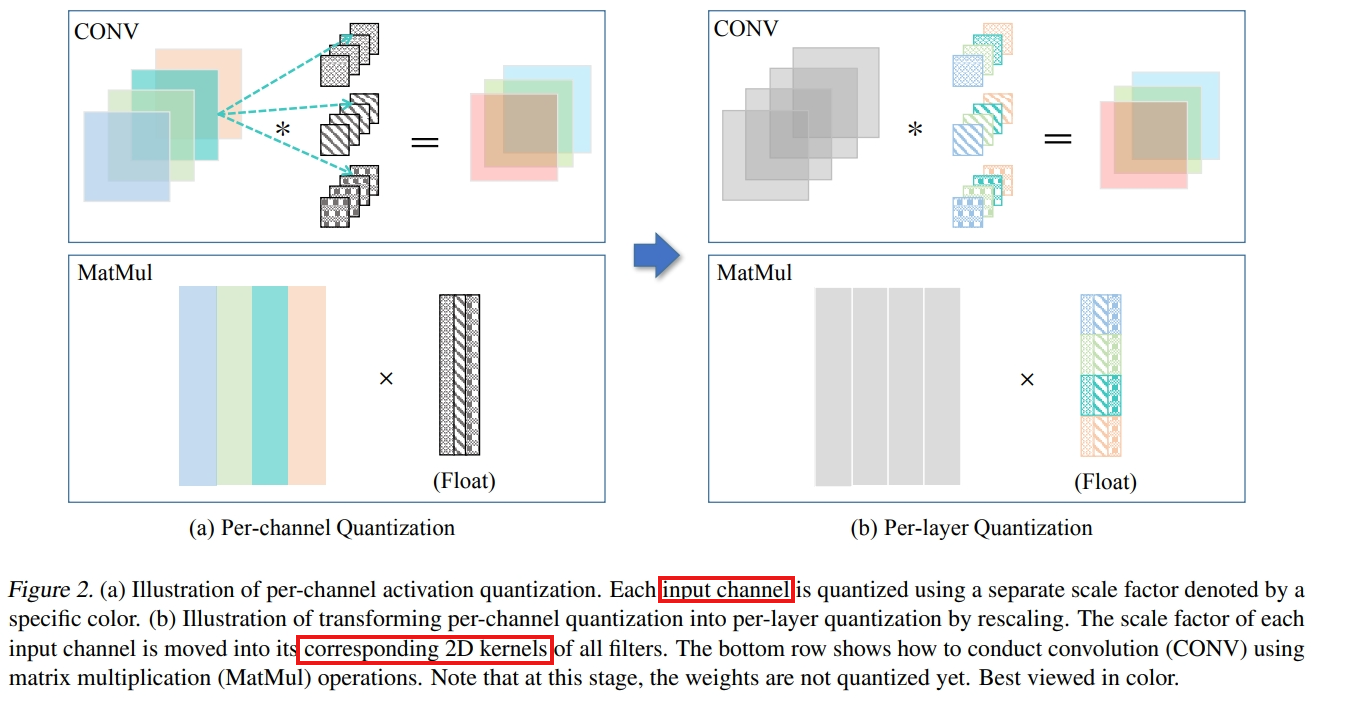

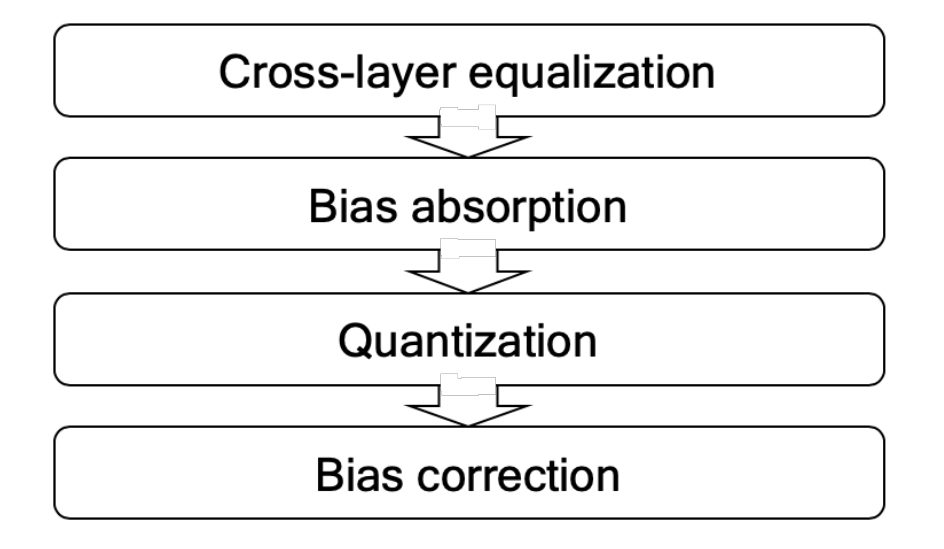

Data-Free Quantization Through Weight Equalization and Bias Correction

https://arxiv.org/pdf/1906.04721.pdf

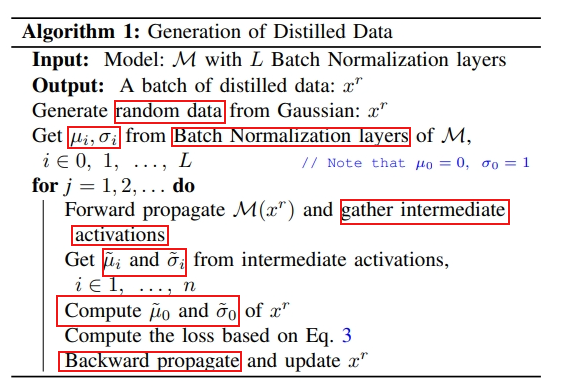

ZeroQ: A novel zero shot quantization framework

https://arxiv.org/pdf/2001.00281.pdf

ZEROQ enables mixed-precision quantization without any access to the training or validation data.

This is achieved by optimizing for a Distilled Dataset, which is engineered to match the statistics of batch normalization across different layers of the network.

ZEROQ supports both uniform and mixed-precision quantization. For the latter, we introduce a novel Pareto frontier based method to automatically determine the mixed-precision bit setting for all layers, with no manual search involved.

Methodology

In this section, we elaborate on the proposed mixed-precision post-training quantization scheme for the vision transformer.

The similarity-aware quantization for linear layers and ranking-aware quantization for self-attention layers are presented.

In addition, the bias correction method for optimization and the mixed-precision quantization based on nuclear norm of the attention map and output feature are introduced.

Preliminaries

The input to the first transformer layer is
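
In the standard vision transformer formulation, this input is usually written as the sequence of linearly embedded patches plus a position embedding. The symbols x_class (class token), x_p^i (the i-th flattened patch), n (number of patches), and E^pos (position embedding) are assumed notation; only the linear embedding W^E appears in these notes.

```latex
X_1 = \left[\, x_{\text{class}};\; x_p^{1} W^{E};\; x_p^{2} W^{E};\; \dots;\; x_p^{n} W^{E} \,\right] + E^{\text{pos}}
```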

For weight quantization, we quantize the weights W^Q, W^K, W^V, W^O, W^1, W^2, as well as the linear embedding W^E.

Besides these weights, we also quantize the inputs of all linear layers and matrix multiplication operations. Following the methods in [22, 34], we do not quantize the softmax operation and layer normalization, because the parameters contained in these operations are negligible and quantizing them may bring significant accuracy degradation.

Optimization for Post-Training Quantization

The choice of quantization intervals is critical for quantization and one popular option is to use a uniform quantization function, where the data range is equally split:
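
As a rough illustration, a uniform quantizer with interval Δ and bit-width b can be sketched in NumPy as below. The signed clipping range [−2^(b−1), 2^(b−1) − 1] and the function names are assumptions of this sketch.

```python
import numpy as np

def uniform_quantize(y, delta, bits):
    """Round y / delta to the nearest integer and clip to the signed b-bit range."""
    q_min, q_max = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return np.clip(np.round(y / delta), q_min, q_max)

def dequantize(q, delta):
    """Map integer codes back to the real-valued grid."""
    return q * delta

y = np.array([-1.0, -0.26, 0.1, 0.49, 3.0])
q = uniform_quantize(y, delta=0.25, bits=4)
print(q)                     # integer codes
print(dequantize(q, 0.25))   # values on the quantization grid
```

The last input (3.0) falls outside the representable range and is clipped to the largest code, which shows how the interval Δ also fixes the clipping threshold.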

Similarity-Aware Quantization for Linear Operation

The original output of a linear operation and its quantized counterpart are as follows:

It can be seen that the quantization intervals actually control the clipping thresholds in the quantization process, which greatly affects the similarity between the original output feature maps and the quantized feature maps.

In the l-th transformer layer, the similarity-aware quantization can be formulated as:
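
A hedged sketch of this objective for a single linear operation: quantize inputs and weights, rescale the integer product by both intervals, and score the result against the full-precision output. The Pearson correlation is used here as the similarity measure, which is an assumption of this sketch, as are the helper names and the random test data.

```python
import numpy as np

def uniform_quantize(y, delta, bits):
    q_min, q_max = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return np.clip(np.round(y / delta), q_min, q_max)

def quantized_linear(x, w, delta_x, delta_w, bits):
    """Integer matmul of quantized inputs and weights, rescaled to real values."""
    xq = uniform_quantize(x, delta_x, bits)
    wq = uniform_quantize(w, delta_w, bits)
    return (xq @ wq) * delta_x * delta_w

def pearson_similarity(a, b):
    """Pearson correlation between two feature maps, flattened."""
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8))   # hypothetical calibration inputs
w = rng.normal(size=(8, 4))    # hypothetical layer weights
o = x @ w
for bits in (4, 8):
    q_max = 2 ** (bits - 1) - 1
    o_hat = quantized_linear(x, w, np.abs(x).max() / q_max,
                             np.abs(w).max() / q_max, bits)
    print(bits, pearson_similarity(o, o_hat))
```

The quantity printed for each bit-width is what the search over the two intervals tries to maximize, averaged over the calibration samples.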

Ranking-Aware Quantization for Self-Attention

The self-attention layer is the critical component of the transformer because it computes the global relevance of the features, which distinguishes the transformer from convolutional neural networks. For the calculation of self-attention (Eq. 3), we empirically find that the relative order of the attention map changes after quantization, as shown in Fig 1, which can cause significant performance degradation. Thus, a ranking loss is introduced to solve this problem during the quantization process:
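
One way to express such a ranking loss is a pairwise hinge penalty: for every pair of attention scores, the quantized pair should keep the sign of the original difference. The sketch below assumes this pairwise hinge form, a margin parameter `theta`, and an input layout of shape (heads, n); all three are assumptions for illustration.

```python
import numpy as np

def ranking_loss(attn, attn_q, theta=0.0):
    """Pairwise hinge loss that penalizes order changes after quantization.

    attn, attn_q: arrays of shape (heads, n) with original and quantized
    attention scores. theta is a hypothetical margin.
    """
    loss = 0.0
    n = attn.shape[1]
    for i in range(n - 1):
        for j in range(i + 1, n):
            sign = np.sign(attn[:, i] - attn[:, j])        # original order
            margin = (attn_q[:, i] - attn_q[:, j]) * sign  # > 0 if order kept
            loss += np.maximum(theta - margin, 0.0).sum()
    return float(loss)

a = np.array([[0.5, 0.3, 0.2]])
kept = np.array([[0.6, 0.3, 0.1]])      # same relative order
swapped = np.array([[0.3, 0.5, 0.2]])   # first two scores change order
print(ranking_loss(a, kept), ranking_loss(a, swapped))
```

Only the swapped pair contributes to the loss, so the penalty grows with how badly the order is violated rather than with the raw quantization error.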

To solve the above optimization problem, we present a simple but efficient alternating search method for the uniform quantization of transformer layers.

We alternately fix one of \Delta^W_l and \Delta^X_l and search for the optimal value of the other.

Moreover, for fast convergence, both intervals are initialized from the maximum of the weights or inputs, respectively. For the search space, we linearly divide the interval [α\Delta_l, β\Delta_l] into C candidate options and conduct a simple search over them.
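
The alternating search can be sketched as below for one linear operation. The values of `alpha`, `beta`, `num_candidates`, and `rounds` are hypothetical, the Pearson correlation stands in for the similarity objective, and the current interval is kept among the candidates so that a step never lowers the score; these are choices of this sketch.

```python
import numpy as np

def uniform_quantize(y, delta, bits):
    q_min, q_max = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return np.clip(np.round(y / delta), q_min, q_max)

def similarity(x, w, delta_x, delta_w, bits):
    """Pearson correlation between full-precision and quantized outputs."""
    o = x @ w
    o_hat = (uniform_quantize(x, delta_x, bits)
             @ uniform_quantize(w, delta_w, bits)) * delta_x * delta_w
    a, b = o.ravel() - o.mean(), o_hat.ravel() - o_hat.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def alternating_search(x, w, bits, alpha=0.5, beta=1.2,
                       num_candidates=20, rounds=3):
    q_max = 2 ** (bits - 1) - 1
    init_x = np.abs(x).max() / q_max   # initialize from the maximum value
    init_w = np.abs(w).max() / q_max
    delta_x, delta_w = init_x, init_w
    for _ in range(rounds):
        # Fix delta_x and pick the best delta_w among the candidates.
        cands = np.append(np.linspace(alpha * init_w, beta * init_w,
                                      num_candidates), delta_w)
        delta_w = max(cands, key=lambda d: similarity(x, w, delta_x, d, bits))
        # Fix delta_w and pick the best delta_x among the candidates.
        cands = np.append(np.linspace(alpha * init_x, beta * init_x,
                                      num_candidates), delta_x)
        delta_x = max(cands, key=lambda d: similarity(x, w, d, delta_w, bits))
    return float(delta_x), float(delta_w)

rng = np.random.default_rng(0)
x, w = rng.normal(size=(32, 8)), rng.normal(size=(8, 4))
print(alternating_search(x, w, bits=4))
```

Each half-step is a one-dimensional grid search, so the total cost is roughly 2 × rounds × C evaluations of the layer on the calibration data.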

Bias Correction

Suppose the quantization errors of the weights and inputs are defined as:

If the expectation of the error for output is not zero, then the mean of the output will change. This shift in distribution may lead to detrimental behavior in the following layers. We can correct this change by seeing that:

Thus, subtracting the expected error on the output from the biased output ensures that the mean for each output unit is preserved. For implementation, the expected error can be computed using the calibration data and subtracted from the layer’s bias parameter, since the expected error vector has the same shape as the layer’s output.
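
A minimal sketch of this correction for a single linear layer, assuming the layer computes `x @ w + bias` and that a small calibration batch is available. The helper names, the intervals, and the random data are hypothetical.

```python
import numpy as np

def uniform_quantize(y, delta, bits):
    q_min, q_max = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return np.clip(np.round(y / delta), q_min, q_max)

def quantized_output(x, w, delta_x, delta_w, bits):
    return (uniform_quantize(x, delta_x, bits)
            @ uniform_quantize(w, delta_w, bits)) * delta_x * delta_w

def corrected_bias(x_calib, w, bias, delta_x, delta_w, bits):
    """Subtract the expected output error, estimated on calibration data."""
    o = x_calib @ w
    o_hat = quantized_output(x_calib, w, delta_x, delta_w, bits)
    expected_error = (o_hat - o).mean(axis=0)   # one value per output unit
    return bias - expected_error

rng = np.random.default_rng(0)
x = np.abs(rng.normal(size=(64, 8)))   # non-negative inputs, so clipping biases the mean
w = rng.normal(size=(8, 4))
bias = np.zeros(4)
new_bias = corrected_bias(x, w, bias, delta_x=0.2, delta_w=0.2, bits=4)
print(new_bias)
```

On the calibration batch itself, the mean of the corrected quantized output matches the mean of the full-precision output exactly; on unseen data the match is only approximate.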

Mixed-Precision Quantization for Vision Transformer

Considering the unique structure of the transformer layer, we assign the same bit-width to all operations within an MSA or MLP module. This is also friendly to hardware implementation, since the weights and inputs share the same bit-width.

The nuclear norm is the sum of singular values, which represents the data relevance of the matrix.
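
For reference, the nuclear norm can be computed directly from the singular values. The sketch below uses `numpy.linalg.svd`; the two example matrices are arbitrary.

```python
import numpy as np

def nuclear_norm(m):
    """Sum of the singular values of a matrix."""
    return float(np.linalg.svd(m, compute_uv=False).sum())

diag = np.diag([3.0, 2.0, 1.0])             # singular values 3, 2, 1
rank1 = np.outer([1.0, 2.0], [3.0, 4.0])    # a single non-zero singular value
print(nuclear_norm(diag))
print(nuclear_norm(rank1), np.linalg.norm(rank1, ord="nuc"))
```

`numpy.linalg.norm` with `ord="nuc"` returns the same quantity and can be used instead of the explicit SVD.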

Inspired by the method in [10], we utilize a Pareto frontier approach to determine the bit-width. The main idea is to rank the candidate bit-width configurations by the total second-order perturbation that each one causes, according to the following metric:

Here \sigma_j is the j-th singular value, i.e., the j-th diagonal entry of the singular value matrix.
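
The selection step can be sketched as follows, assuming that the sensitivity of a module is the nuclear norm of its full-precision output multiplied by the squared quantization error, and that the configuration with the smallest total sensitivity under a model-size budget is chosen. The metric form, the function names, and all numbers below are assumptions for illustration.

```python
import itertools
import numpy as np

def layer_sensitivity(y, y_hat):
    """Nuclear norm of the output times the squared quantization error."""
    nuc = np.linalg.svd(y, compute_uv=False).sum()
    return float(nuc * np.sum((y_hat - y) ** 2))

def choose_bit_widths(sensitivity, num_params, bit_choices, size_budget_bits):
    """Pick the per-module bit-widths with the lowest total sensitivity.

    sensitivity[i][b] is the sensitivity of module i at b bits and
    num_params[i] is its parameter count (both hypothetical inputs).
    """
    best, best_cost = None, float("inf")
    for config in itertools.product(bit_choices, repeat=len(num_params)):
        size = sum(n * b for n, b in zip(num_params, config))
        if size > size_budget_bits:
            continue   # configuration exceeds the model-size budget
        cost = sum(sensitivity[i][b] for i, b in enumerate(config))
        if cost < best_cost:
            best, best_cost = config, cost
    return best, best_cost

sens = [{4: 5.0, 8: 0.5}, {4: 1.0, 8: 0.1}]   # made-up sensitivities
print(choose_bit_widths(sens, num_params=[100, 100],
                        bit_choices=(4, 8), size_budget_bits=1200))
```

In this toy example the first module is far more sensitive, so it receives 8 bits while the second is reduced to 4. Exhaustive enumeration grows exponentially with the number of modules, so it is only practical for small search spaces.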