RepViT: Revisiting Mobile CNN From ViT Perspective

Abstract

The architectural disparities between lightweight ViTs and lightweight CNNs have not been adequately examined.

We incrementally enhance the mobile friendliness of a standard lightweight CNN, specifically MobileNetV3, by integrating the efficient architectural choices of lightweight ViTs.

This results in a new family of pure lightweight CNNs, namely RepViT.

Introduction

Efficient Design Principles

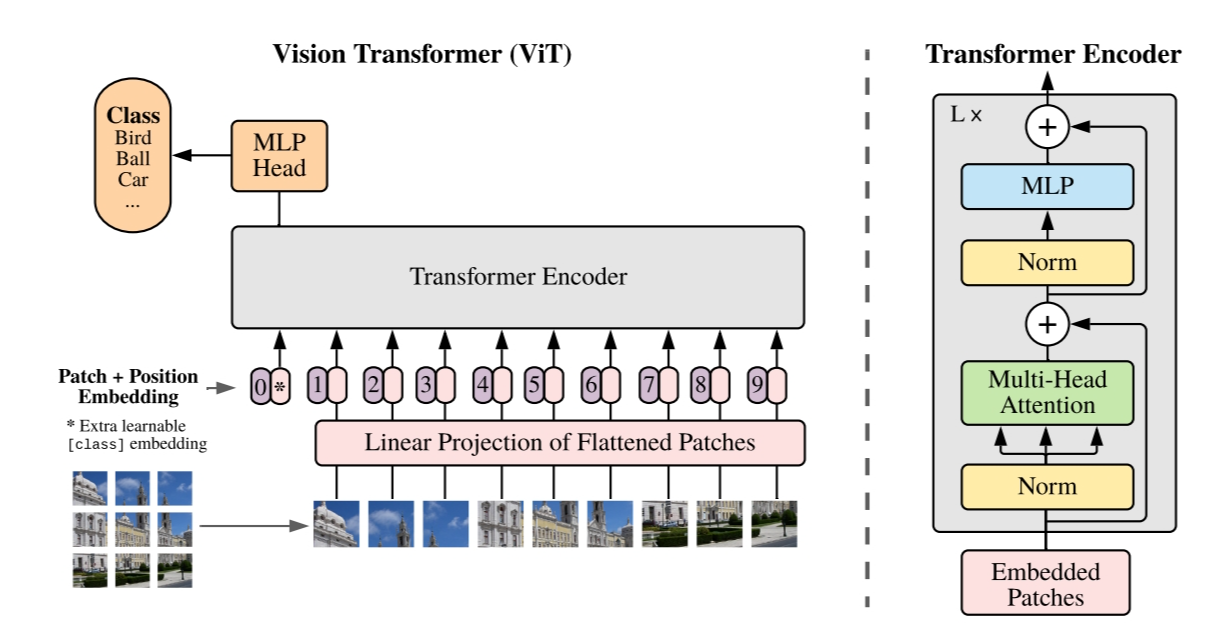

ViT

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

A pure Transformer applied directly to sequences of image patches can perform very well on image classification tasks.

We split an image into patches and provide the sequence of linear embeddings of these patches as an input to a Transformer. Image patches are treated the same way as tokens (words) in an NLP application. We train the model on image classification in a supervised fashion.
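The patch-splitting step can be sketched in plain Python. This is an illustrative sketch, not the ViT reference code: the image is assumed to be a nested list indexed `[row][col][channel]`, the function name `patchify` is hypothetical, and the learned linear embedding that ViT applies to each flattened patch afterward is omitted.

```python
from typing import List

# Assumed layout: image[row][col][channel].
Image = List[List[List[float]]]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch x patch tiles
    and flatten each tile (row-major) into one token vector."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            token = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    token.extend(image[r][c])
            tokens.append(token)
    return tokens


# A 224 x 224 RGB image with 16 x 16 patches gives 14 * 14 = 196 tokens,
# each of length 16 * 16 * 3 = 768.
img = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
tokens = patchify(img, 16)
print(len(tokens), len(tokens[0]))  # 196 768
```

The sequence length grows quadratically as the patch size shrinks, which is one reason ViTs use fairly large patches.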

Image Classification

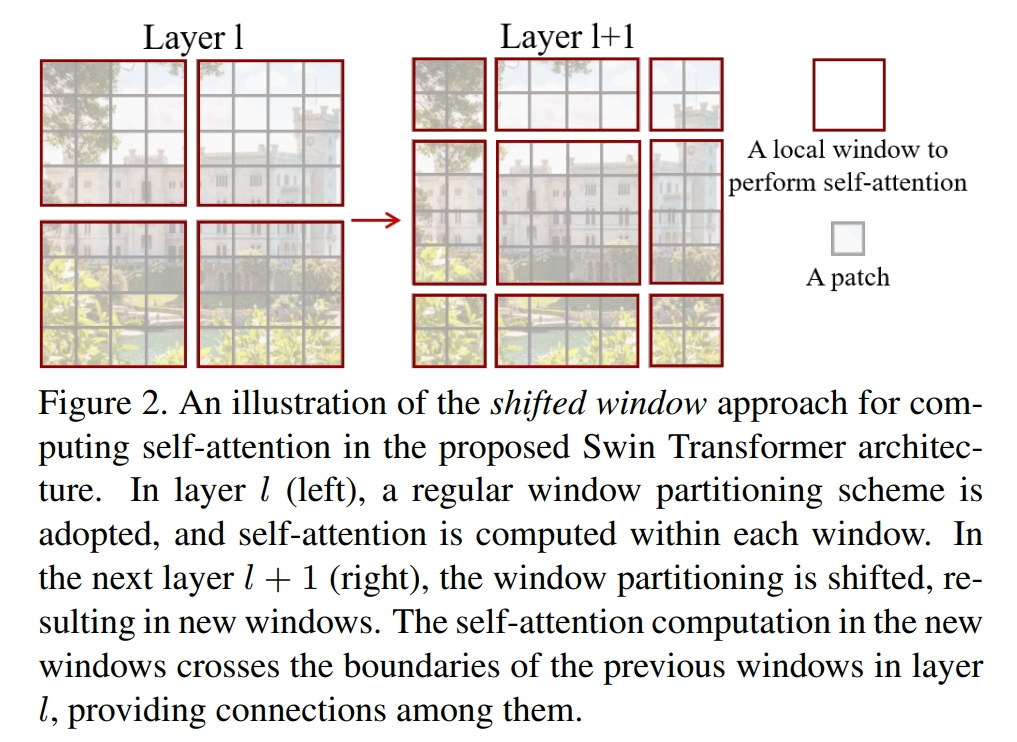

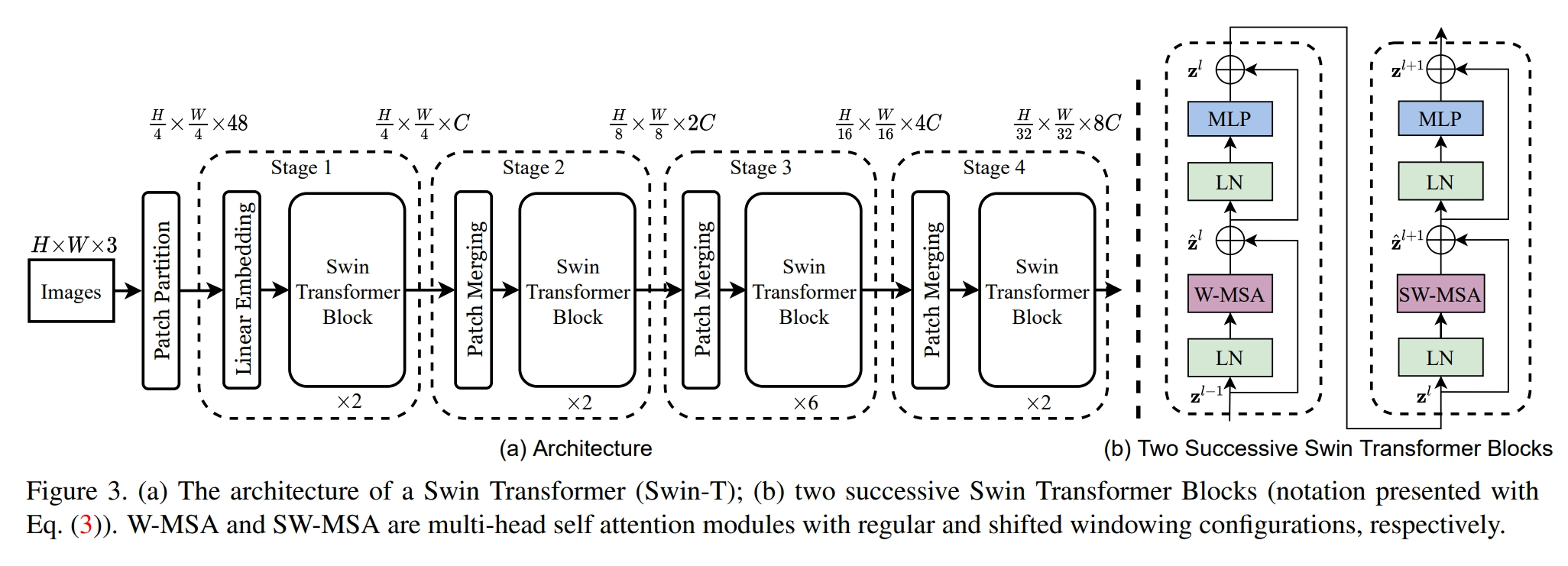

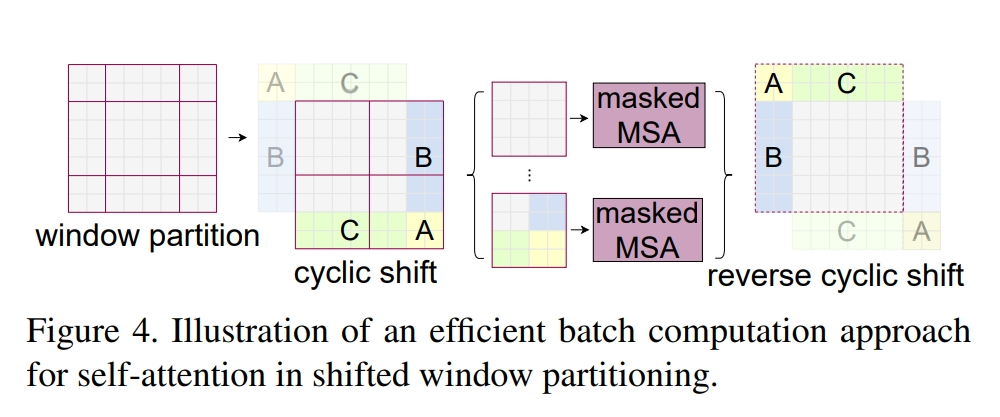

Swin Transformer

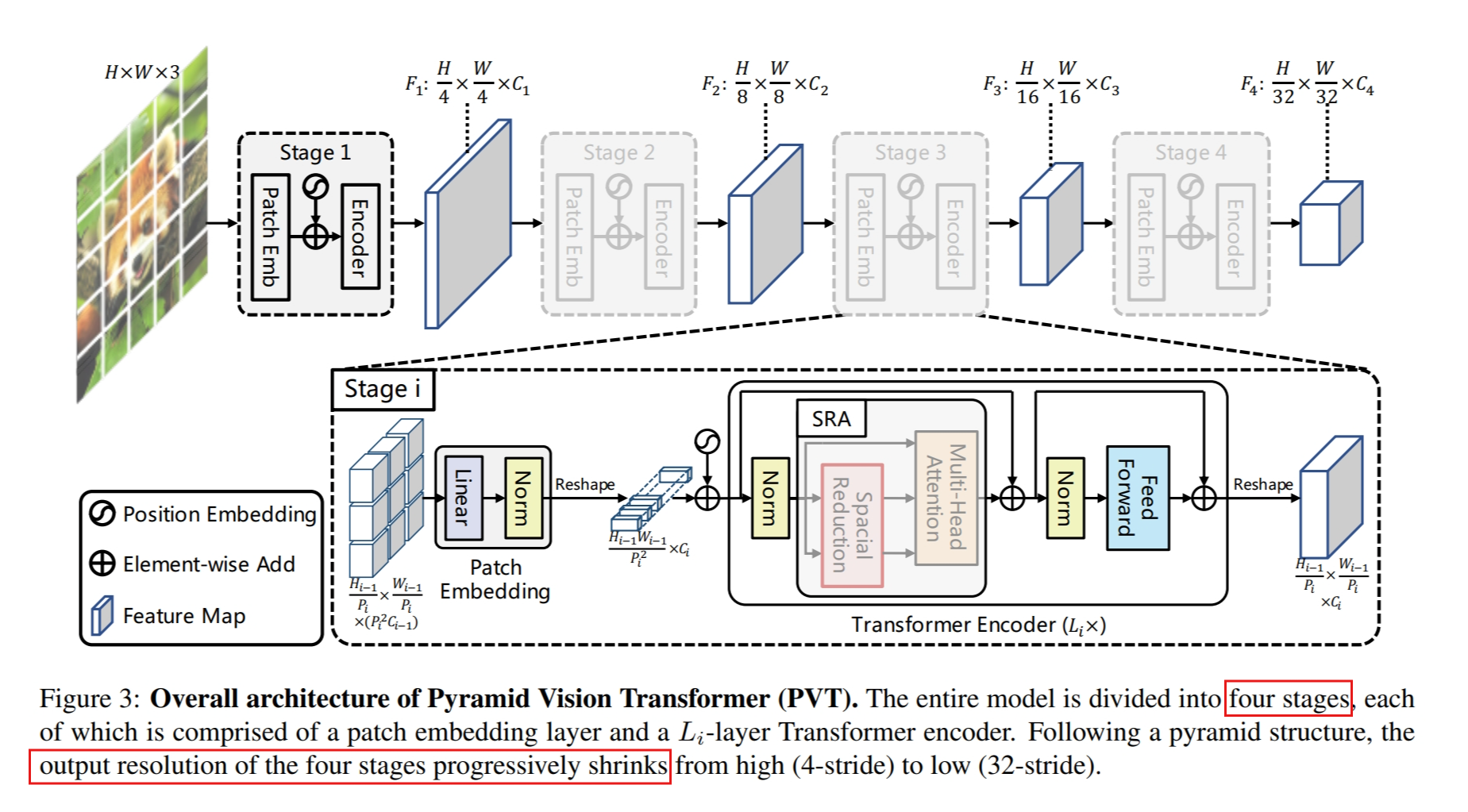

Pyramid Vision Transformer

Semantic Segmentation

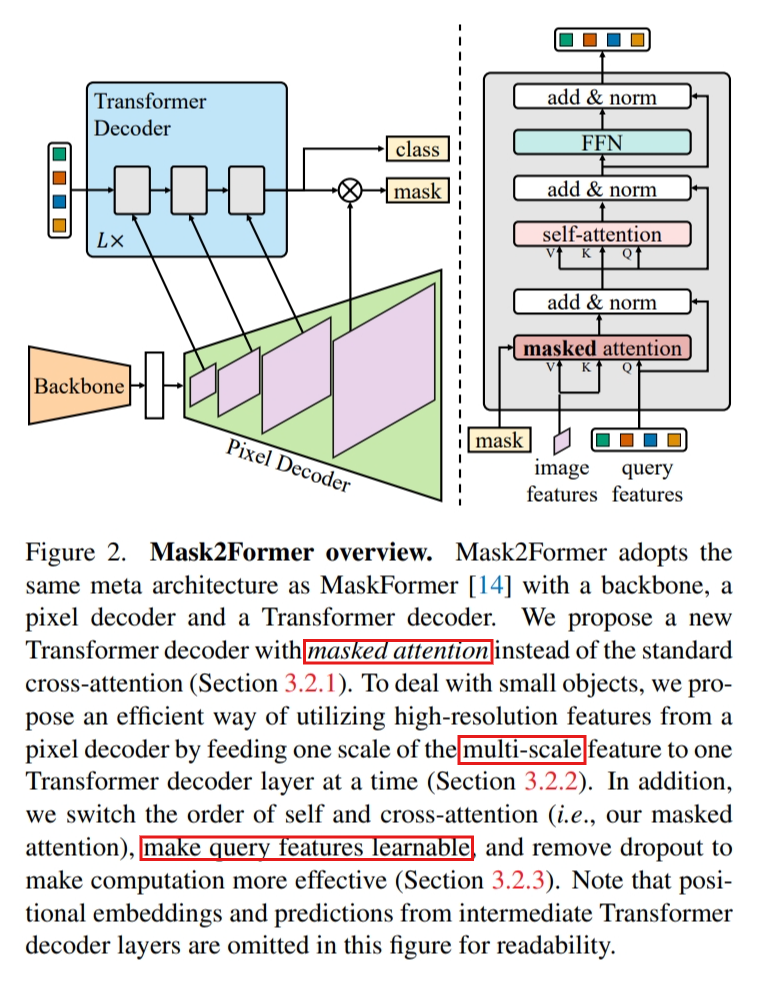

Masked-attention Mask Transformer for Universal Image Segmentation

https://arxiv.org/pdf/2112.01527.pdf

Unifies panoptic, instance, and semantic segmentation.

Masks are learned through training.

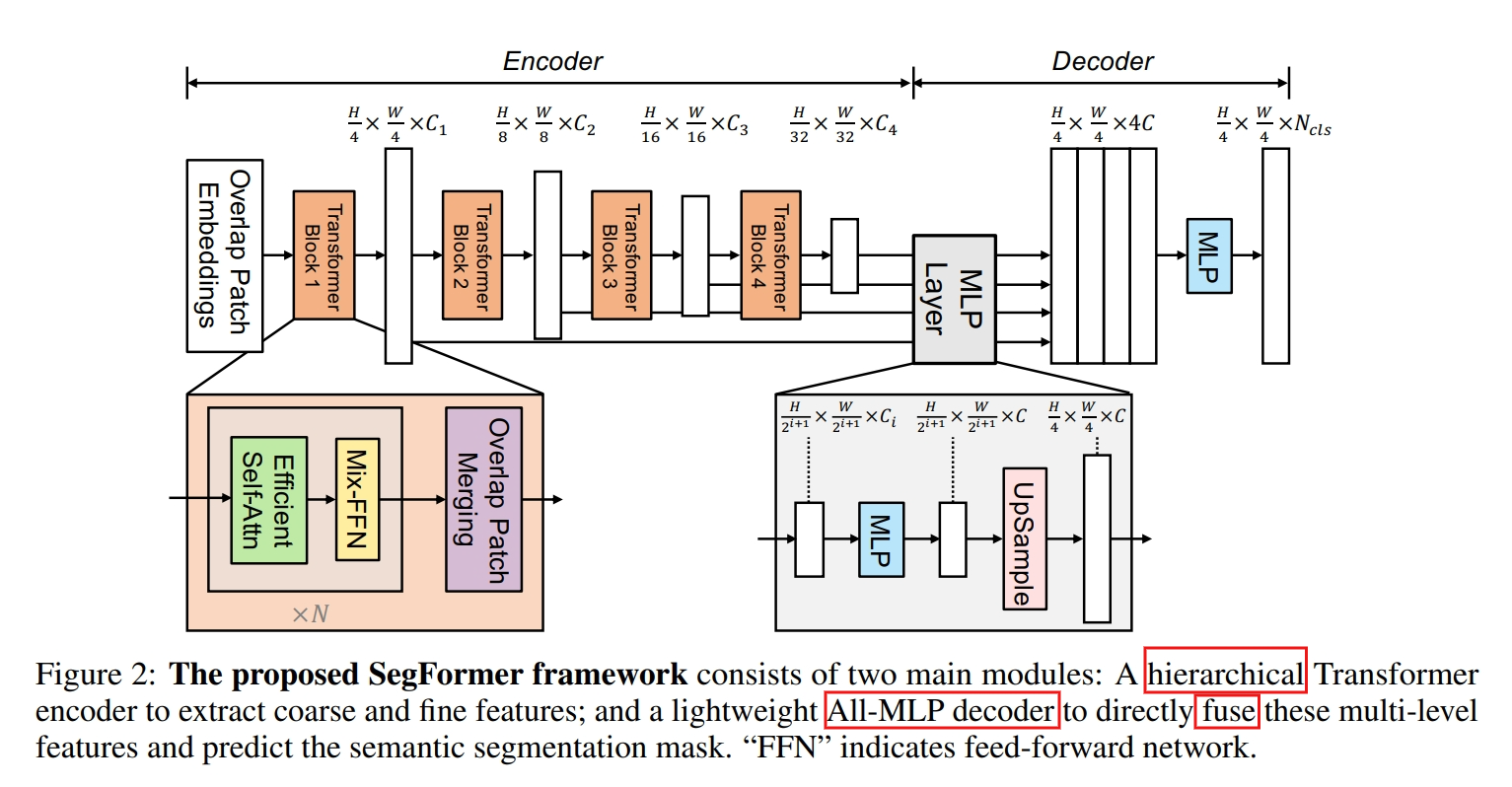

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers

https://arxiv.org/pdf/2105.15203.pdf

Object Detection

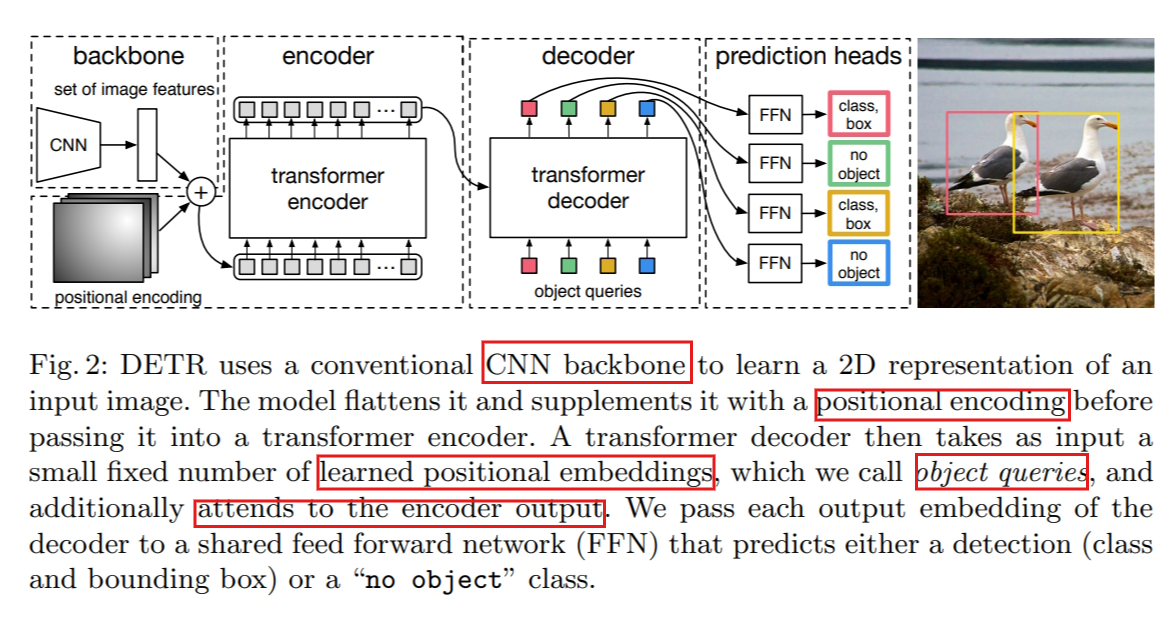

End-to-End Object Detection with Transformers

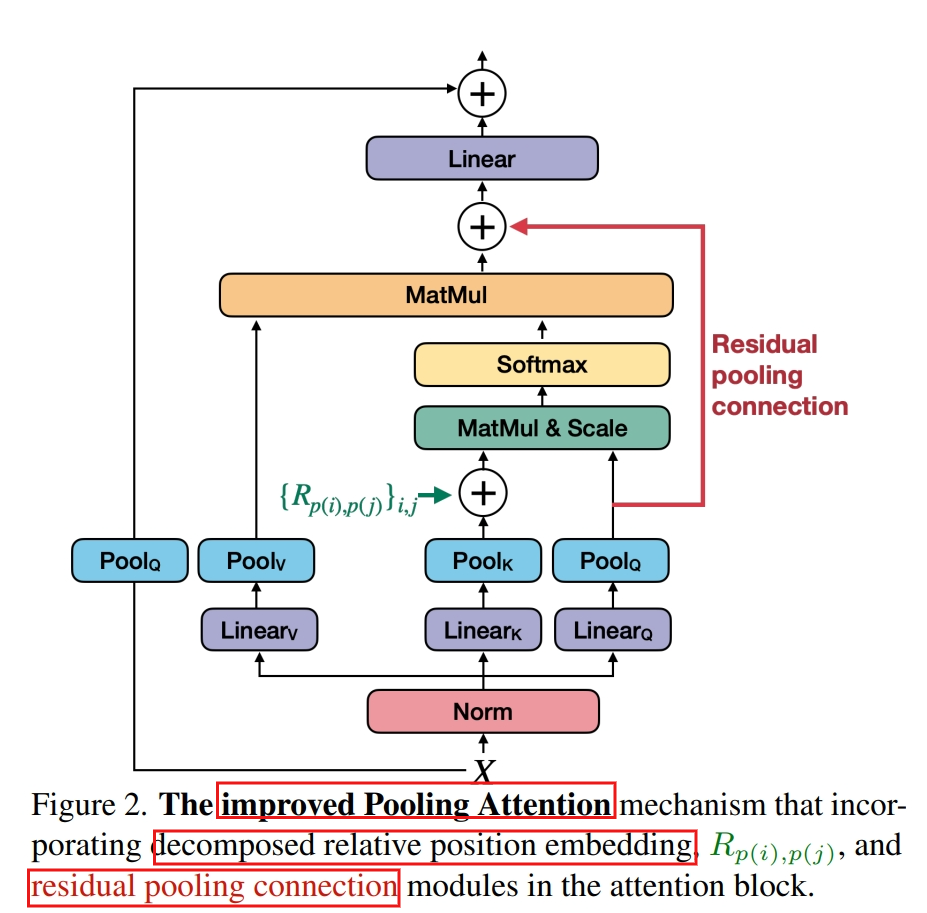

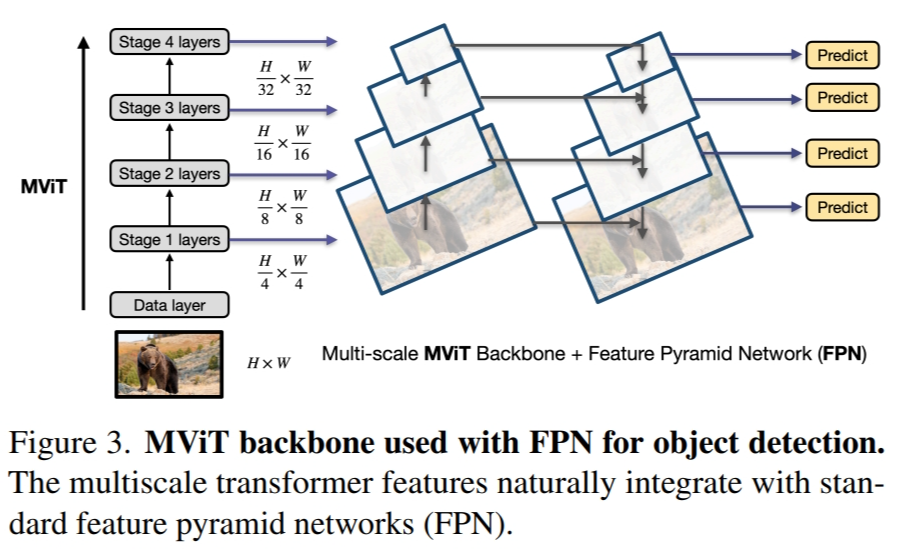

MViTv2: Improved Multi-scale Vision Transformers for Classification and Detection

Enhancing Computational Efficiency of Vision Transformers for Mobile Devices through Effective Design Principles

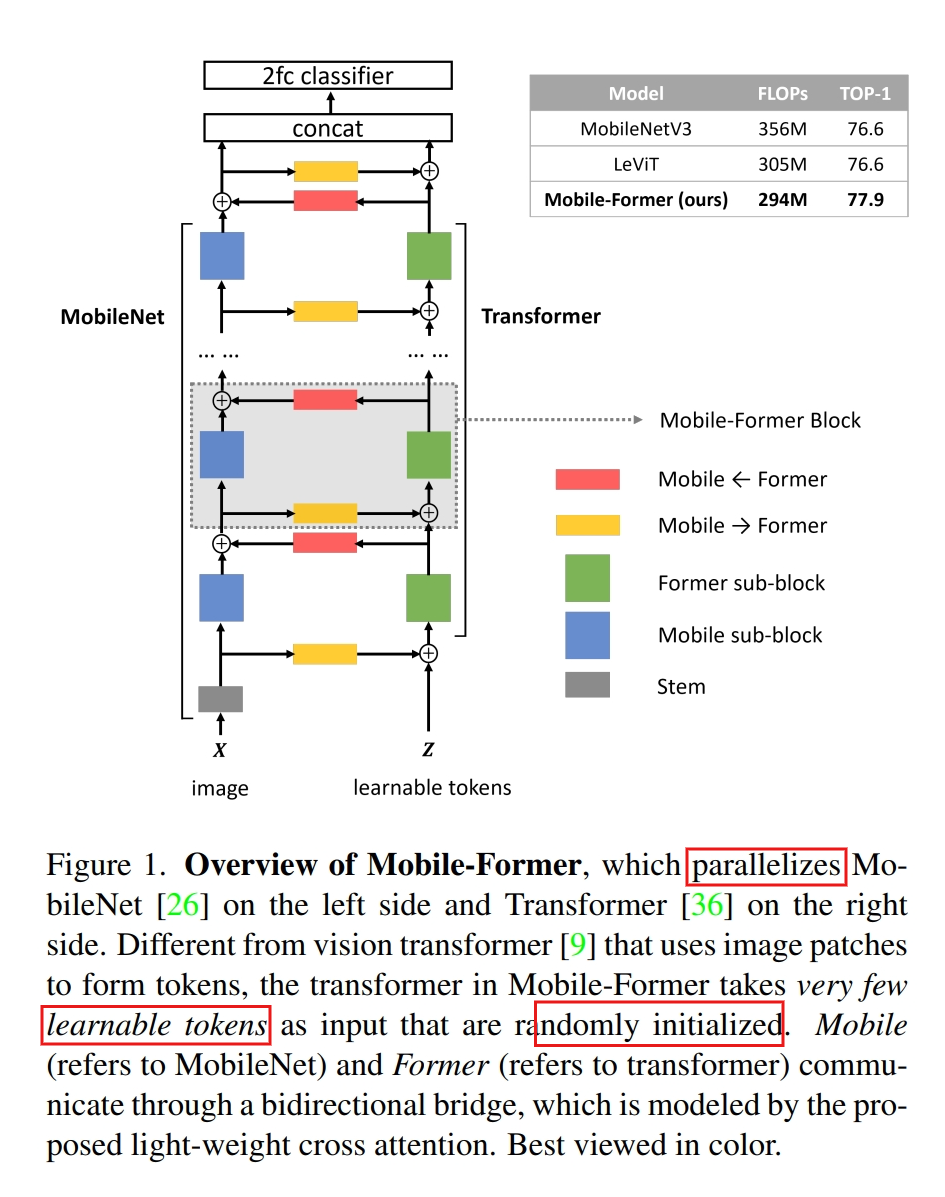

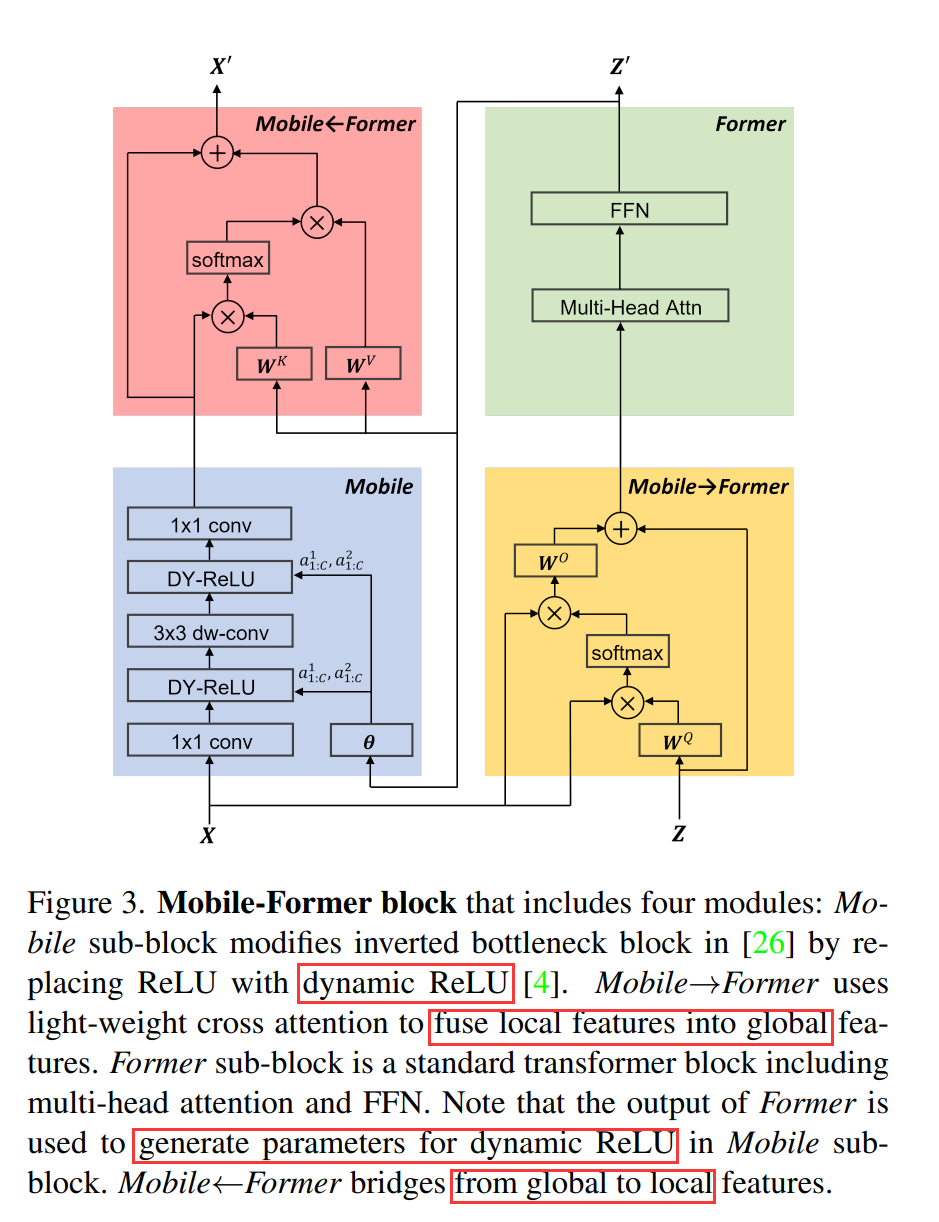

Mobile-Former: Bridging MobileNet and Transformer

Rethinking Vision Transformers for MobileNet Size and Speed

Proposes EfficientFormerV2.

Token Mixers

Search Space Refinement

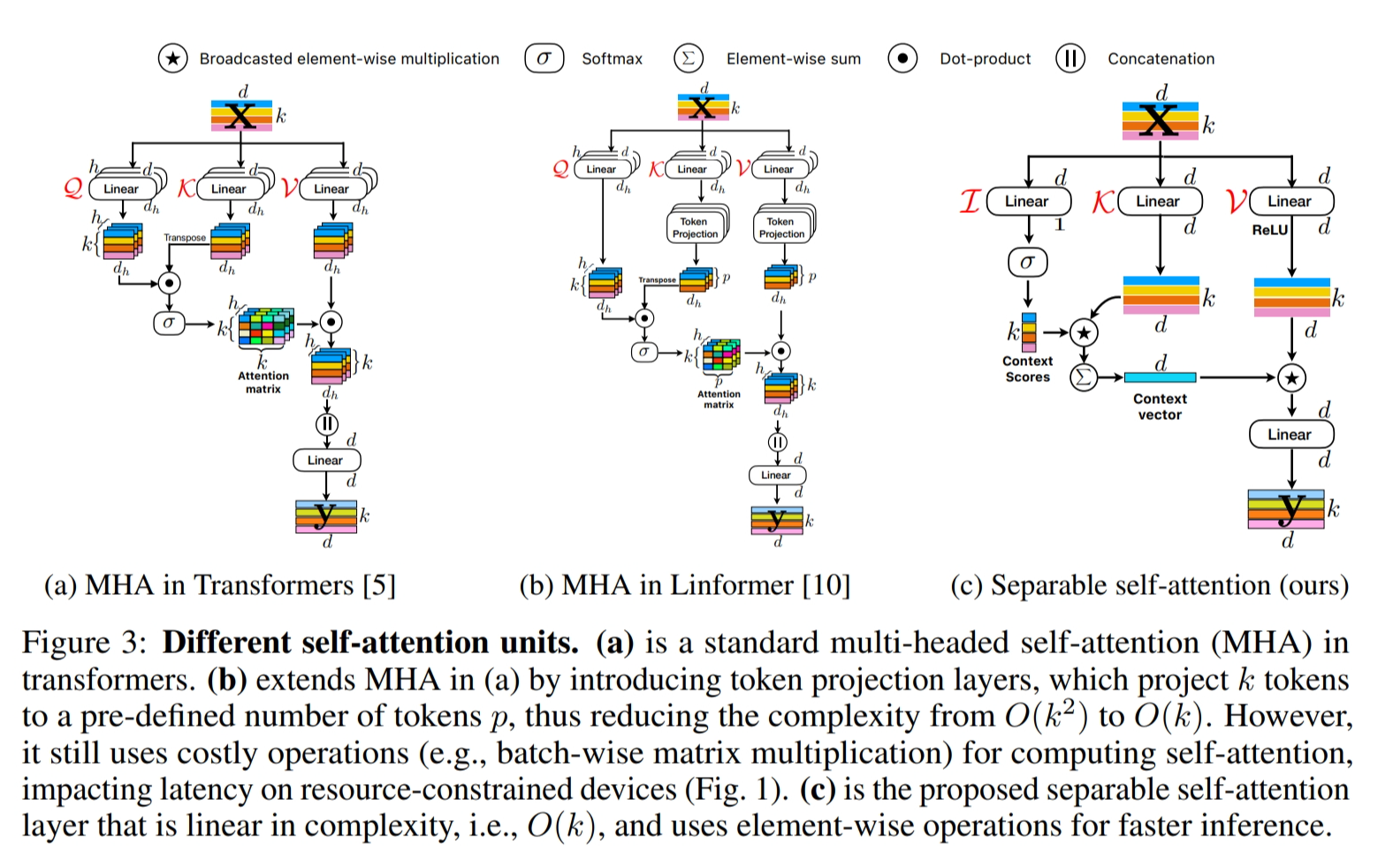

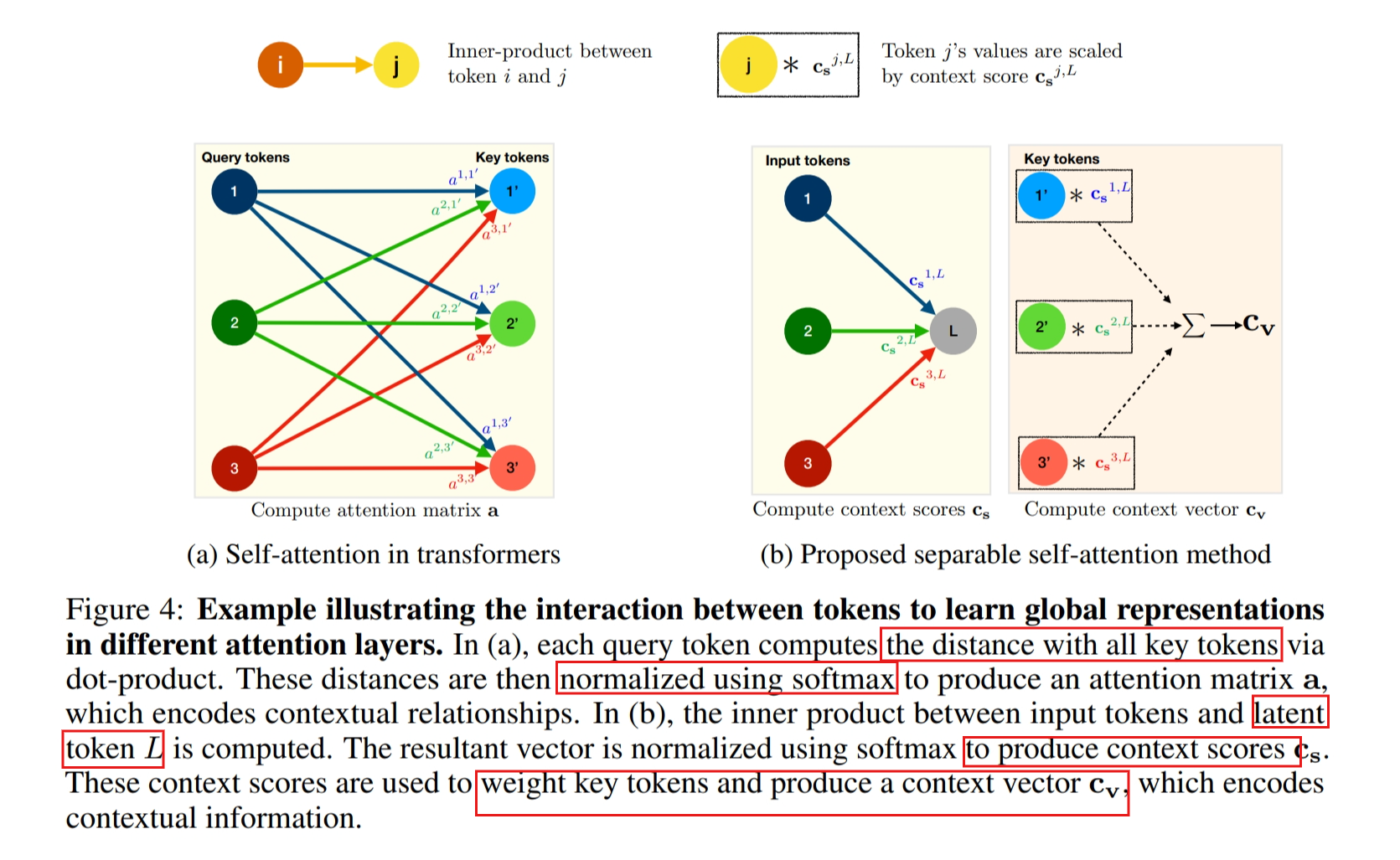

MHSA Improvements

Attention on Higher Resolution

Dual-Path Attention Downsampling (a convolution path and an attention path computed in parallel, then summed)

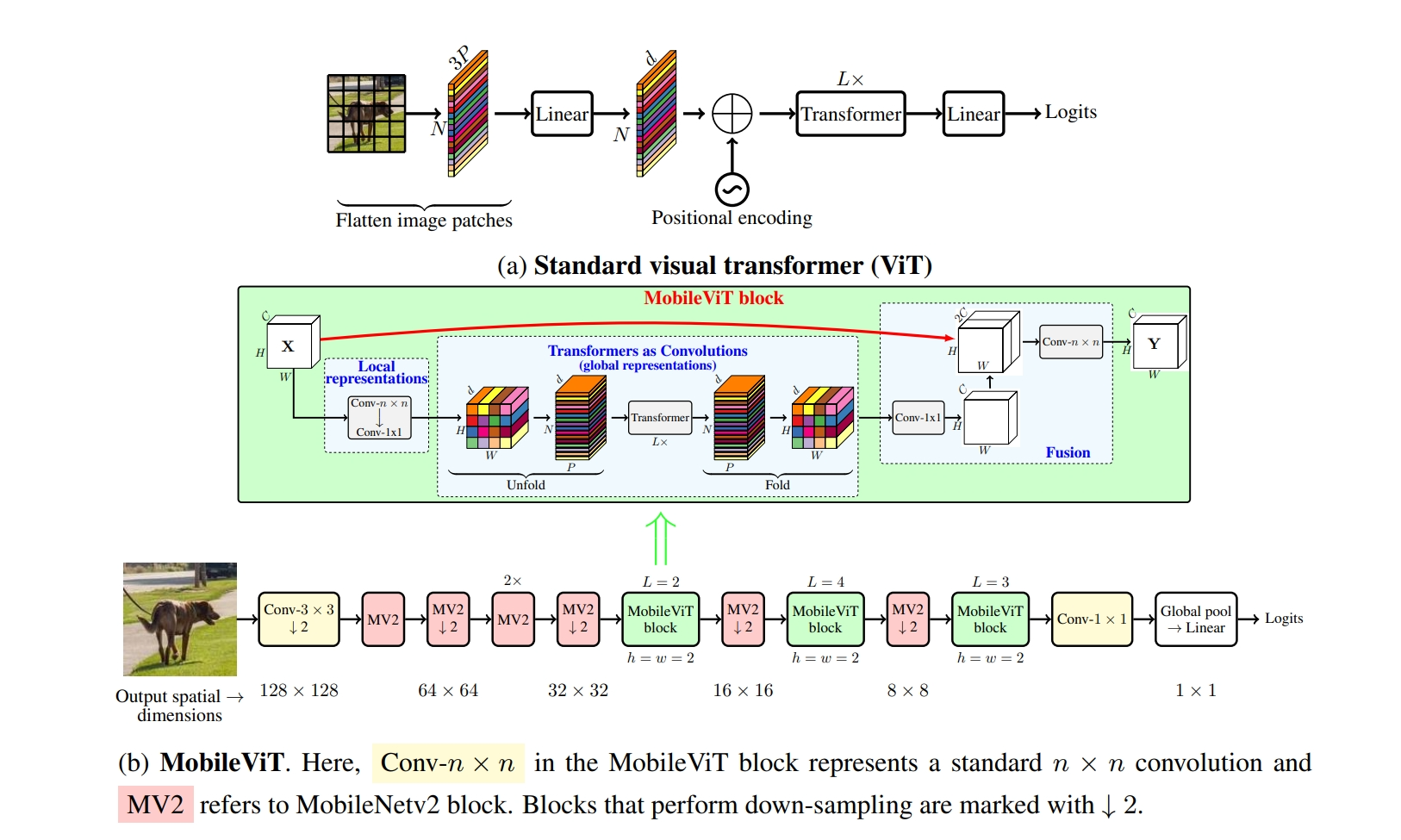

MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer

No need for patch embedding

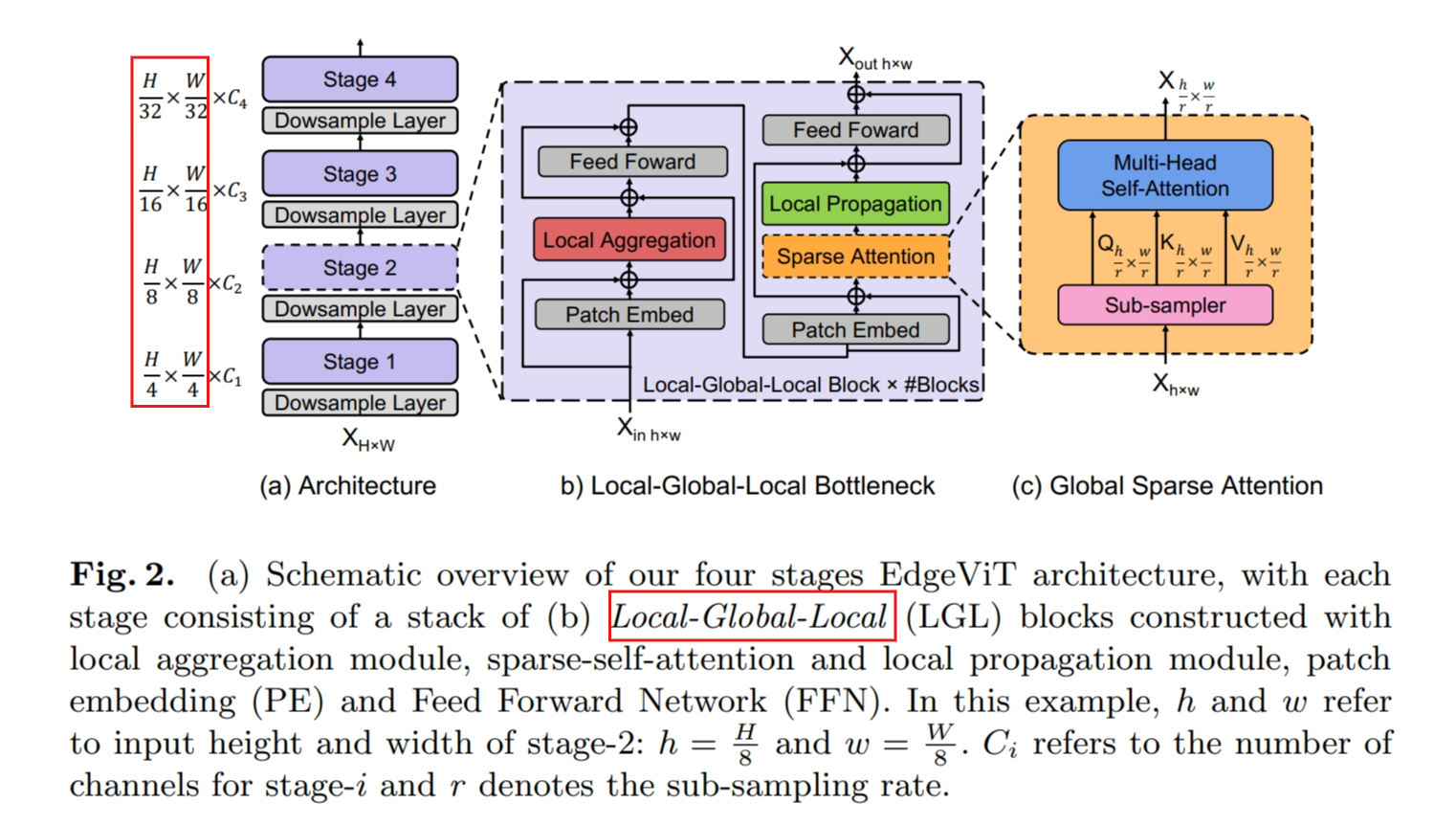

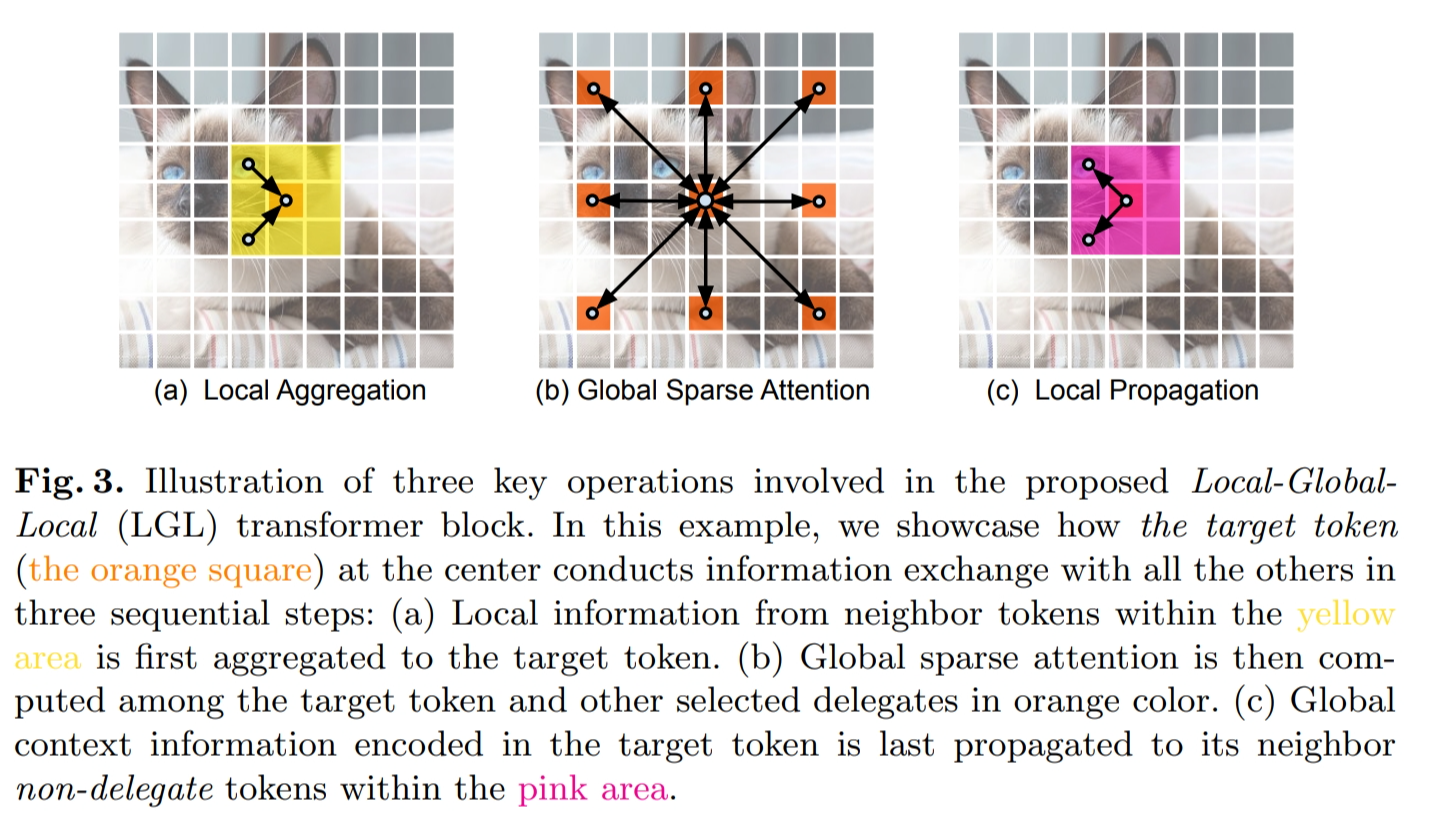

EdgeViTs: Competing Light-weight CNNs on Mobile Devices with Vision Transformers

Separable Self-attention for Mobile Vision Transformers: MobileViTv2

Summary

In this work, we revisit the design of lightweight CNNs by incorporating the architectural choices of lightweight ViTs.

Our research aims to narrow the divide between lightweight CNNs and lightweight ViTs, and to highlight the potential of the former, compared with the latter, for deployment on mobile devices.

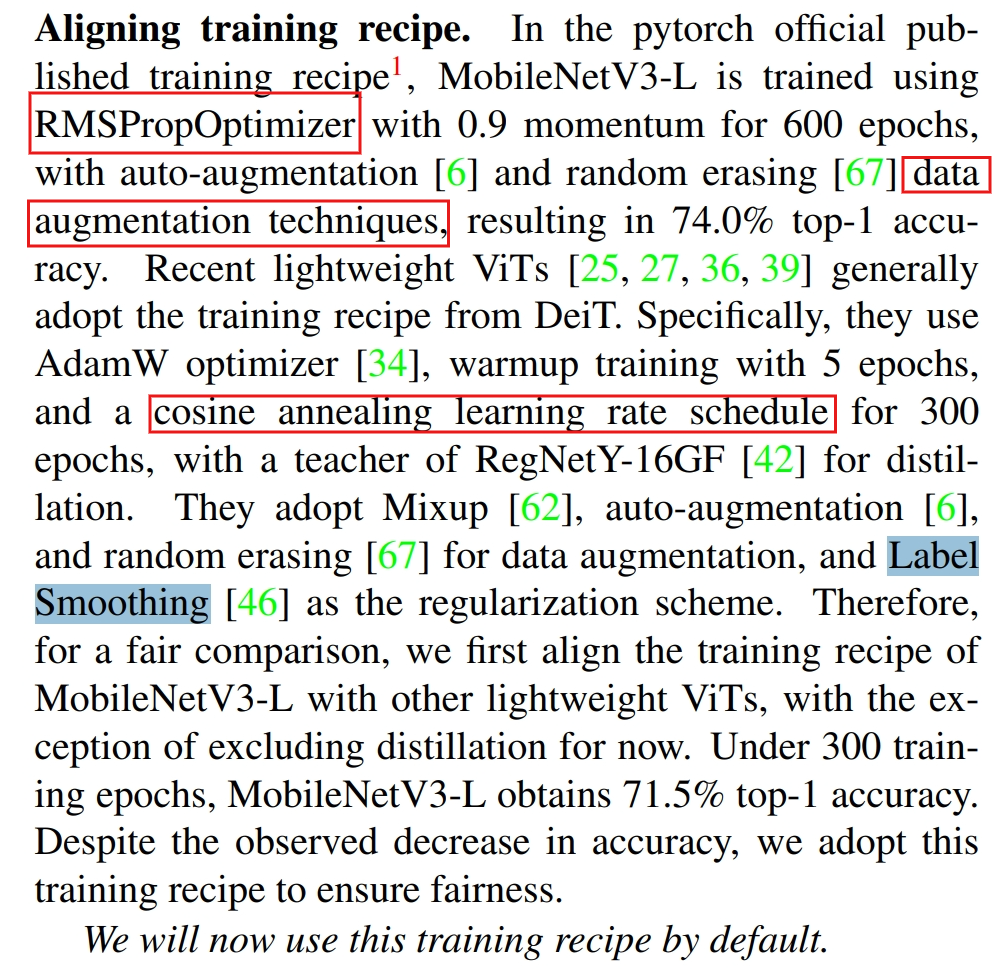

We begin with a standard lightweight CNN, i.e., MobileNetV3-L, and gradually “modernize” its architecture by incorporating the efficient architectural designs of lightweight ViTs.

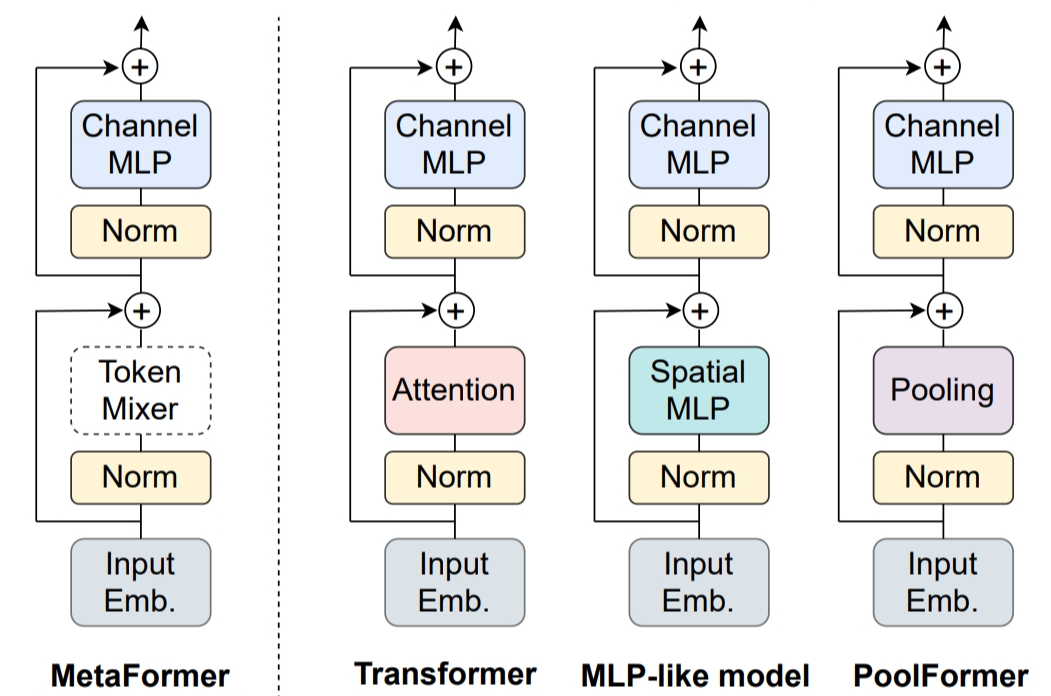

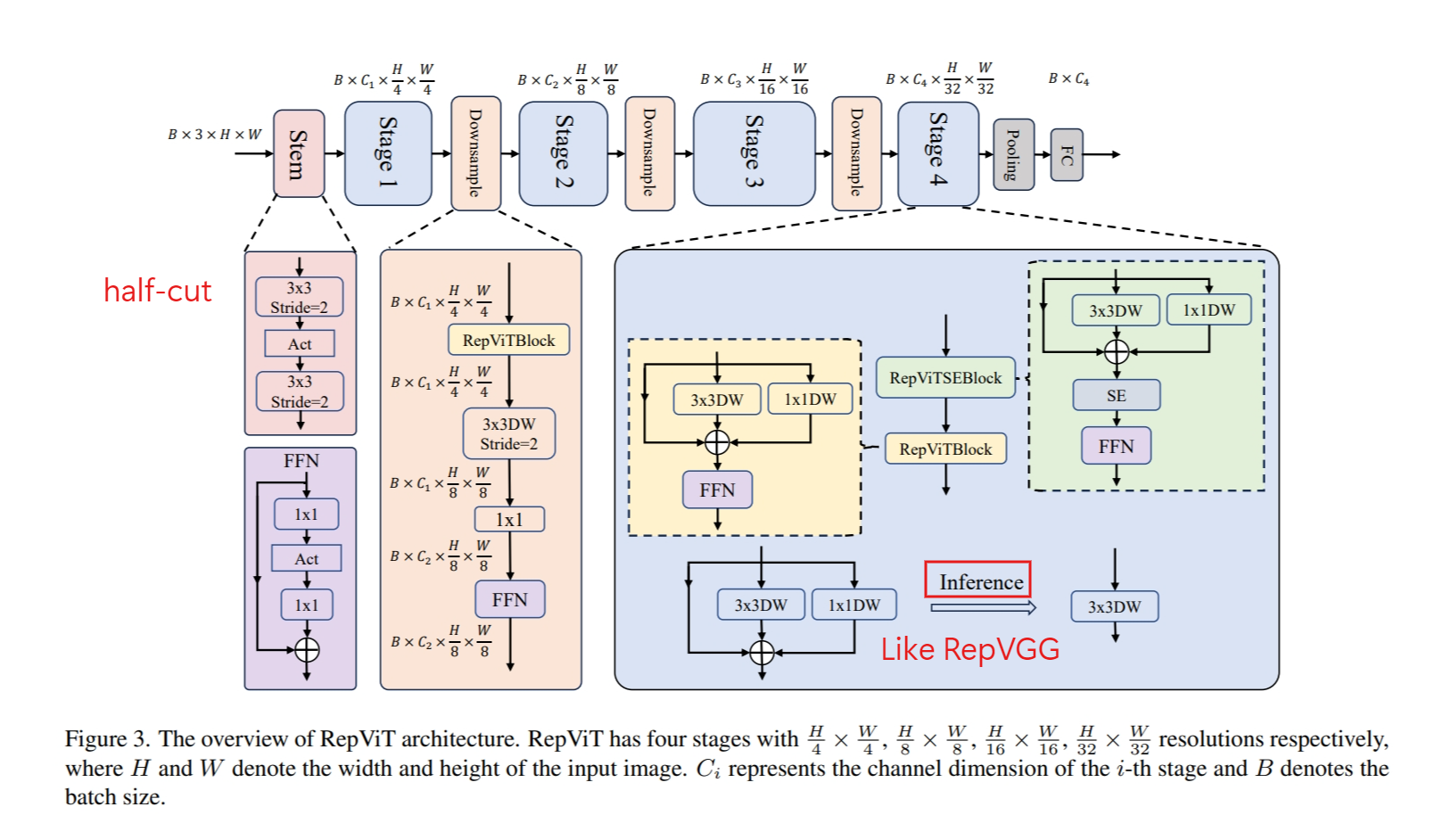

RepViT has a MetaFormer structure, but is composed entirely of convolutions.

Methodology

Preliminary

We utilize the iPhone 12 as the test device and Core ML Tools as the compiler.

We measure the actual on-device latency for models as the benchmark metric.
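The paper's numbers come from Core ML models running on an iPhone 12, which cannot be reproduced in a few lines. As a stand-in, the sketch below shows a generic host-side timing harness in plain Python. The warm-up count, iteration count, and use of the median are assumptions about common benchmarking practice, not the authors' protocol, and `measure_latency_ms` is a hypothetical name.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(run: Callable[[], object], warmup: int = 10, iters: int = 50) -> float:
    """Return the median wall-clock latency of `run` in milliseconds.

    Warm-up calls are discarded so one-off costs (compilation, caching)
    do not distort the result; the median is robust to outliers.
    """
    for _ in range(warmup):
        run()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


# Stand-in workload; a real benchmark would invoke the compiled model instead.
latency = measure_latency_ms(lambda: sum(i * i for i in range(10_000)))
print(f"{latency:.3f} ms")
```

Reporting a median over many runs after warm-up makes latency comparisons between model variants less sensitive to transient system noise.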

We employ GELU activations in the MobileNetV3-L model.
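For reference, GELU weights its input by the standard normal CDF: GELU(x) = x·Φ(x) = 0.5·x·(1 + erf(x/√2)). The plain-Python sketch below implements this exact form next to ReLU for comparison; some frameworks also offer a tanh-based approximation, which is not shown.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x: float) -> float:
    return max(0.0, x)


for v in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={v:+.1f}  relu={relu(v):.4f}  gelu={gelu(v):.4f}")
```

Unlike ReLU, GELU is smooth and lets small negative inputs through with a small negative output, while behaving like the identity for large positive inputs.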

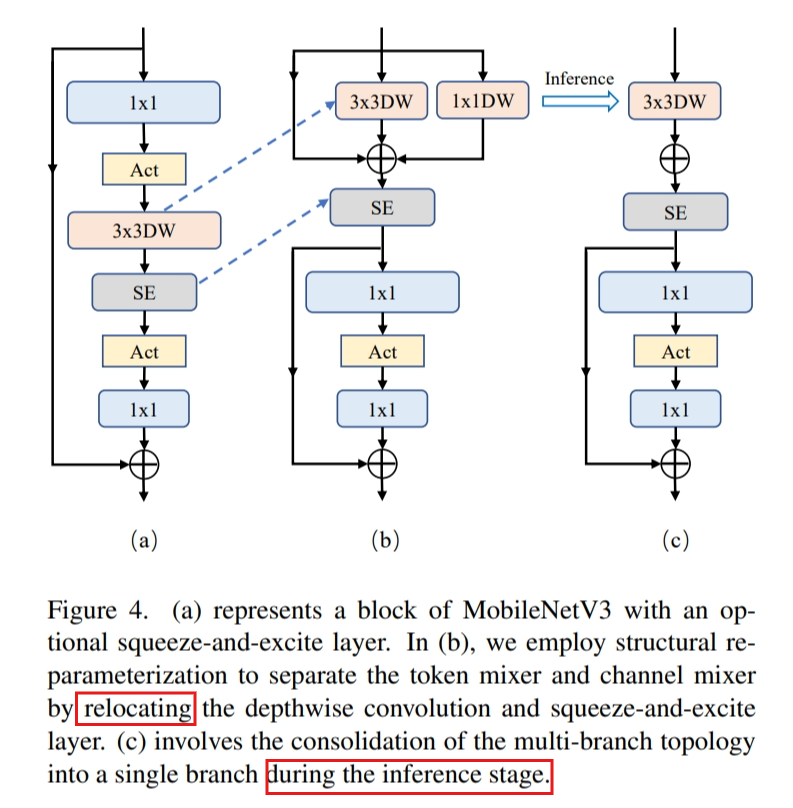

Block Design

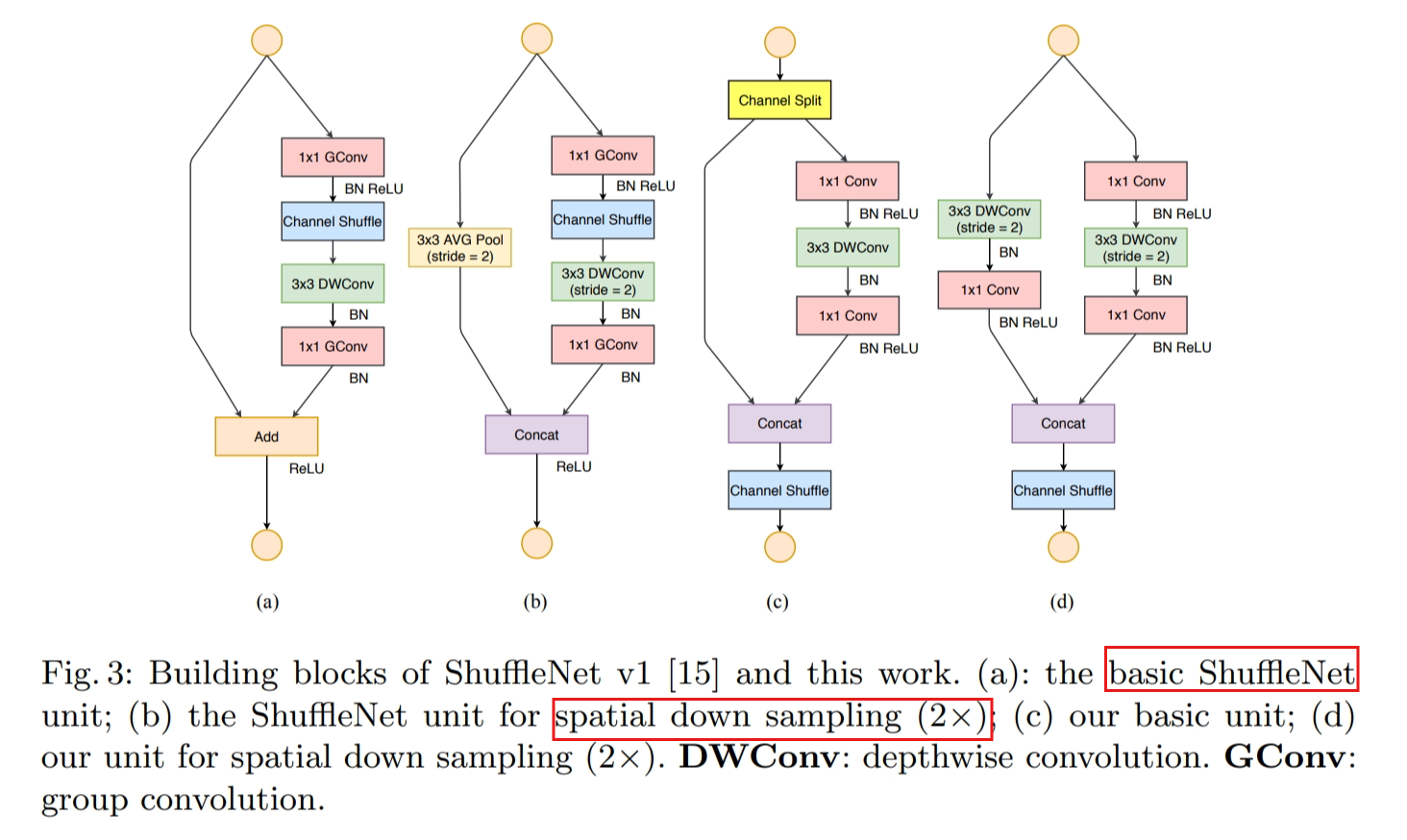

The 1x1 expansion convolution is typically used to increase the number of channels in the feature maps, while the 1x1 projection convolution reduces it again. Together, the two form the channel mixer.

The depthwise convolution serves as the token mixer.

The squeeze-and-excitation module is also moved up to follow the depthwise filters, since it depends on spatial information interaction.
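To make the token-mixer/channel-mixer split concrete, here is a minimal framework-free sketch in plain Python. It assumes a MetaFormer-style residual around each mixer, a ReLU/sigmoid bottleneck inside the SE module, and a GELU between the two 1x1 convolutions; normalization layers and any training-time branch structure are omitted. All function names are hypothetical, and this is not the authors' implementation.

```python
import math
from typing import List

Grid = List[List[float]]       # one channel: H x W
FeatureMap = List[Grid]        # C channels
Matrix = List[List[float]]     # [out][in] weights of a 1x1 conv / FC layer


def depthwise3x3(x: FeatureMap, kernels: List[Matrix]) -> FeatureMap:
    """Token mixer: 3x3 depthwise conv (stride 1, zero padding 1).
    Each channel has its own kernel: spatial mixing, no channel mixing."""
    out = []
    for grid, k in zip(x, kernels):
        h, w = len(grid), len(grid[0])
        res = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in range(-1, 2):
                    for dj in range(-1, 2):
                        if 0 <= i + di < h and 0 <= j + dj < w:
                            acc += k[di + 1][dj + 1] * grid[i + di][j + dj]
                res[i][j] = acc
        out.append(res)
    return out


def pointwise(x: FeatureMap, weights: Matrix) -> FeatureMap:
    """1x1 conv: mixes channels independently at every spatial position."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(wo[c] * x[c][i][j] for c in range(len(x))) for j in range(w)]
             for i in range(h)] for wo in weights]


def squeeze_excite(x: FeatureMap, w_reduce: Matrix, w_expand: Matrix) -> FeatureMap:
    """SE: pool each channel globally, run a bottleneck MLP (ReLU, then
    sigmoid), and rescale each channel by its gate."""
    pooled = [sum(map(sum, g)) / (len(g) * len(g[0])) for g in x]
    hidden = [max(0.0, sum(a * b for a, b in zip(row, pooled))) for row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, hidden))))
             for row in w_expand]
    return [[[v * s for v in r] for r in g] for g, s in zip(x, gates)]


def gelu_map(x: FeatureMap) -> FeatureMap:
    return [[[0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0))) for v in r] for r in g] for g in x]


def add(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    return [[[p + q for p, q in zip(ra, rb)] for ra, rb in zip(ga, gb)]
            for ga, gb in zip(a, b)]


def repvit_style_block(x: FeatureMap, dw_kernels: List[Matrix], se_reduce: Matrix,
                       se_expand: Matrix, w_expand: Matrix, w_project: Matrix) -> FeatureMap:
    # Token mixer: depthwise conv, then SE (placed right after it), plus residual.
    y = add(x, squeeze_excite(depthwise3x3(x, dw_kernels), se_reduce, se_expand))
    # Channel mixer: 1x1 expansion -> GELU (assumed) -> 1x1 projection, plus residual.
    return add(y, pointwise(gelu_map(pointwise(y, w_expand)), w_project))


x = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
     [[0.5] * 3 for _ in range(3)]]                                # C=2, H=W=3
ident = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
out = repvit_style_block(
    x, dw_kernels=[ident, ident],
    se_reduce=[[0.1, 0.1]], se_expand=[[1.0], [1.0]],              # C -> C/2 -> C
    w_expand=[[0.1, 0.0], [0.0, 0.1], [0.1, 0.1], [0.0, 0.0]],     # C -> 2C
    w_project=[[0.1, 0.1, 0.1, 0.1], [0.1, 0.0, 0.0, 0.1]])        # 2C -> C
print(len(out), len(out[0]), len(out[0][0]))  # 2 3 3
```

The block preserves the input shape, so blocks of this kind can be stacked within a stage exactly as MetaFormer blocks are.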

Reducing the expansion ratio and increasing width

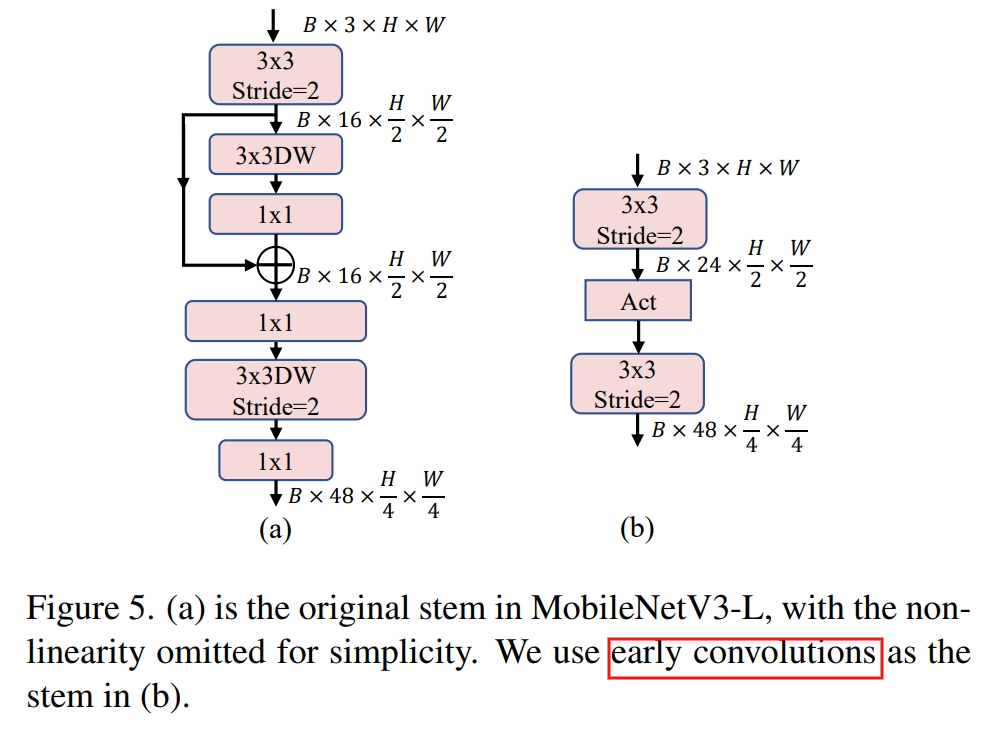

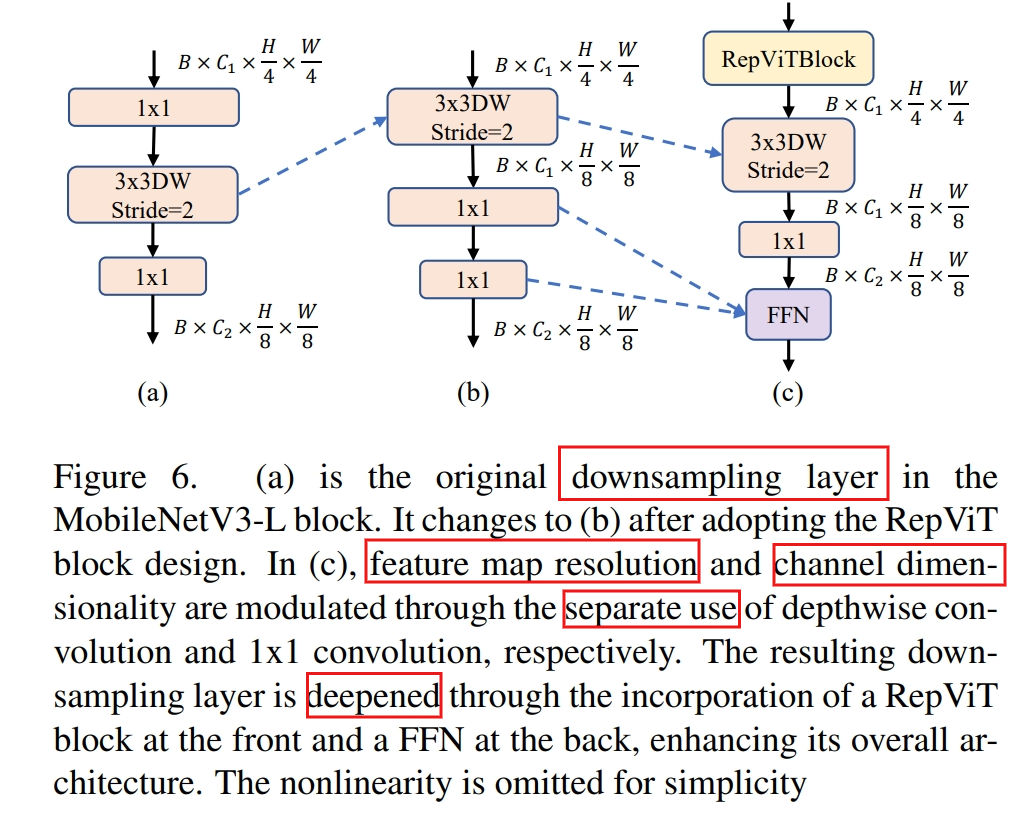
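One way to see why trading expansion ratio for width can pay off is to count weights. The sketch below is an illustrative calculation, not the paper's analysis: the widths and ratios are hypothetical, biases and normalization are ignored, and the function names are made up for this example.

```python
def channel_mixer_params(width: int, ratio: int) -> int:
    """Weights of a 1x1 expansion (width -> ratio*width) plus a 1x1
    projection (ratio*width -> width); biases and norms are ignored."""
    hidden = ratio * width
    return width * hidden + hidden * width


def token_mixer_params(width: int, kernel: int = 3) -> int:
    """Weights of a kernel x kernel depthwise conv: one filter per channel."""
    return width * kernel * kernel


# Illustrative (width, expansion ratio) pairs, not the paper's configuration.
for width, ratio in [(64, 4), (64, 2), (96, 2)]:
    print(width, ratio, channel_mixer_params(width, ratio), token_mixer_params(width))
# 64 4 32768 576
# 64 2 16384 576
# 96 2 36864 864
```

The channel mixer dominates the weight count and scales with both the ratio and the square of the width, so halving the ratio frees enough budget for roughly 1.5x the width (32,768 vs 36,864 weights in this example).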

Macro design

The patchifying operation has been found to cause ViTs’ substandard optimizability and sensitivity to training recipes. To address these issues, a small number of stacked stride-2 3x3 convolutions has been suggested as an alternative architectural choice for the stem, known as early convolutions.
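The resolution arithmetic behind the two stem designs can be checked with the standard convolution output-size formula. In this sketch the 16x16 patch size matches the ViT setting above, while the choice of two stride-2 layers for the early-convolution stem is an illustrative assumption; the function names are hypothetical.

```python
from typing import List


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def patchify_stem(size: int, patch: int = 16) -> int:
    # ViT-style stem: a single kernel=patch, stride=patch conv, no padding.
    return conv_out(size, patch, patch, 0)


def early_conv_stem(size: int, num_layers: int = 2) -> List[int]:
    # Early-convolution stem: stacked 3x3 stride-2 convs with padding 1.
    sizes = [size]
    for _ in range(num_layers):
        sizes.append(conv_out(sizes[-1], 3, 2, 1))
    return sizes


print(patchify_stem(224))    # 14
print(early_conv_stem(224))  # [224, 112, 56]
```

The patchify stem jumps from 224 to 14 in one large, non-overlapping step, whereas the early-convolution stem downsamples gradually with small, overlapping kernels.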

Deeper down-sampling layers

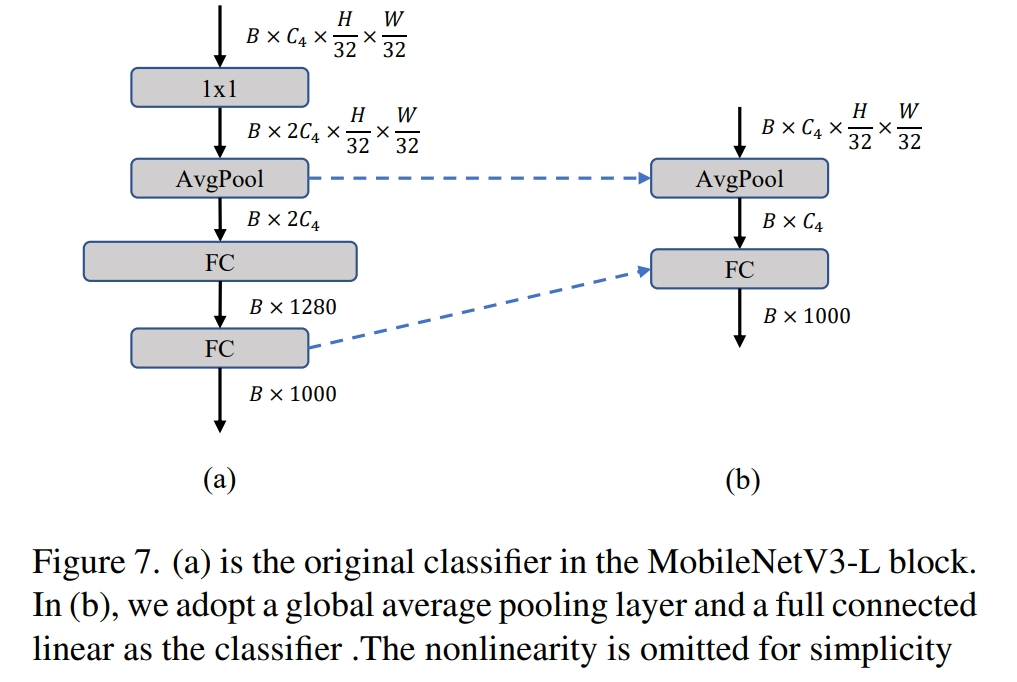

Simple classifier
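The notes only name a "simple classifier". A common minimal head, assumed here rather than taken from the text, is global average pooling followed by a single linear layer. The plain-Python sketch below shows that head; the feature-map layout `[channel][row][col]` and the function names are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]   # [channel][row][col]


def global_avg_pool(x: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions: one value per channel."""
    return [sum(map(sum, grid)) / (len(grid) * len(grid[0])) for grid in x]


def linear(features: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: one logit per class."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]


def simple_classifier(x: FeatureMap, weights: List[List[float]], bias: List[float]) -> List[float]:
    return linear(global_avg_pool(x), weights, bias)


x = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [2.0, 2.0]]]   # C=2, H=W=2
logits = simple_classifier(x, weights=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                           bias=[0.0, 0.0, 0.5])
print(logits)  # [4.0, 1.0, 5.5]
```

Pooling first makes the head's cost independent of the spatial resolution; only the channel count and the number of classes matter.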

Overall stage ratio

Stage ratio represents the ratio of the number of blocks in different stages, thereby indicating the distribution of computation across the stages.

The original stage ratio of MobileNetV3-L is 1:2:5:2. We follow [19] in employing a better stage ratio of 1:1:7:1 for the network, and then increase the network depth to 2:2:14:2, achieving a deeper layout [19, 24]. This step increases the top-1 accuracy to 76.9% with a latency of 0.91 ms.
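The stage-ratio bookkeeping above can be reproduced with a few lines of Python. Note that this sketch counts blocks only; because stages differ in width and resolution, the share of blocks is a proxy for, not an exact measure of, the share of computation. The function names are hypothetical.

```python
from typing import List


def scale_stage_ratio(ratio: List[int], factor: int) -> List[int]:
    """Scale every stage's block count by the same integer factor."""
    return [r * factor for r in ratio]


def block_share(blocks: List[int]) -> List[float]:
    """Fraction of all blocks that each stage holds."""
    total = sum(blocks)
    return [b / total for b in blocks]


for name, blocks in [("MobileNetV3-L", [1, 2, 5, 2]),
                     ("1:1:7:1", [1, 1, 7, 1]),
                     ("deeper", scale_stage_ratio([1, 1, 7, 1], 2))]:
    shares = ", ".join(f"{s:.0%}" for s in block_share(blocks))
    print(f"{name:14s} blocks={blocks} total={sum(blocks)} shares=[{shares}]")
```

Moving from 1:2:5:2 to 1:1:7:1 shifts the third stage's share of blocks from 50% to 70%; doubling to 2:2:14:2 keeps those proportions while doubling the total depth from 10 to 20 blocks.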

Micro design

Kernel size selection

Large-kernel convolutions are not friendly to mobile devices because of their computational complexity and memory access costs.
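The computational side of this claim is easy to quantify: a depthwise convolution's multiply-accumulate (MAC) count grows with the square of the kernel size. The feature-map size below is an illustrative assumption, not a figure from the paper, and MAC counts do not capture memory access costs directly.

```python
def depthwise_macs(height: int, width: int, channels: int, kernel: int) -> int:
    """MACs of a stride-1, 'same'-padded depthwise conv: every output
    position of every channel performs kernel * kernel MACs."""
    return height * width * channels * kernel * kernel


# Illustrative feature-map size (not taken from the paper).
h, w, c = 56, 56, 64
base = depthwise_macs(h, w, c, 3)
for k in (3, 5, 7):
    macs = depthwise_macs(h, w, c, k)
    print(f"{k}x{k}: {macs:,} MACs ({macs / base:.2f}x the 3x3 cost)")
```

Going from 3x3 to 7x7 multiplies the MAC count by 49/9 (about 5.44x), and each output also reads proportionally more inputs, which hints at the extra memory traffic.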

Squeeze-and-excitation layer placement

Network architecture

We develop multiple RepViT variants, including RepViT-M0.9/M1.0/M1.1/M1.5/M2.3. The suffix “-MX” means that the latency of the model is X ms. RepViT-M0.9 is the outcome of the “modernizing” process applied to MobileNetV3-L. The variants differ in the number of channels and the number of blocks within each stage.